Getting started with Chaos Engineering on Kubernetes

Chaos Engineering on Kubernetes

Kubernetes is built for high reliability. Mechanisms like ReplicaSets, Horizontal Pod Autoscaling (HPA), liveness probes, and highly available (HA) cluster configurations are designed to keep your workloads running reliably, even if a critical component fails.

However, this doesn't mean Kubernetes is immune to failure. Containers, hosts, and even the cluster itself are susceptible to many kinds of faults. These faults aren't always obvious, and because of how dynamic and complex Kubernetes is, new and unique faults can emerge over time. It's not enough to configure your clusters for reliability: you also need a way to test them.

In this article, we explain how you can use the practice of Chaos Engineering to proactively test the reliability of your Kubernetes clusters and ensure your workloads can run reliably, even during a failure.

Why you need Chaos Engineering on Kubernetes

While Kubernetes is designed for reliability, that doesn't mean reliability is guaranteed. There are still many ways that a Kubernetes cluster can fail. We'll explore three causes of failure in particular:

- Complexity

- Configuration

- Cluster management

Kubernetes is complex

Kubernetes is a versatile tool with countless use cases, features, and functions. While this complexity makes it more useful for different teams, it also impedes reliability. Every feature and module in a system is a potential point of failure, which is why smaller (i.e., less complex) systems are often easier to test.

For example, Kubernetes uses a complex algorithm to determine where to deploy new Pods. Teams new to Kubernetes might not understand how this algorithm works. They might scale a Deployment to three Pods and assume each Pod will run on a separate node. However, Kubernetes uses a variety of factors to choose a node, which could result in all three Pods running on the same node. This eliminates redundancy benefits since a single node failure will take down all three Pods and the entire application.
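One way to rule out the "all three Pods on one node" outcome is a Pod anti-affinity rule. The sketch below is a minimal illustration, not a recommended production configuration: it builds a Deployment manifest as a Python dictionary and prints it as JSON. The function name `spread_deployment`, the app name `web`, and the image tag are hypothetical; the manifest fields (`podAntiAffinity`, `requiredDuringSchedulingIgnoredDuringExecution`, `topologyKey`) are standard Kubernetes fields.

```python
import json


def spread_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a Deployment manifest whose Pods must run on different nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": {
                        "podAntiAffinity": {
                            # Hard rule: never co-locate two Pods carrying
                            # these labels on the same hostname (node).
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {
                                    "labelSelector": {"matchLabels": labels},
                                    "topologyKey": "kubernetes.io/hostname",
                                }
                            ]
                        }
                    },
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Hypothetical example values; replace with your own application.
print(json.dumps(spread_deployment("web", "nginx:1.25"), indent=2))
```

Because the rule is a hard requirement, a cluster with fewer schedulable nodes than replicas will leave the extra Pods pending. That trade-off is itself worth testing with a node failure experiment.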

Teams also need to know how to respond to problems with their Kubernetes cluster. An unclear failure in an obscure component could take hours to track down and troubleshoot if engineers aren't familiar with Kubernetes. This is why teams adopting Kubernetes should use Chaos Engineering: as they run tests, they learn more about how Kubernetes could potentially fail and how to address those failures if they happen again.

Misconfigurations can impact reliability

Despite its many automated systems, Kubernetes expects engineers to know how to configure it correctly. You declare which applications to run and specify the parameters for running each application, and Kubernetes does its best to satisfy those parameters. If anything is missing from your declaration, Kubernetes falls back on its own defaults, which may not suit your application. This can lead to unexpected problems, even after successfully deploying your applications.

For example, Kubernetes lets you limit the resources allocated to your applications (e.g., CPU, RAM). Without these limits, workloads can consume as many resources as they want until the host is at capacity. Imagine a team deploys a Pod that has a memory leak or that requires significant resources, like a machine learning process. A Pod that starts off using a few hundred MB of RAM could grow to use several GB. If you don't have limits set, nothing stops that growth until the node runs low on memory, at which point Kubernetes may terminate the Pod or evict it from the host to allow other Pods to continue running.
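Missing limits are easy to check for before you run any experiment. The following is a minimal sketch, assuming a Deployment-style manifest already loaded into a dictionary (Pod template at `spec.template.spec`). The function name and the sample manifest are hypothetical.

```python
def containers_missing_limits(manifest: dict) -> list[str]:
    """Return the names of containers that lack a CPU or memory limit."""
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    missing = []
    for container in pod_spec.get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        if "cpu" not in limits or "memory" not in limits:
            missing.append(container["name"])
    return missing


# Hypothetical manifest: "api" sets both limits, "trainer" sets none.
deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {"name": "api", "image": "example/api:1.0",
         "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}},
        {"name": "trainer", "image": "example/trainer:1.0"},
    ]}}},
}

print(containers_missing_limits(deployment))  # ['trainer']
```

Any container this flags is a good first target for a memory or CPU experiment, since its behavior under pressure is undefined by your configuration.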

These types of Kubernetes configuration mistakes are common but can be detected using proactive testing with Chaos Engineering.

Bad node management can lead to downtime

Kubernetes clusters have two types of nodes: worker nodes, which run your containerized workloads, and master nodes (also called control plane nodes), which control the cluster. Ideally, all of these nodes should be replaceable. Workloads should be free to migrate between nodes without being tied to a specific one (except for StatefulSets), and failed nodes should be replaceable without downtime or data loss. This avoids having single points of failure and makes scaling much easier.

Problems can occur with this setup, though. Small, non-scalable, or misconfigured clusters might not have enough resources to accommodate the workloads you're trying to deploy. Affinity and anti-affinity rules, or taints on the nodes themselves, can prevent certain Pods from running on certain nodes. Nodes can also suffer from "noisy neighbors," where some workloads consume a disproportionate amount of resources compared to others.

None of these are problems specific (or even unique) to Kubernetes, but engineers must still be aware of them when configuring clusters.

Other things can go wrong

Sometimes things happen that we can't anticipate:

- What happens if a worker node has a kernel panic and fails to reboot, leaving it stuck in an unavailable state?

- What if a Pod enters a CrashLoopBackOff state and Kubernetes can't automatically reschedule it?

- What happens if a majority of our master nodes fail? Can they rejoin the cluster without any issues, or will that result in a split-brain cluster?

Problems might not become apparent until the system is already live in production and experiencing load. Testing stability, scalability, and error handling without real-world traffic patterns is hard. This is another reason why proactive reliability testing is crucial.
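The CrashLoopBackOff question is one you can check for directly. A minimal sketch, assuming you have the JSON produced by `kubectl get pods -o json` loaded into a dictionary: the function name and the sample data are hypothetical, while the fields read here (`status.containerStatuses[].state.waiting.reason` and `restartCount`) are part of the standard Pod status.

```python
def crash_looping(pod_list: dict) -> list[tuple[str, str, int]]:
    """Return (pod, container, restart count) for containers in CrashLoopBackOff."""
    found = []
    for pod in pod_list.get("items", []):
        for status in pod.get("status", {}).get("containerStatuses", []):
            # "waiting" is absent when the container is running or terminated.
            waiting = status.get("state", {}).get("waiting") or {}
            if waiting.get("reason") == "CrashLoopBackOff":
                found.append(
                    (pod["metadata"]["name"], status["name"], status.get("restartCount", 0))
                )
    return found


# Hypothetical sample shaped like `kubectl get pods -o json` output.
pods = {"items": [
    {"metadata": {"name": "web-1"},
     "status": {"containerStatuses": [
         {"name": "web", "restartCount": 0, "state": {"running": {}}}]}},
    {"metadata": {"name": "worker-1"},
     "status": {"containerStatuses": [
         {"name": "worker", "restartCount": 7,
          "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
]}

print(crash_looping(pods))  # [('worker-1', 'worker', 7)]
```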

Remember that the benefits of Chaos Engineering on Kubernetes aren't exclusively technical. As engineers test their clusters, identify failure modes, and implement fixes, they're also learning how to detect and respond to problems. This contributes to a more mature incident response process where engineering teams have comprehensive plans for detecting and handling issues in production. Chaos Engineering lets teams recreate failure conditions so engineers can put these plans into practice, verify their effectiveness, and, most importantly, keep their systems and applications running.

Challenges with Chaos Engineering on Kubernetes

Chaos Engineering on Kubernetes has many benefits, but teams often hesitate to run Chaos Engineering experiments for several reasons.

For one, Chaos Engineering can potentially uncover many Kubernetes-specific issues that teams aren't aware of. Discovering one problem could lead teams to find other problems, adding to the backlog of issues to address.

Additionally, while engineering teams are likely fine with running Chaos Engineering experiments on pre-production clusters, they're less willing to run experiments on production clusters. According to the 2021 State of Chaos Engineering report, only 34% of Gremlin customers are running chaos experiments in production. The problem is that production is a unique environment with unique quirks and behaviors. No matter how much teams try to replicate it in a test or staging environment, it'll never be quite the same. The only way to ensure your clusters are reliable is by running experiments in production.

Even though pre-production won't accurately reflect production, it's still a good starting point, assuming you have a well-developed DevOps pipeline. Start by running Chaos Engineering experiments in pre-production and addressing issues as you find them. Many of your fixes will likely carry over into your production environment. As you learn more about configuring Kubernetes and safely running Chaos Engineering experiments, start moving your experiments over to production. This ensures that you're testing and finding production-specific failure modes.

Getting started with Chaos Engineering on Kubernetes

Once you're ready to run Chaos Engineering experiments on Kubernetes, here are the steps you need to follow:

- Define your Kubernetes Chaos Engineering tests

- Select a Chaos Engineering tool for Kubernetes

- Set up your environment

- Run tests and collect results

Define your Kubernetes Chaos Engineering tests

Deciding which tests to run can be difficult for engineers new to Chaos Engineering. Chaos Engineering tools come with many tests, each with several customizable parameters. How do you determine which test to run and where to run it?

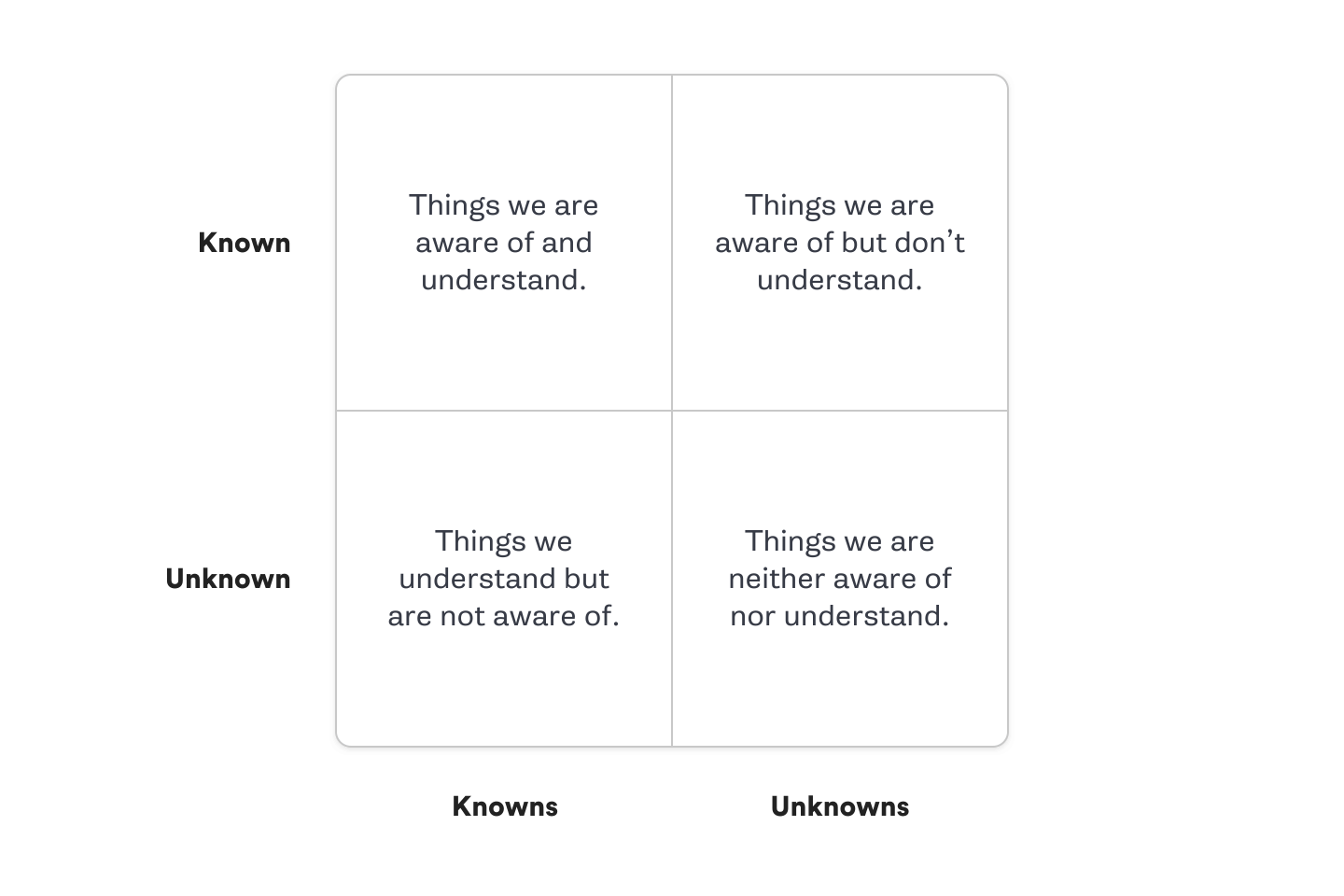

The best way to start is by separating potential issues into four categories:

- Known Knowns: Things you are aware of and understand.

- Known Unknowns: Things you are aware of but don't fully understand.

- Unknown Knowns: Things you understand but are not aware of.

- Unknown Unknowns: Things you are neither aware of nor understand.

While learning, focus on the "Known Unknowns" quadrant. These are characteristics of Kubernetes that you're aware of but don't fully understand. For example, you might know that Kubernetes uses several criteria to determine which node to schedule a Pod onto, but without knowing exactly what those criteria are. As you build your Chaos Engineering practice, gradually branch into other quadrants.

You can start by testing these common failure vectors:

- Network exhaustion: What happens to your workloads when your network is experiencing high traffic or latency?

- Pod failure: What happens to your application when a Pod fails? Does Kubernetes automatically restart the Pod? Does it migrate to a different node? Do you have enough replicas available so this failure doesn't take your application offline?

- Node failure: What happens when one of your nodes fails? Does Kubernetes automatically restart the Pods on a different node? Do you have enough extra capacity in your cluster to handle this migration? Does your cluster automatically restart the failed node or provision a new node, or does an engineer need to intervene manually?

- High CPU load: What happens when one of your Pods uses excessive CPU time? Does this cause other Pods to slow down (i.e., a noisy neighbor)? Does Kubernetes migrate it to a host with more CPU capacity? Does Kubernetes evict it?

- Memory exhaustion: What happens if a Pod has a memory leak? Does it keep consuming RAM until it crashes? Does the node (or Kubernetes itself) crash? Or does Kubernetes stop/evict the Pod before that happens?

- New deployment failures: What happens if we deploy a new application while the cluster lacks capacity? Does the cluster automatically scale? Does Kubernetes hold the application in a pending state while scaling, or does it prevent the application from deploying?

- Horizontal Pod Autoscaling (HPA): What happens to a deployment when it reaches its resource limits? Does Kubernetes scale it based on its HPA rules? Can the deployment scale fast enough to handle the incoming load, or does the original Pod crash?

- Container startup failures: How does Kubernetes manage Pods that crash on startup? Can it restart them successfully, or does it leave them in a CrashLoopBackOff state?

- Dependency failures: What happens to a Pod when it can no longer contact a dependency, such as a database? Did you build in sufficient retry and fallback logic, or are there unexpected and unhandled issues that arise?

This isn't an exhaustive list, but it presents many of the most common problems teams encounter with Kubernetes. As you look at each failure vector, consider whether your team has encountered it before and whether you've implemented fixes for it. If not, you've identified a potential issue that Chaos Engineering can help you understand well enough to address. If so, Chaos Engineering is a great way to validate your fixes.
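For the HPA item in particular, it helps to predict what Kubernetes should do before you apply load. The sketch below follows the scaling formula described in the Kubernetes documentation, `ceil(currentReplicas * currentMetric / targetMetric)`, with a tolerance band around the target. It is a simplification that ignores stabilization windows, scaling policies, and unready Pods. The function name and the replica bounds are hypothetical, and the 0.1 tolerance is assumed to match your cluster's setting.

```python
import math


def desired_replicas(
    current: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Estimate the replica count an HPA would request for one metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the autoscaler leaves the count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Clamp to the bounds configured on the HPA.
    return max(min_replicas, min(max_replicas, desired))


# 2 replicas at 90% average CPU against a 50% target.
print(desired_replicas(2, 90, 50))  # 4
```

If your experiment pushes CPU to 90% and the Deployment does not reach the predicted count, or takes too long to get there, that gap is the finding.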

How to select a Kubernetes Chaos Engineering tool

There are several Chaos Engineering tools for Kubernetes, including (but not limited to): Gremlin, Chaos Mesh, LitmusChaos, and Chaos Toolkit. How do you decide which one to use? There are a few qualities you should look for when comparing Chaos Engineering solutions for Kubernetes:

- Ease of use: How hard is it to use the product? How many steps does it take to run your first chaos experiment?

- Metrics and reporting: What kind of feedback does the solution provide? Does it give you actionable output based on the results of each experiment, or does it simply tell you whether the experiment is finished? Does it provide an analysis of the results, such as recommendations or scores?

- Fail safety and recoverability: Does this tool provide an "emergency stop" feature to halt and roll back ongoing experiments? How long does it take to revert your systems to normal? Does it have built-in failure-safety systems that will stop an experiment if it detects something went wrong (e.g., the tool lost connection to the systems it's testing)?

- Support for different fault types: What experiments can you run using this tool? Does it support the platforms you use besides Kubernetes? Do the different fault types give you everything you need to perform your desired tests? Does the tool let you define custom faults or workflows?

How to set up your Kubernetes environment for Chaos Engineering

Unfortunately, starting with Chaos Engineering isn't as simple as installing a tool and clicking "Run." Some additional steps are needed before you can get the most value out of Chaos Engineering.

First, you need observability. You'll need insight into your systems' behaviors to accurately determine an experiment's impact. Before running an experiment, observe the system under normal conditions. This lets you understand how the system behaves without any added stress. You'll want to collect a combination of the following:

- Node and Pod metrics, such as CPU, RAM, network, and disk utilization. These tell you how well your cluster performs and whether it's reaching its capacity.

- App-specific metrics, such as request latency, request throughput, and error rate. These tell you how well your application is performing from the perspective of your end users.
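Whatever tool collects these metrics, the baseline itself can be a handful of summary numbers. A minimal sketch, assuming you have exported a list of request latency samples in milliseconds from normal operation: the function name and sample values are hypothetical, and the 95th percentile uses the simple nearest-rank method.

```python
import statistics


def summarize_baseline(samples: list[float]) -> dict:
    """Summarize steady-state samples as mean, nearest-rank p95, and max."""
    ordered = sorted(samples)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers.
    rank = (95 * len(ordered) + 99) // 100
    return {
        "mean": statistics.fmean(ordered),
        "p95": ordered[rank - 1],
        "max": ordered[-1],
    }


# Hypothetical latency samples (ms) gathered before any experiment runs.
latencies_ms = [120, 130, 125, 140, 135, 128, 132, 138, 126, 300]
print(summarize_baseline(latencies_ms))
```

Recording the p95 alongside the mean matters because a single slow outlier, like the 300 ms sample here, barely moves the mean but is exactly what end users notice.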

Second, create a process for informing other teams and stakeholders in your organization that a Chaos Engineering event is underway. This serves two purposes:

- It warns those teams that any unusual or unexpected behaviors might not be actual incidents but temporary side effects of the experiments.

- It invites other teams to contribute to the experiments. For example, if another team already knows how Kubernetes responds to certain types of tests, they could help design the test parameters.

Third, have a rollback or recovery plan on hand, especially when testing in production. Have a way to stop any active experiments quickly and, if necessary, roll back any changes to your systems. Share this plan with your teammates and ensure everyone knows what roles they must fill if the plan ever goes into action. Additionally, make the plan available to other teams, so they know there's a process in place. Even if you're testing in pre-production, build a plan anyway since pre-production is a great place to test the effectiveness of a plan without impacting real customers.

Run tests and collect results

When you're ready to run tests, double-check to make sure you have everything in place:

- Do you have your baseline metrics to compare against?

- Is your monitoring/observability tool open and showing your key metrics?

- Do you have your emergency response plan on hand?

If you have all these, you can start running your test(s). While the tests run, monitor your key observability metrics, especially customer-impacting metrics. Be prepared to stop the test and roll back its impact if you notice a significant or sustained drop in customer-facing metrics.
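It helps to decide what "significant or sustained" means before the test starts. A minimal sketch of one possible halt rule, assuming you sample error rate at a fixed interval: the function name and the thresholds are hypothetical and should come from your own baseline and risk tolerance.

```python
def should_halt(
    error_rates: list[float],
    baseline_rate: float,
    max_increase: float = 0.05,
    sustained: int = 3,
) -> bool:
    """True if the last `sustained` samples all exceed baseline + max_increase."""
    if len(error_rates) < sustained:
        return False
    threshold = baseline_rate + max_increase
    return all(rate > threshold for rate in error_rates[-sustained:])


# Baseline error rate of 1%; halt after three consecutive samples above 6%.
print(should_halt([0.01, 0.02, 0.09, 0.10, 0.12], baseline_rate=0.01))  # True
print(should_halt([0.01, 0.09, 0.02, 0.10, 0.11], baseline_rate=0.01))  # False
```

Requiring several consecutive samples avoids stopping on a single noisy reading, at the cost of reacting a few intervals later.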

After the test, review your observations before coming up with a conclusion. Ask questions like:

- Did your systems pass or fail the test according to your metrics?

- How significantly did your metrics change compared to the baseline? Was this more or less significant than you expected?

- Did the test cause changes in any unexpected metrics? For example, did any seemingly unrelated alarms fire as a direct result of your test?

- Did the test complete and your systems return to normal, or did you have to use your emergency response plan? If you did use the plan, did it work as expected? Do you need to adjust it so it's more effective/easier to follow?
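The first two questions can be answered mechanically if you agreed on a tolerance up front. The sketch below is one simple way to do that, assuming every metric is "higher is worse" (latency, error rate) and has a non-zero baseline. The function name, metric names, and the 20% tolerance are hypothetical.

```python
def verdict(baseline: dict, observed: dict, tolerance: float = 0.20) -> dict:
    """Report each metric's relative change and whether it stayed within tolerance."""
    report = {}
    for metric, base in baseline.items():
        change = (observed[metric] - base) / base
        report[metric] = {"change": round(change, 3), "passed": change <= tolerance}
    return report


# Hypothetical numbers: latency rose 15%, error rate rose 50%.
before = {"p95_latency_ms": 200, "error_rate": 0.010}
during = {"p95_latency_ms": 230, "error_rate": 0.015}

for name, result in verdict(before, during).items():
    print(name, result)
```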

Based on these answers, define a plan to remediate any issues in your systems or processes. Remember: this isn't just a test of your systems but a test of your engineers.

After implementing these fixes, repeat your tests to vet your changes. Repeat this process to maintain reliable systems.

The Gremlin approach to Chaos Engineering on Kubernetes

Kubernetes is core to many organizations' infrastructures, but it's only one part. After you've tested its reliability, how do you move on to the rest of your infrastructure and test everything?

Gremlin supports Chaos Engineering on Kubernetes, hosts, containers, and services. With Gremlin Reliability Management (RM), you define your service, and Gremlin automatically provides a suite of pre-built reliability tests that you can run in a single click. These tests cover the vast majority of reliability use cases, including:

- Scalability while under heavy load.

- Redundancy, or the ability to continue operating even when one or more hosts, containers, or even cloud regions goes offline.

- Resilience to slow or unavailable dependencies, such as databases.

For teams looking to run more advanced tests, Gremlin Fault Injection (FI) lets you run custom Chaos Engineering faults such as packet loss, process termination, disk I/O usage, and more. You can also create custom fault injection workflows for creating complex scenarios, such as cascading failures.

In both cases, Gremlin provides a single-pane-of-glass view into all your reliability tests across your infrastructure. You and your team can easily monitor tests, see the reliability posture of each of your services, and even halt and roll back tests with just a single click.

If you're ready to try Gremlin, sign up for a free trial by visiting gremlin.com/trial. If you want to learn more about Gremlin, get a demo from one of our reliability experts.
