Using Observability to Automatically Generate Chaos Experiments

Lightstep and Gremlin both recognize that reliability is a major challenge for software organizations, and we share the view that the value of software decreases as its reliability degrades. We’ve built observability (Lightstep) and Chaos Engineering (Gremlin) solutions to help SREs and platform engineers optimize and debug complex, distributed software systems so that their teams can build more reliable products, fast.

Unlike traditional APM, Lightstep’s observability platform gives developers deep insight into what changed in their systems. Rather than querying or grepping logs, developers immediately get insights that help them find the root cause.

Chaos Engineering is the practice of performing intentional experimentation on a system by injecting precise and measured amounts of harm to observe how the system responds for the purpose of improving the system’s resilience.

We can observe the impact of a chaos experiment on any monitoring dashboard, but what if we could use observability data to decide which chaos experiment to run in the first place? Using tracing data, we can scale our attacks to target not just the service itself but every dependency down the line. This lets us simulate cascading latency and build reliability across all the services and teams in our applications.

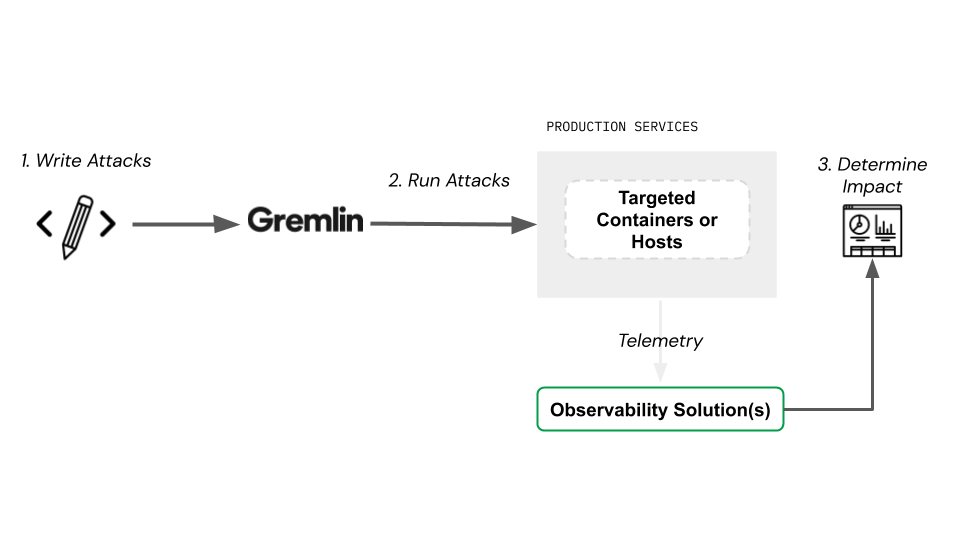

When combined with Gremlin as part of a chaos experiment, Lightstep works in two ways:

- It reports where a service was impacted and why (Image 1).

- It informs how to create the next chaos experiments (Image 2).

With a little bit of code, it’s possible to create chaos experiments automatically from observability data.

Observability used to validate a chaos experiment hypothesis

A developer designs an attack based on their understanding of how things work in production. Gremlin manages the attack, targeting hosts, containers, Kubernetes, or applications depending on the service architecture. The impact is measured through various types of telemetry (metrics, logs, traces), and an observability solution, dashboard, or CLI is used to understand what actually caused the change.
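Stated as code, the validation step compares telemetry against a hypothesis written down before the attack. A minimal sketch, assuming latency samples in milliseconds have already been exported for a baseline window and for the attack window; `percentile`, `evaluateHypothesis`, the p99 criterion, and the sample numbers are hypothetical illustrations, not part of the Lightstep or Gremlin APIs:

```javascript
// Hypothetical helper: nearest-rank percentile of an array of numbers.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  // Nearest-rank: smallest value with at least p% of samples at or below it.
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Hypothetical hypothesis: "p99 latency during the attack stays within maxP99Ms".
function evaluateHypothesis(baselineMs, attackMs, maxP99Ms) {
  const baselineP99 = percentile(baselineMs, 99);
  const attackP99 = percentile(attackMs, 99);
  return { baselineP99, attackP99, holds: attackP99 <= maxP99Ms };
}

// Made-up samples, for illustration only.
const baseline = [120, 130, 125, 140, 135];
const underAttack = [900, 1200, 1100, 5000, 950];
console.log(evaluateHypothesis(baseline, underAttack, 2000));
```

Writing the hypothesis as a concrete threshold before the attack keeps the outcome unambiguous: either the p99 stayed within bounds or it did not, and the observability tooling then explains why.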

Observability used to design chaos experiments

Service relationships in a distributed system are a perfect place to test latency and errors. Distributed traces describe the latency and error properties of service-to-service relationships in a system. With this context, we can use traces to define chaos experiments automatically and programmatically.
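To make the "error properties" of an edge concrete: each span whose parent belongs to a different service represents one call across a service-to-service edge, so an edge's error rate is the fraction of those spans marked as errors. A minimal sketch, assuming a simplified, hypothetical span shape (`spanId`, `parentId`, `service`, `error`) rather than the actual Lightstep API format; `edgeErrorRates` is an illustrative name:

```javascript
// Hypothetical, simplified span shape (not the actual Lightstep API format):
// { spanId, parentId, service, error }

// Fraction of calls on each "caller->callee" edge that ended in an error.
function edgeErrorRates(spans) {
  const spansById = new Map(spans.map((s) => [s.spanId, s]));
  const counts = {};
  for (const span of spans) {
    const parent = spansById.get(span.parentId);
    // Skip root spans and calls that stay within the same service.
    if (!parent || parent.service === span.service) continue;
    const key = `${parent.service}->${span.service}`;
    if (!counts[key]) counts[key] = { calls: 0, errors: 0 };
    counts[key].calls += 1;
    if (span.error) counts[key].errors += 1;
  }
  const rates = {};
  for (const [key, { calls, errors }] of Object.entries(counts)) {
    rates[key] = errors / calls;
  }
  return rates;
}

// Made-up spans, for illustration only.
const spans = [
  { spanId: "a", parentId: null, service: "frontend", error: false },
  { spanId: "b", parentId: "a", service: "cartservice", error: true },
  { spanId: "c", parentId: "a", service: "cartservice", error: false },
  { spanId: "d", parentId: "a", service: "currencyservice", error: false },
];
console.log(edgeErrorRates(spans));
```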

Service diagram to visualize service-to-service interactions

With distributed traces, Lightstep creates a full service diagram illustrating the relationships among all the services in your system, from the frontend to every downstream service.
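A service diagram is a directed graph, so finding every dependency "down the line" of a service is a graph traversal. A minimal sketch, assuming the diagram has been reduced to a plain adjacency map (sample data mirrors the Hipster Shop relationships described in this post; the `allDownstream` helper is hypothetical, not part of Lightstep’s API):

```javascript
// Service diagram as an adjacency map: caller -> direct downstream services.
const relationships = {
  frontend: ["currencyservice", "cartservice", "recommendationservice", "productcatalogservice"],
  recommendationservice: ["productcatalogservice"],
};

// Breadth-first traversal collecting every service reachable from `root`,
// i.e. its direct and transitive dependencies, each listed once.
function allDownstream(graph, root) {
  const seen = new Set([root]);
  const queue = [root];
  const result = [];
  while (queue.length > 0) {
    const service = queue.shift();
    for (const next of graph[service] || []) {
      if (seen.has(next)) continue;
      seen.add(next);
      result.push(next);
      queue.push(next);
    }
  }
  return result;
}

console.log(allDownstream(relationships, "frontend"));
```

Tracking visited services matters because `productcatalogservice` is reachable both directly and through `recommendationservice`; without the `seen` set it would be targeted twice.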

Snippet to automate generating chaos experiments

Developers can use the data that powers the service diagram (the traces from the Lightstep Streams API) to infer the relationships between services in their system and the typical duration of requests between them.
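One way to do that inference: join each span to its parent, and when the two belong to different services, record the child span’s duration under a "caller->callee" key. A minimal sketch, assuming spans have already been fetched and flattened into a simplified, hypothetical shape (`spanId`, `parentId`, `service`, `durationMs`; not the Streams API’s actual response format) with span IDs unique across traces. Using the median as the "typical" duration is our own choice for illustration:

```javascript
// Hypothetical, simplified span shape (not the actual Lightstep Streams API format):
// { spanId, parentId, service, durationMs }

function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Build a "caller->callee" -> typical duration (ms) table from many spans.
function typicalDurations(spans) {
  const spansById = new Map(spans.map((s) => [s.spanId, s]));
  const samples = {};
  for (const span of spans) {
    const parent = spansById.get(span.parentId);
    // Skip root spans and calls within the same service.
    if (!parent || parent.service === span.service) continue;
    const key = `${parent.service}->${span.service}`;
    if (!samples[key]) samples[key] = [];
    samples[key].push(span.durationMs);
  }
  const table = {};
  for (const [key, durations] of Object.entries(samples)) {
    table[key] = median(durations);
  }
  return table;
}

// Made-up spans, for illustration only.
const spans = [
  { spanId: "a", parentId: null, service: "frontend", durationMs: 3000 },
  { spanId: "b", parentId: "a", service: "cartservice", durationMs: 700 },
  { spanId: "c", parentId: "a", service: "cartservice", durationMs: 800 },
  { spanId: "d", parentId: "a", service: "cartservice", durationMs: 753 },
  { spanId: "e", parentId: "a", service: "recommendationservice", durationMs: 2820 },
  { spanId: "f", parentId: "e", service: "productcatalogservice", durationMs: 4 },
];
console.log(typicalDurations(spans));
```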

For example, using a trace collected from Lightstep’s Hipster Shop microservice environment, we see that the frontend service depends on four downstream services, and one of those services, recommendationservice, in turn depends on another service. Using Lightstep’s API, we can automatically build a table of service relationships and the duration of requests between those services, in milliseconds:

```javascript
{
  'frontend->currencyservice': 280,
  'frontend->cartservice': 753,
  'frontend->recommendationservice': 2820,
  'recommendationservice->productcatalogservice': 4,
  'frontend->productcatalogservice': 4,
}
```
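The attack-generation loop in this post iterates over `relationships.frontend`, which implies a caller-to-callees map alongside the duration table. The post does not show how the script builds it; one plausible way, assuming it is derived from the table’s "caller->callee" keys, is sketched below (`toRelationships` is a hypothetical name):

```javascript
// Duration table (ms) in the format shown above.
const duration = {
  "frontend->currencyservice": 280,
  "frontend->cartservice": 753,
  "frontend->recommendationservice": 2820,
  "recommendationservice->productcatalogservice": 4,
  "frontend->productcatalogservice": 4,
};

// Derive caller -> [callees] from the "caller->callee" keys.
function toRelationships(durationTable) {
  const result = {};
  for (const key of Object.keys(durationTable)) {
    const [caller, callee] = key.split("->");
    if (!result[caller]) result[caller] = [];
    result[caller].push(callee);
  }
  return result;
}

const relationships = toRelationships(duration);
console.log(relationships.frontend);
```

Keeping the duration table as the single source of truth means the relationship map can never list an edge that has no duration to scale.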

With this data, it’s possible to generate latency experiments in Gremlin that simulate realistic worst-case scenarios based on the architecture of the system itself. Our script, available on Lightstep’s GitHub, includes code that generates a “10x” latency attack on every downstream dependency of the frontend service by taking the normal service-to-service request latency and multiplying it by 10.

“10x latency” is an extreme example, but gradually tuning this value helps developers see how even small changes can lead to unexpected failures when dozens of services depend on each other.

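```javascript
// `relationships` (caller -> downstream services), `duration` (typical request
// latency in ms per "caller->callee" edge), and createGremlinLatencyAttack are
// defined elsewhere in the script; this loop must run inside an async function.
for (const downstream of relationships.frontend) {
  // Inject 10x the normal frontend->downstream request latency.
  const latencyx10 = duration[`frontend->${downstream}`] * 10;
  console.log(` creating latency attack for service ${downstream} with latency ${latencyx10}ms...`);
  const attackId = await createGremlinLatencyAttack(downstream, {
    latencyMs: latencyx10,
  });
  console.log(` created attack ${attackId} !`);
}
```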
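To tune the multiplier gradually rather than jumping straight to 10x, the same arithmetic can produce an escalating plan. A minimal sketch, assuming the duration table shown earlier and the same semantics as the loop (injected latency = typical duration × multiplier); `latencyRamp` and the multiplier steps are hypothetical choices:

```javascript
// Typical "caller->callee" durations (ms), from the table earlier in this post.
const duration = {
  "frontend->currencyservice": 280,
  "frontend->cartservice": 753,
  "frontend->recommendationservice": 2820,
  "recommendationservice->productcatalogservice": 4,
  "frontend->productcatalogservice": 4,
};

// Latency to inject (ms) for each direct dependency of `caller` at each
// multiplier, so an experiment can start small and escalate step by step.
function latencyRamp(durationTable, caller, multipliers) {
  const prefix = `${caller}->`;
  const plan = [];
  for (const multiplier of multipliers) {
    for (const [key, ms] of Object.entries(durationTable)) {
      if (!key.startsWith(prefix)) continue;
      plan.push({
        service: key.slice(prefix.length),
        multiplier,
        latencyMs: Math.round(ms * multiplier),
      });
    }
  }
  return plan;
}

const plan = latencyRamp(duration, "frontend", [1.5, 2, 5, 10]);
for (const step of plan) {
  console.log(`${step.multiplier}x ${step.service}: ${step.latencyMs}ms`);
}
```

Running each step as its own experiment, and stopping at the first one that breaks the hypothesis, shows where the system’s tolerance actually ends instead of only that 10x is too much.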

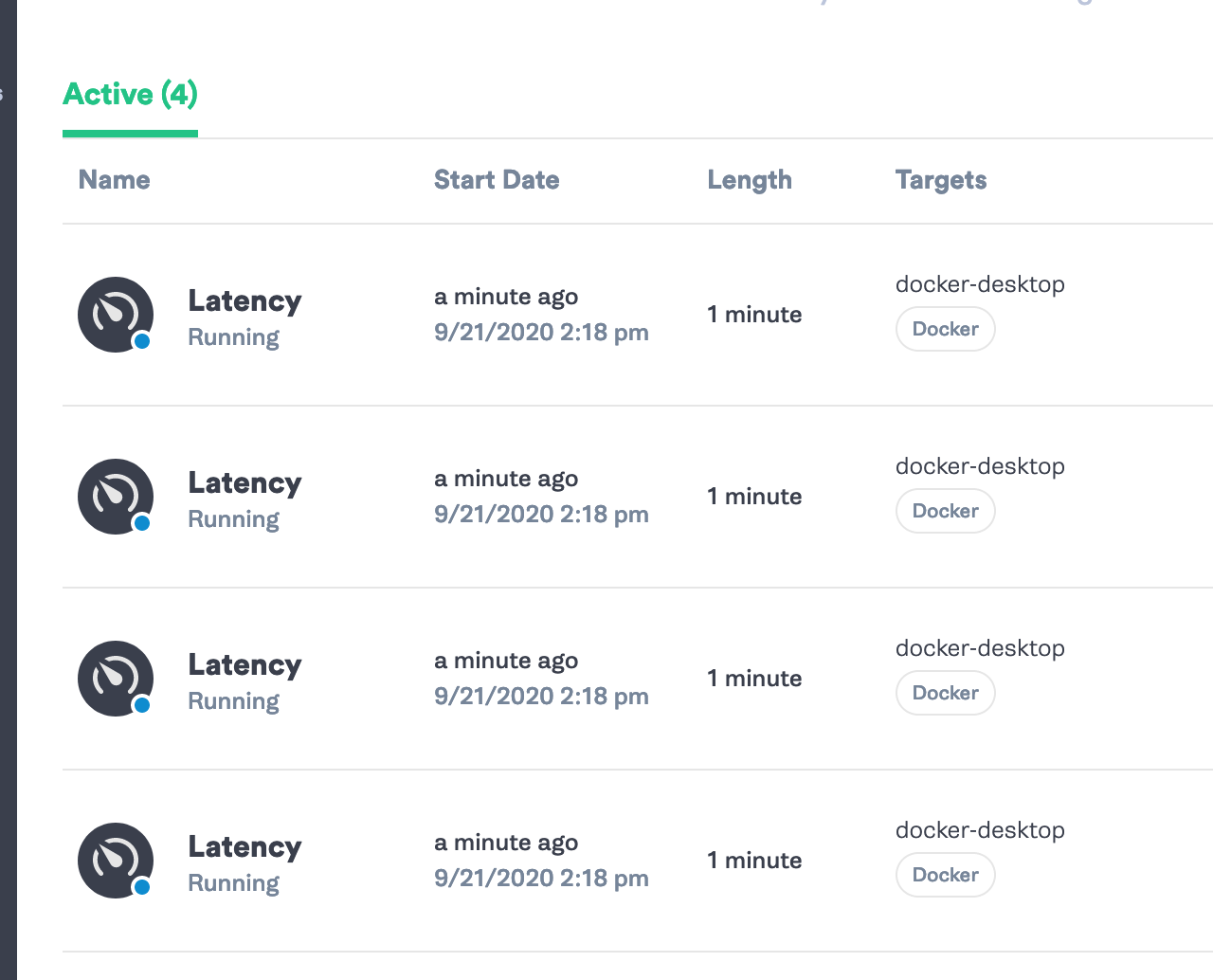

As the script runs, its output shows the attacks being created through the Gremlin API:

Generated chaos experiments in Gremlin

Lightstep traces define new attacks that inject latency between the different services along the trace. The attacks start running together, showing the impact of cascading latency in a system.
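In the script’s loop, each `await` waits for one attack to be created before requesting the next. If you would rather submit all the attacks at once, `Promise.all` is one option. A sketch, assuming a helper with the same shape as the script’s `createGremlinLatencyAttack(service, { latencyMs })` that resolves to an attack ID; we do not reproduce the Gremlin API call here, and `createAttacksConcurrently` and `fakeCreateAttack` are hypothetical:

```javascript
// Create one latency attack per downstream service concurrently.
// `createAttack` stands in for a helper like createGremlinLatencyAttack and
// must return a Promise that resolves to an attack ID.
async function createAttacksConcurrently(caller, relationships, duration, multiplier, createAttack) {
  const downstreams = relationships[caller] || [];
  // Promise.all preserves input order and rejects if any creation fails.
  return Promise.all(
    downstreams.map(async (service) => {
      const latencyMs = duration[`${caller}->${service}`] * multiplier;
      const attackId = await createAttack(service, { latencyMs });
      return { service, latencyMs, attackId };
    })
  );
}

// Hypothetical stand-in for the real Gremlin call, for local testing only.
async function fakeCreateAttack(service, { latencyMs }) {
  return `attack-${service}-${latencyMs}`;
}

const relationships = { frontend: ["currencyservice", "cartservice"] };
const duration = { "frontend->currencyservice": 280, "frontend->cartservice": 753 };

createAttacksConcurrently("frontend", relationships, duration, 10, fakeCreateAttack)
  .then((attacks) => console.log(attacks));
```

Because `Promise.all` rejects as soon as one creation fails, a real version would also need to decide whether to halt the attacks that were already created.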

Observe the impact of the experiment

When we view the stream for the frontend service in our Lightstep dashboard, we can see the latency spikes. A few seconds of latency added here and there adds up fast, and some requests now take over 20 seconds.
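A back-of-the-envelope check shows why 20-second requests are plausible. Under a simplifying assumption of our own, that the frontend’s calls to its direct dependencies are either all sequential (injected latencies add) or all parallel (the slowest one dominates), the 10x attacks bound the added latency per frontend request. `addedLatencyBounds` is a hypothetical helper, and nested calls such as recommendationservice->productcatalogservice are ignored:

```javascript
// Typical "caller->callee" durations (ms), from the table earlier in this post.
const duration = {
  "frontend->currencyservice": 280,
  "frontend->cartservice": 753,
  "frontend->recommendationservice": 2820,
  "recommendationservice->productcatalogservice": 4,
  "frontend->productcatalogservice": 4,
};

// Rough bounds on latency added to one `caller` request when each direct
// dependency gets `multiplier` x its typical duration injected.
// Assumption: calls are all sequential (sum) or all parallel (max).
function addedLatencyBounds(durationTable, caller, multiplier) {
  const injected = Object.entries(durationTable)
    .filter(([key]) => key.startsWith(`${caller}->`))
    .map(([, ms]) => ms * multiplier);
  return {
    sequentialMs: injected.reduce((sum, ms) => sum + ms, 0),
    parallelMs: Math.max(0, ...injected),
  };
}

console.log(addedLatencyBounds(duration, "frontend", 10));
// { sequentialMs: 38570, parallelMs: 28200 }
```

Both bounds exceed 20 seconds, which is consistent with what the dashboard shows; the recommendationservice call alone contributes 28.2 seconds at 10x.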

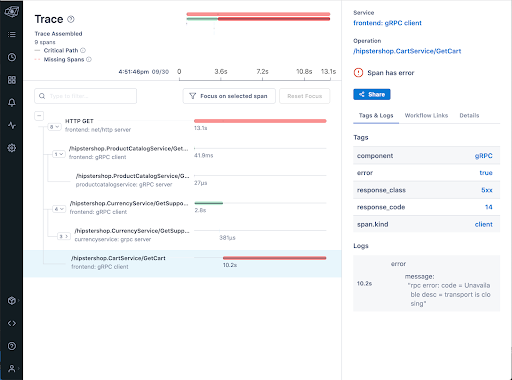

Hovering over the time-series chart also shows the traces collected during that time window, and it appears our chaos experiment caused some new errors, seen here in red:

Clicking into a trace on the dashboard shows how each service we targeted with a latency attack contributes to the massive latency spike. In this case, the added latency also produces many errors. Drilling down into a trace, we see the error log associated with the (now failing) requests to the cart service. Increasing the latency 10x caused a new kind of timeout error we hadn’t seen before:

This is exactly the sort of failure that we want to uncover with Chaos Engineering, and we were able to use Lightstep's tracing data to automatically generate the experiment for us.
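When the traces from an experiment window are exported, the same triage can be done programmatically: group error spans by service to see which dependencies started failing. A minimal sketch, assuming a simplified, hypothetical span shape (`traceId`, `service`, `error`, `message`) rather than the actual Lightstep format; the messages below are placeholders, not the real cart service error:

```javascript
// Hypothetical, simplified span shape (not the actual Lightstep format):
// { traceId, service, error, message }

// Count error spans per service and keep the first message seen for each.
function errorsByService(spans) {
  const summary = {};
  for (const span of spans) {
    if (!span.error) continue;
    if (!summary[span.service]) {
      summary[span.service] = { count: 0, sampleMessage: span.message };
    }
    summary[span.service].count += 1;
  }
  return summary;
}

// Placeholder spans, for illustration only.
const spans = [
  { traceId: "t1", service: "cartservice", error: true, message: "example error message 1" },
  { traceId: "t2", service: "cartservice", error: true, message: "example error message 2" },
  { traceId: "t2", service: "currencyservice", error: false, message: "" },
];
const summary = errorsByService(spans);
console.log(summary);
```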

Combining Lightstep trace data with Gremlin’s Chaos Engineering platform gives engineers a powerful way to understand how failure modes percolate across their environment, as in cascading latency scenarios. Planning chaos experiments informed by trace data helps us better understand the complexity of our applications, so we can build confidence in how our systems perform during failures.

How can you try this out?

- Watch the demo

- Check out the Lightstep / Gremlin Learning Path for code details

- Check out the example script on GitHub

- Request a free Gremlin trial

- Try Lightstep for free for 14 days
