Gremlin Gameday: Breaking DynamoDB

By now, you might have read previous blog posts on running a Gameday. Better yet, your team has run a Gameday and learned something new about their services’ behavior during failure scenarios. But you might wonder, “What happens when Gremlin runs a Gameday on itself?”

To show you how we do this, we’ve put together a breakdown of our most recent Gameday. Of course, no Gameday would be complete without shattering our own assumptions about our system, so we’ll share some bugs we uncovered in our API service and how we fixed them.

Before we get into the nitty-gritty, a word on philosophy: Gremlin believes that the spirit of a Gameday is to challenge assumptions about a given service or distributed system through concrete discussion and thoughtful experimentation. Gamedays are not just for large organizations or for dedicated SRE teams. Every team holds assumptions about a system’s behavior, and Gamedays are a useful tool for testing both the assumptions you’re aware of and the ones you took for granted.

Assumptions aren’t all bad. Most of the time, they help us reason through our daily tasks without getting bogged down by a system’s complexity. But sometimes making the wrong assumption can blind us to problems that rear their heads during a 3am outage.

We want everyone to join in

We open our Gamedays up to the whole company (this is of course easier at a startup than a larger company). Inclusivity increases the diversity of perspectives, and expands the number of unique observations about our systems. Don’t be afraid to let wider teams participate. Everyone has a role to play when it comes to observing system behavior and identifying trends, and some of Gremlin’s team members have participated in successful Gameday events with hundreds of participants.

Gremlin’s Gameday — Let’s Break DynamoDB

Gremlin uses DynamoDB for its persistence layer for all stateful interactions with the API, including client operations that deal with launching attacks, updating templates, and registering new clients. DynamoDB is a solid choice for our elasticity requirements (we love autoscaling!), but this crucial dependency means any failures translate to wide-reaching impacts on Gremlin — and although Amazon built DynamoDB on top of years of experience with distributed hash tables, no system is too scalable to fail. We want to make sure we fail gracefully, preventing inconsistencies in state and ensuring we are transparent to our users.

Let’s break stuff

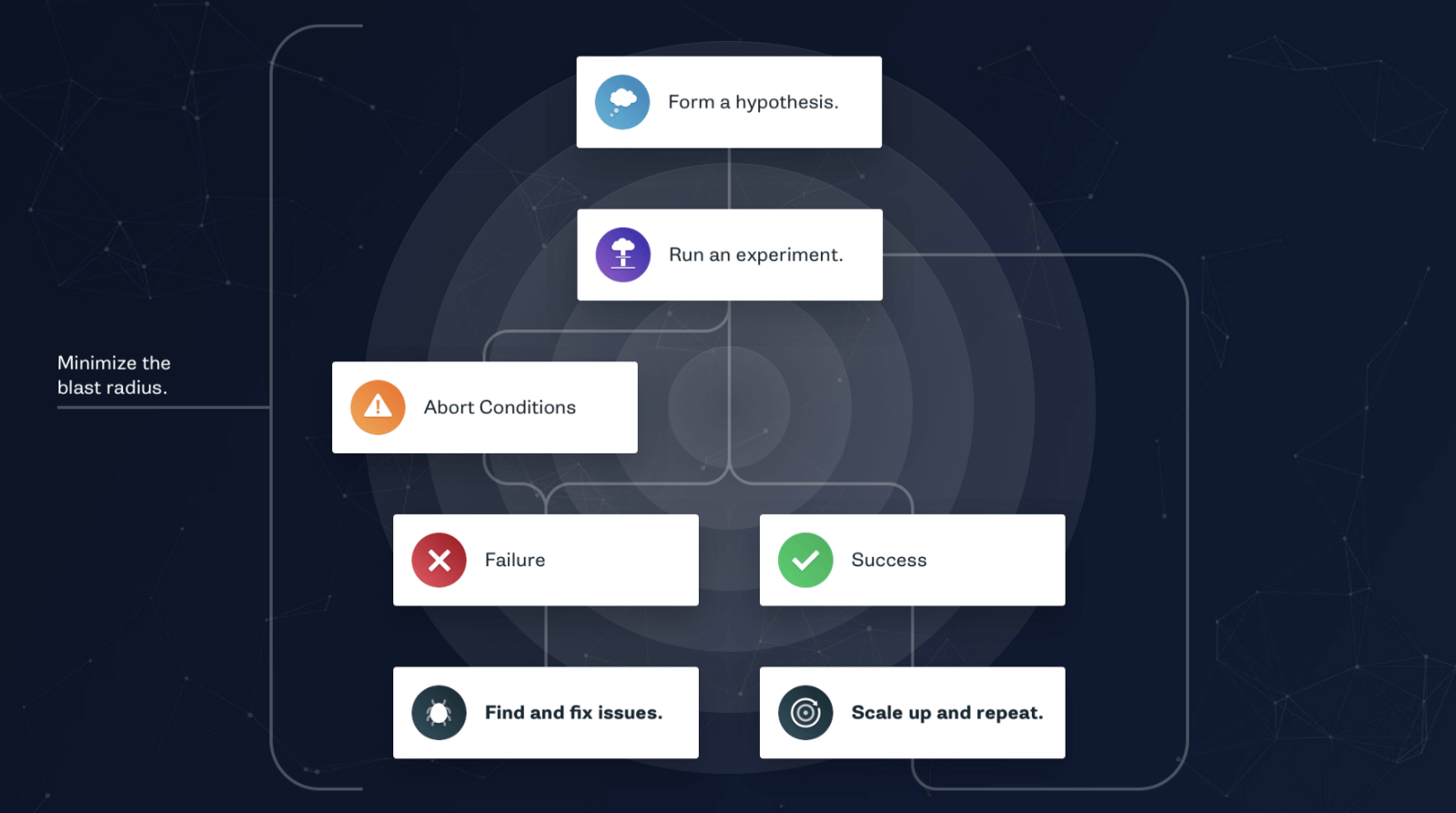

The cadence of our experiments follows the “Chaos Experiment Lifecycle,” which is all about turning our assumptions about a system into hypotheses and then carrying out experiments that test them.

Begin with a Hypothesis

At Gremlin, we formulate these hypotheses through a brainstorming session around “What could go wrong?” This session takes a lot of time. If you have tooling to help inject failures (like we do at Gremlin), the discussions, whiteboarding, and note-taking can be the most time-consuming part of a Gameday. That’s OK, though, because they are also the most valuable part, partly because so many questions get put to the whole team:

- So what do we think will happen when DynamoDB is unreachable? What does unreachable mean?

- What sort of Exceptions do we expect our SDK to throw? Are we catching those explicitly?

- What sort of API response is going to come back from these failures? What will the UI look like when displaying these responses?

The most important output of the discussion isn’t the talking; it’s a written record of outcomes, so fill out the chart as you go. For example, here’s the output of our discussion around “What can go wrong when DynamoDB is unreachable?”

- Failure Scenario: DynamoDB is unreachable

- Method: Run a Blackhole Gremlin on the EC2 instances hosting our API (drop all outgoing packets)

- Scope: Blackhole should impact only egress traffic to dynamodb.us-west-2.amazonaws.com on port 443

- Measurement: Datadog should report drop in requests per second, spike in HTTP 5XX errors

- Abort Conditions: We should not exceed a 5% API failure rate. In the event that we do, whoever is on call should get paged immediately. (The expectation that our on-call will get paged is another assumption that we’re testing.)

- Abort Procedure: Stop the effect immediately — halt the Blackhole Gremlin

- Expected Measurements (hypothesis): Increase in HTTP 5XX errors due to DynamoDB client timeout errors

- Expected Observations (hypothesis): details around timeouts should be returned from the API responses
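An abort condition is easiest to act on when it is written as a check rather than a sentence. Here is a minimal sketch of the 5% threshold from the plan above. The class and method names are hypothetical, and reading the threshold as "failed requests divided by total requests" is our assumption for illustration, not a description of how Gremlin or Datadog computes it.

```java
/** Hypothetical helper: decides whether a running experiment should be halted. */
final class AbortCondition {
    // Threshold from the experiment plan: abort once more than 5% of API requests fail.
    static final double MAX_FAILURE_RATE = 0.05;

    /** Fraction of requests that failed; 0.0 when no traffic was observed. */
    static double failureRate(long failedRequests, long totalRequests) {
        if (totalRequests <= 0) {
            return 0.0;
        }
        return (double) failedRequests / totalRequests;
    }

    /** True when the observed failure rate exceeds the abort threshold. */
    static boolean shouldAbort(long failedRequests, long totalRequests) {
        return failureRate(failedRequests, totalRequests) > MAX_FAILURE_RATE;
    }
}
```

With these definitions, 3 failures out of 100 requests stays within budget, while 8 out of 100 should trigger the abort procedure.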

Experiment

As stated above, we want everyone participating in the Gameday to have a role. So in addition to our hypothesis discussion, we also decide what real-time tests we want to carry out during the failure scenarios and who will be responsible for each test.

- UI Engineer: login/logout behavior

- Client Engineer: client registration behavior from the CLI

- API Engineer: API response times and failure metrics in Datadog

- Security Engineer: authentication workflow

When we apply the DynamoDB failure scenario, we want to do so in well-defined durations, starting with something short-lived and increasing the duration when we need to observe an effect for a longer period of time. This allows us to iterate quickly, validating correct behaviors and dialing up our blast radius to yield more results until we discover something novel about our system’s behavior, such as a coupling that we can resolve!

We started our Blackhole experiments against a single instance of our API server for 60 seconds, which, as we expected, yielded intermittent failures across all of our predefined tests.

Wait, that’s not supposed to happen

The interesting behavior only started happening once we applied impact on an entire availability zone for 10 minutes. Only after we had a large enough blast radius to fail hundreds of requests did our API autoscaling group start to behave unexpectedly: impacted EC2 instances started terminating due to ELB health check failures.

We designed the API health check specifically not to touch DynamoDB during each invocation. So it came as a surprise to learn that our ELB was encountering health check failures when DynamoDB couldn’t be reached. Potential reasons for this unexpected behavior ranged from nasty bugs to accidental features:

- Did we mistakenly blackhole traffic to/from our load balancer?

- Did we accidentally introduce a dependency on DynamoDB in our health check?

- Is this a feature or a bug? Don’t we want our load balancer to consider these instances unhealthy anyway?

This health check behavior also came with a critical side effect. In most cases, the ELB health check failures would occur faster than the DynamoDB timeout failures, and so our instances were terminating faster than DynamoDB error events could be ingested into our alerting and monitoring systems. This means we weren’t getting alerted when our database was unreachable!

Resolve

At this point, we’re ready to call end-of-impact and shift focus to getting to the bottom of this unexpected behavior. If your Gameday has other experiments to run, this would be a good time to write down notes and move on to the next experiment. Issues like the one we found should be taken offline and remediated so that we can run the same experiment again come next Gameday.

As it turns out, our DynamoDB timeouts were indirectly causing ELB HTTP health check failures through thread exhaustion. Specifically, all API calls that depend on DynamoDB were spending so much time waiting for a connection that they occupied all available threads used by our HTTP servers. When this happens, any new requests (including health checks) get queued _indefinitely_ until threads become available, which in our case is when calls to DynamoDB eventually time out and free up.
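The effect is easy to reproduce in miniature. The sketch below is not our API server: it is a hypothetical stand-in that uses a fixed-size thread pool as the "HTTP workers" and a latch as the "unreachable database". All names are illustrative assumptions. It shows that a health check which never touches the database still fails once every worker is stuck waiting on one.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class ThreadExhaustionDemo {
    /**
     * Occupies {@code blockedRequests} workers with calls that wait on an
     * unreachable dependency, then reports whether a trivial health check
     * completes within {@code deadlineMillis}.
     */
    static boolean healthCheckPasses(int poolSize, int blockedRequests, long deadlineMillis) {
        ExecutorService httpWorkers = Executors.newFixedThreadPool(poolSize);
        CountDownLatch dependencyGaveUp = new CountDownLatch(1);
        try {
            // Each of these requests holds a worker thread until the dependency gives up.
            for (int i = 0; i < blockedRequests; i++) {
                httpWorkers.submit(() -> {
                    dependencyGaveUp.await();
                    return null;
                });
            }
            // The health check does no database work, but it still needs a free thread.
            Future<String> healthCheck = httpWorkers.submit(() -> "OK");
            try {
                healthCheck.get(deadlineMillis, TimeUnit.MILLISECONDS);
                return true;
            } catch (TimeoutException e) {
                return false; // still queued behind the blocked requests
            } catch (InterruptedException | ExecutionException e) {
                throw new IllegalStateException(e);
            }
        } finally {
            dependencyGaveUp.countDown(); // release any blocked workers
            httpWorkers.shutdownNow();
        }
    }
}
```

With a pool of four workers, `healthCheckPasses(4, 4, 200)` times out, while `healthCheckPasses(4, 3, 2000)` succeeds because one thread is still free. Shortening how long each blocked request holds its thread is what restores the health check.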

Failing these database requests sooner should alleviate pressure on our servers, returning errors quickly so they show up as alerts as fast as possible instead of indirectly breaking health checks. But not all of Gremlin’s database operations fit this fail-fast requirement. We have larger database calls that run as batch jobs in background threads. These calls have a much higher cost when they fail and are completely independent of incoming HTTP requests, and so we want to give them as much time as reasonably possible to do their job. This means we need at least two profiles for our database timeouts.

The Java SDK for DynamoDB uses a default execution timeout of 30 seconds, which is an eternity when considering using DynamoDB as the persistence layer for an API server (this timeout is actually a bit longer than 30 seconds due to the SDK’s built-in backoff and retry algorithms). On the other hand, 30 seconds is reasonable for large queries or batch updates. We’ll gladly spend that time (especially in a background thread) if it means all of our data is processed. In both cases, however, we set a very short _connection timeout_ of 500ms. Whether we’re doing real-time or batch processing, we want to be alerted when connections start taking longer than a half-second to establish.
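To make the two-profile idea concrete, here is a rough sketch in plain Java. It is deliberately not the AWS SDK's configuration API: `TimeoutProfile`, `REALTIME`, `BATCH`, and `DatabaseTimeoutException` are hypothetical names. The 500ms connection timeout and the 30-second batch budget come from the text above, while the 2-second real-time budget is purely an illustrative assumption. The sketch only bounds how long a caller waits on a database call; it does not model connection establishment.

```java
import java.time.Duration;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical pair of timeouts; not a type from the AWS SDK. */
final class TimeoutProfile {
    /** Unchecked error raised when a call exceeds its execution budget. */
    static final class DatabaseTimeoutException extends RuntimeException {
        DatabaseTimeoutException(String message) {
            super(message);
        }
    }

    // Shared by both profiles: connections should be established within 500ms.
    static final Duration CONNECT = Duration.ofMillis(500);
    // ASSUMPTION: 2 seconds is an illustrative fail-fast budget for request threads.
    static final TimeoutProfile REALTIME = new TimeoutProfile(CONNECT, Duration.ofSeconds(2));
    // Background batch jobs keep the generous 30-second budget.
    static final TimeoutProfile BATCH = new TimeoutProfile(CONNECT, Duration.ofSeconds(30));

    final Duration connectionTimeout;
    final Duration executionTimeout;

    TimeoutProfile(Duration connectionTimeout, Duration executionTimeout) {
        this.connectionTimeout = connectionTimeout;
        this.executionTimeout = executionTimeout;
    }

    /** Runs the call on a worker thread and gives up once the execution budget is spent. */
    <T> T call(Callable<T> databaseCall) {
        // One executor per call keeps the sketch short; a real service would share a pool.
        ExecutorService worker = Executors.newSingleThreadExecutor();
        try {
            return worker.submit(databaseCall).get(executionTimeout.toMillis(), TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            throw new DatabaseTimeoutException("no response within " + executionTimeout);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            worker.shutdownNow(); // interrupts the call if it is still running
        }
    }
}
```

A request handler would wrap its database access in `TimeoutProfile.REALTIME.call(...)` and turn a `DatabaseTimeoutException` into a fast error response, while a background job would use `TimeoutProfile.BATCH.call(...)` and keep its longer budget.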

Looking ahead to the next Gameday

This experiment will be one of the first on the list to rerun during Gremlin’s next Gameday so that we avoid drifting back into this strange health check behavior. We’ll use the hypothesis we’ve written down along with the rest of our notes, and start experimenting again from the top. Gremlin’s Schedules feature as well as its REST API also allow us to make sure we run this experiment regularly, even when we’re not running Gamedays.

This is the first in a series of posts about running Gamedays at Gremlin. We have so much ground to cover on this topic (like “What happens to Gremlin users when we run Gamedays?”), not to mention much more to discover about our own systems as we break them. Send us your ideas for Gameday content and check back here soon for new posts!

If you have any questions about Gamedays or would like Gremlin to work with your team on a Gameday of your own, join the Chaos Engineering Slack and we’ll help you get started!

Happy Gameday!
