API gateways are a critical component of distributed systems and cloud-native deployments. They perform many important functions, including request routing, caching, user authentication, rate limiting, and metrics collection. Because so much traffic depends on them, a failure in your API gateway can put your entire deployment at risk. How confident are you that your gateway will be resilient to common production conditions such as backend outages, poor network performance, and sudden traffic surges?

In this post, we’ll present several ways to use Chaos Engineering to test the resilience of deployments that rely on API gateways. We’ll look at common use cases, possible failure modes, and how you can build resilience to them.

What are API gateways?

An API gateway is a service that routes requests from clients to one or more backend systems based on a set of configuration rules. It’s a type of reverse proxy that can perform advanced functions such as authenticating users, enforcing rate limits, retrying requests, collecting metrics, and terminating TLS connections. This provides several benefits, including:

- Decoupling clients from services, eliminating the need to publicly expose services, applications, and other workloads directly to the Internet.

- Centralizing common functions and reducing duplicated functionality. For example, users can be authenticated once by the API gateway, instead of having to authenticate with each service separately.

- Reducing latency by caching responses or by load balancing requests. Several gateway solutions can also aggregate multiple requests into a single request, reducing network traffic and load on backend systems.

Some popular examples of gateways include Kong, Amazon API Gateway, Google API Gateway, Azure API Management, Anypoint Platform, and webMethods API Gateway.

How can API gateways become unreliable?

When integrating any new technology into our environment, we need to consider how it affects reliability. With API gateways, we need to ask:

- How reliable is the API gateway itself?

- How does the API gateway respond to failures in backend services?

For managed gateways like Amazon API Gateway, Google API Gateway, and Azure API Management, the reliability of the gateway depends on the service provider and their service level commitments.

However, we do have control over our backend services, their connection to the API gateway, and how the API gateway is configured. We can design our environments to detect and work around common failure modes by using techniques like load balancing, caching, and health checks. But without direct access to the API gateway, how can we be sure that our systems are properly configured to handle these scenarios?

With Chaos Engineering, we can proactively test conditions like unresponsive backend services and high network latency in a safe, controlled way. Chaos Engineering is the practice of deliberately experimenting on a system by injecting controlled harm, observing how the system responds, and using these observations to improve its resilience. By doing so, we can identify possible failure modes, fix them, and validate our changes before they can affect customers.

We’ll show some examples of how to test the resilience of your API gateways using Gremlin, the leading enterprise Chaos Engineering SaaS solution. We’ll use Gremlin to simulate failure conditions on backend services and explain how this helps validate the resiliency and recoverability of your API gateway.

Scenario 1: Validating load balancing and automatic failover

An essential task of an API gateway is to route each request to the right backend service based on the request’s parameters. But what if the service is unavailable? Will the request fail, will it time out, or do you have a load balancing mechanism in place to reroute requests to a healthy instance?

Ideally, a gateway capable of load balancing would monitor the availability of backend services using health checks. For example, Kong can use two different types of health checks (active or passive) to determine which instances should receive traffic. To verify that this is working correctly, we can use Chaos Engineering to simulate an outage in one of our instances, send requests through the gateway, then monitor traffic to ensure the requests are being routed to a healthy instance.
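To make the idea concrete, here is a minimal simulation of passive health checking behind a round-robin load balancer. It is a sketch under stated assumptions, not Kong's implementation: the `Backend` and `Gateway` classes and the `max_failures` threshold are hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Backend:
    name: str
    up: bool = True        # whether the simulated instance answers requests
    failures: int = 0      # consecutive failed requests seen by the gateway
    healthy: bool = True   # the gateway's current view of the instance


@dataclass
class Gateway:
    backends: list
    max_failures: int = 2  # hypothetical threshold, not a Kong default

    def __post_init__(self):
        self._ring = cycle(self.backends)  # simple round-robin order

    def handle(self):
        """Route one request. Return the serving backend's name, or None on failure."""
        for _ in range(len(self.backends)):
            backend = next(self._ring)
            if not backend.healthy:
                continue                   # skip instances already marked unhealthy
            if backend.up:
                backend.failures = 0
                return backend.name
            # Passive check: learn about the outage from a failed live request.
            backend.failures += 1
            if backend.failures >= self.max_failures:
                backend.healthy = False
            return None
        return None                        # no healthy instance left


workers = [Backend("worker-1"), Backend("worker-2")]
gateway = Gateway(workers)
workers[1].up = False                      # simulate the outage on one worker
results = [gateway.handle() for _ in range(8)]
print(results)
```

In this run, two requests fail before `worker-2` is marked unhealthy, and every later request is served by `worker-1`. Lowering `max_failures` shrinks the number of failed requests, which is the kind of trade-off a real health check configuration controls.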

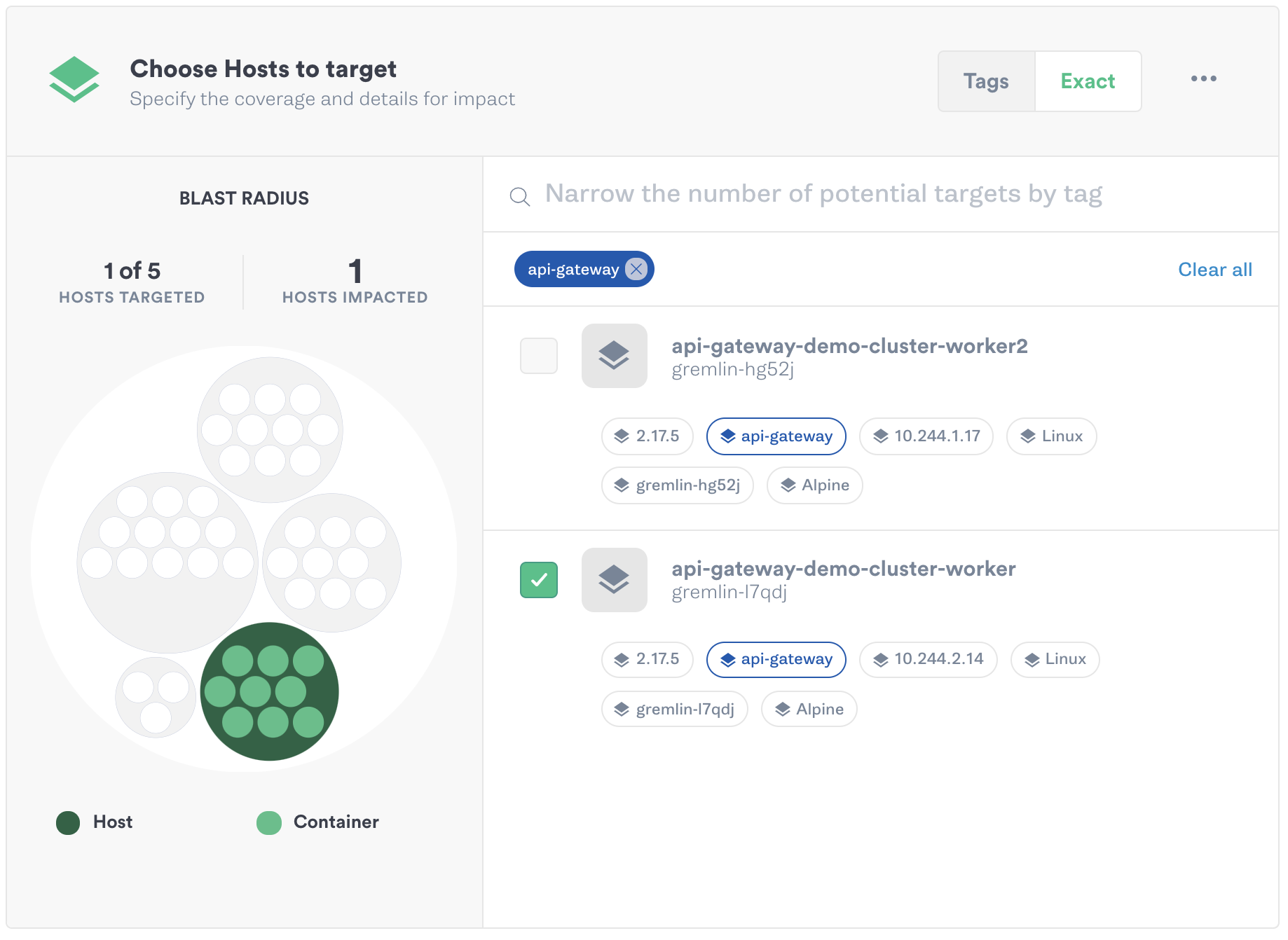

For example, say we have two instances (shown below as “workers”) running behind our gateway. In Gremlin, we can use a blackhole attack to drop all network traffic between our API gateway and our workers. In the following screenshot, we’ve selected one host to run this attack on. This is our blast radius, which is the number of resources in our environment impacted by the attack:

Once we start the attack, we can use a load testing tool like Apache Bench or JMeter to simulate user traffic to the gateway. If any requests fail because the gateway can’t contact the backend, then we know we need to reconfigure our health checks. In Kong, we can reduce the interval between checks, reduce the number of failed requests needed to mark an instance as unhealthy, or lower the request/response timeout. We can then update our API gateway configuration, restart the attack, and re-run our test to see if the gateway fails over faster.
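If a full load testing tool isn't at hand, the same check can be approximated with a short script. The following is a rough sketch, not a replacement for Apache Bench or JMeter: it sends requests one at a time, and the function names and the `gateway.example` URL are placeholders. The `send` parameter exists only so the counting logic can be tried without a live gateway.

```python
import urllib.error
import urllib.request
from collections import Counter


def http_get(url, timeout=2.0):
    """Return the HTTP status code, or "error" if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code   # the gateway answered with a 4xx or 5xx status
    except OSError:
        return "error"    # connection refused, reset, or timed out


def run_load_test(url, total=100, send=http_get):
    """Send `total` sequential requests; return (outcome counts, failure rate)."""
    outcomes = Counter(send(url) for _ in range(total))
    failed = sum(count for status, count in outcomes.items()
                 if status == "error" or status >= 500)
    return outcomes, failed / total


# Dry run with canned responses instead of real network calls.
canned = iter([200, 502, 200, "error", 200, 200, 200, 200, 200, 200])
outcomes, failure_rate = run_load_test(
    "http://gateway.example/api", total=10, send=lambda url: next(canned)
)
print(outcomes, failure_rate)
```

Running it once before tuning the health checks and once after gives two failure rates to compare while the attack is active.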

Once we’re confident that we’ve got a good load balancing strategy in place, we can do additional testing by running different attacks, such as:

- Using a latency attack to add delay to network calls, simulating poor network conditions.

- Using a CPU attack to oversaturate a system and replicate periods of high traffic.

- Using a packet loss attack to drop or corrupt a percentage of network traffic, simulating a faulty network switch or invalid routing tables.

Scenario 2: Validating caching

Using an API gateway to cache responses is an effective way of reducing load on your backend systems and improving response times, especially for frequent and consistent requests. Caching can also give us a layer of protection in case a backend service becomes unavailable.

A common way to validate a cache’s effectiveness is by sending requests and recording cache hit (response retrieved from cache) and cache miss (response retrieved from the backend service) metrics. For example, Amazon API Gateway reports these to Amazon CloudWatch as CacheHitCount and CacheMissCount. We can also perform a real-world test by using Chaos Engineering to recreate a backend outage and test the effectiveness of our cache.
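The two counters are usually combined into a hit ratio. As a small worked example, assuming the counts have already been read from your metrics system (the numbers below are made up):

```python
def cache_hit_ratio(hit_count, miss_count):
    """Fraction of requests served from cache, or None if there was no traffic."""
    total = hit_count + miss_count
    if total == 0:
        return None
    return hit_count / total


# Illustrative values only, e.g. sums of CacheHitCount and CacheMissCount
# over the same time window.
ratio = cache_hit_ratio(900, 100)
print(ratio)  # 0.9
```

A ratio that stays low for requests you expected to be cacheable suggests the cache settings need another look before relying on the cache during an outage.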

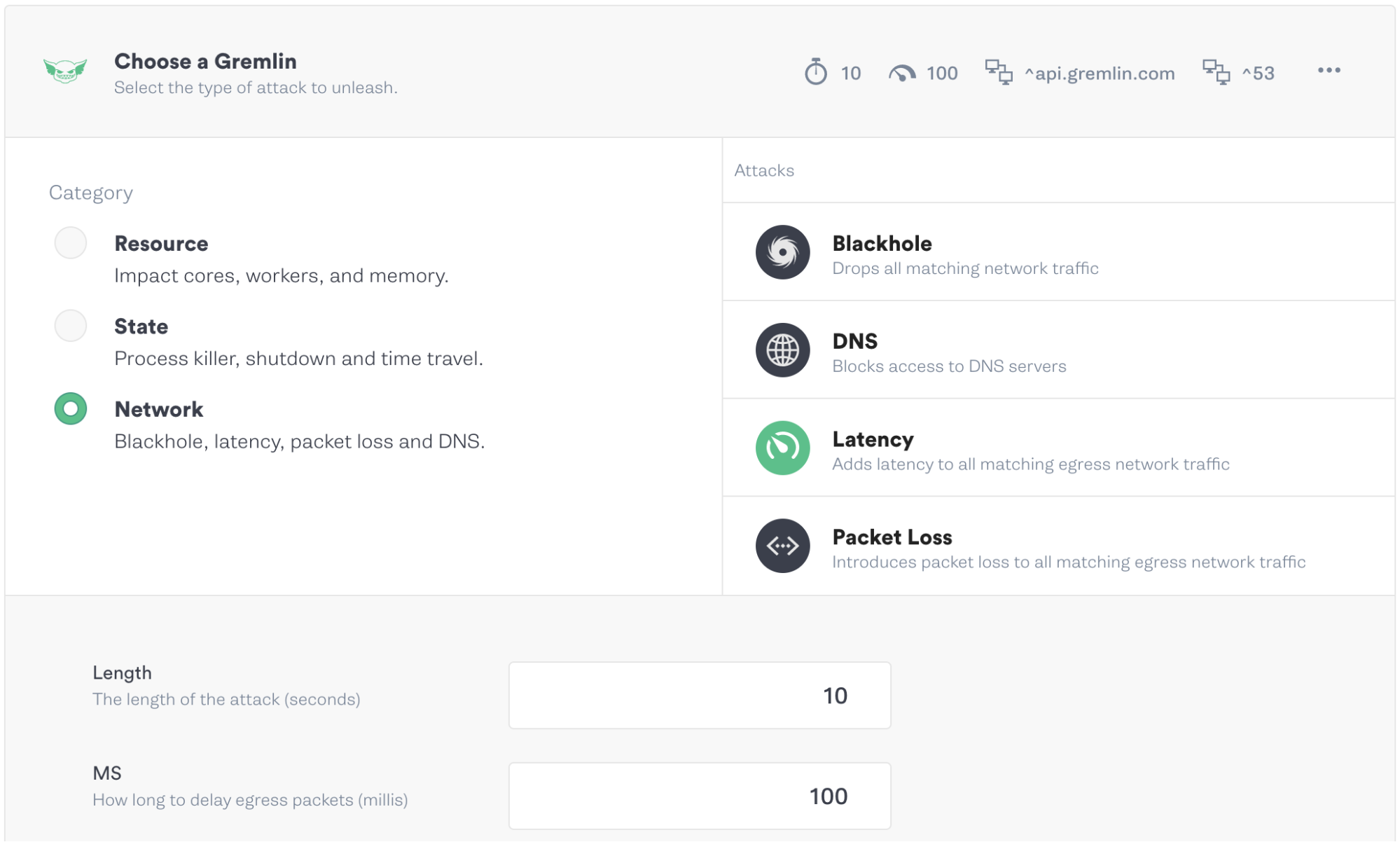

As with a load balancing test, we could use a blackhole attack to create a full outage. Instead, we’ll use a latency attack to simulate a degraded connection. A latency attack adds a set amount of delay to all outgoing packets. For instance, if we run a latency attack with 100ms of latency, any requests sent from the gateway to the target instance will take 100ms longer to return.

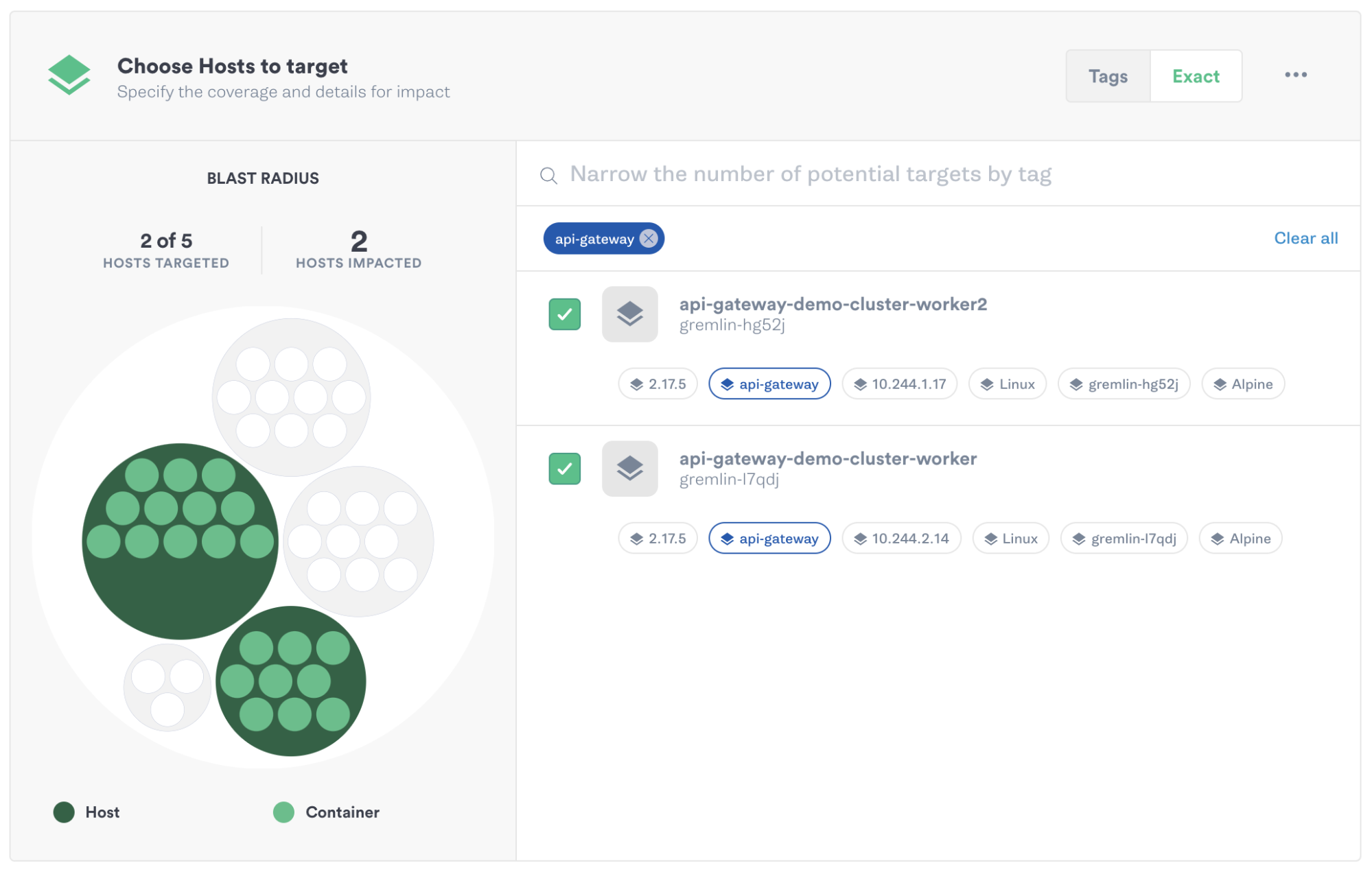

First, we’ll select both of our backend instances:

Next, we’ll select a latency attack and increase the magnitude (the severity of the attack) to 500ms:

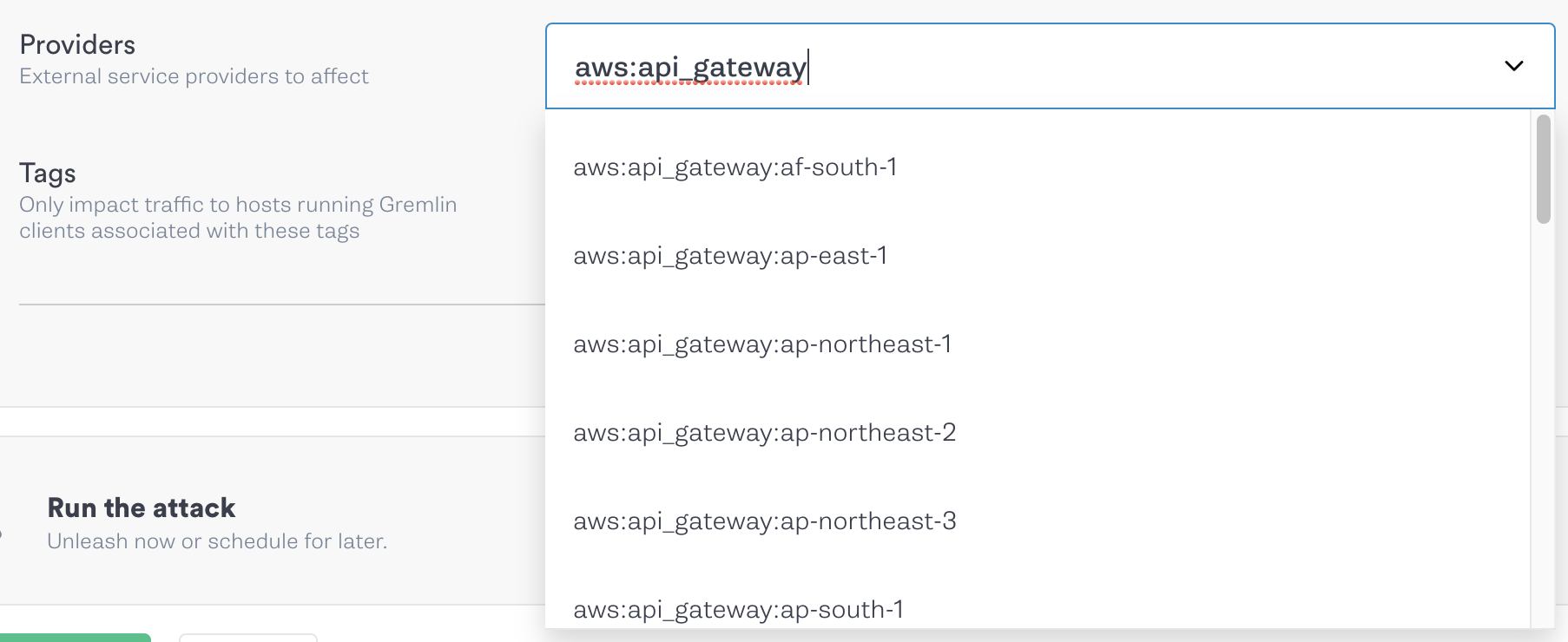

We can also limit the scope of the attack to API Gateway traffic by typing aws:api_gateway into the Providers box, then selecting the region that the API Gateway control plane is running in. This prevents Gremlin from adding latency to other types of network traffic.

Now we can run the attack and send requests to our API gateway. If we configured it correctly, the first response should take at least 500ms longer than normal, but each subsequent response should be much faster as the gateway pulls from its cache, bypassing the backend service entirely. If not, we know we need to tweak our cache settings.
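One way to turn that expectation into a pass/fail check is to record the response times in order and compare them to the injected delay. This is a simplified sketch: the function name is hypothetical, and it assumes the first request is a cache miss and all later requests target the same cacheable resource.

```python
def cache_absorbs_latency(latencies_ms, injected_ms=500):
    """Return True if only the first response shows the injected delay.

    latencies_ms: response times in request order, in milliseconds.
    injected_ms:  the delay added by the latency attack.
    """
    if len(latencies_ms) < 2:
        raise ValueError("need at least two responses to compare")
    first, rest = latencies_ms[0], latencies_ms[1:]
    return first >= injected_ms and all(t < injected_ms for t in rest)


# Made-up measurements for illustration.
cached = cache_absorbs_latency([540, 12, 9, 11])    # later responses come from cache
uncached = cache_absorbs_latency([540, 530, 525])   # every response hits the backend
print(cached, uncached)  # True False
```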

Scenario 3: Testing the API gateway itself

For API gateways that operate within our infrastructure, our reliability efforts now include making sure that the gateway itself is resilient. This means finding ways that the gateway can fail, and taking proactive steps to prevent these failures from causing outages.

As an example, let’s look at Kong Gateway, which we'll deploy to a Kubernetes cluster. Once it’s deployed, we’ll see two new pods appear in our cluster: a proxy, and an ingress controller. What happens if either of these pods fails? Will Kubernetes detect the failure and reschedule the pod, or will we be left without a working gateway?

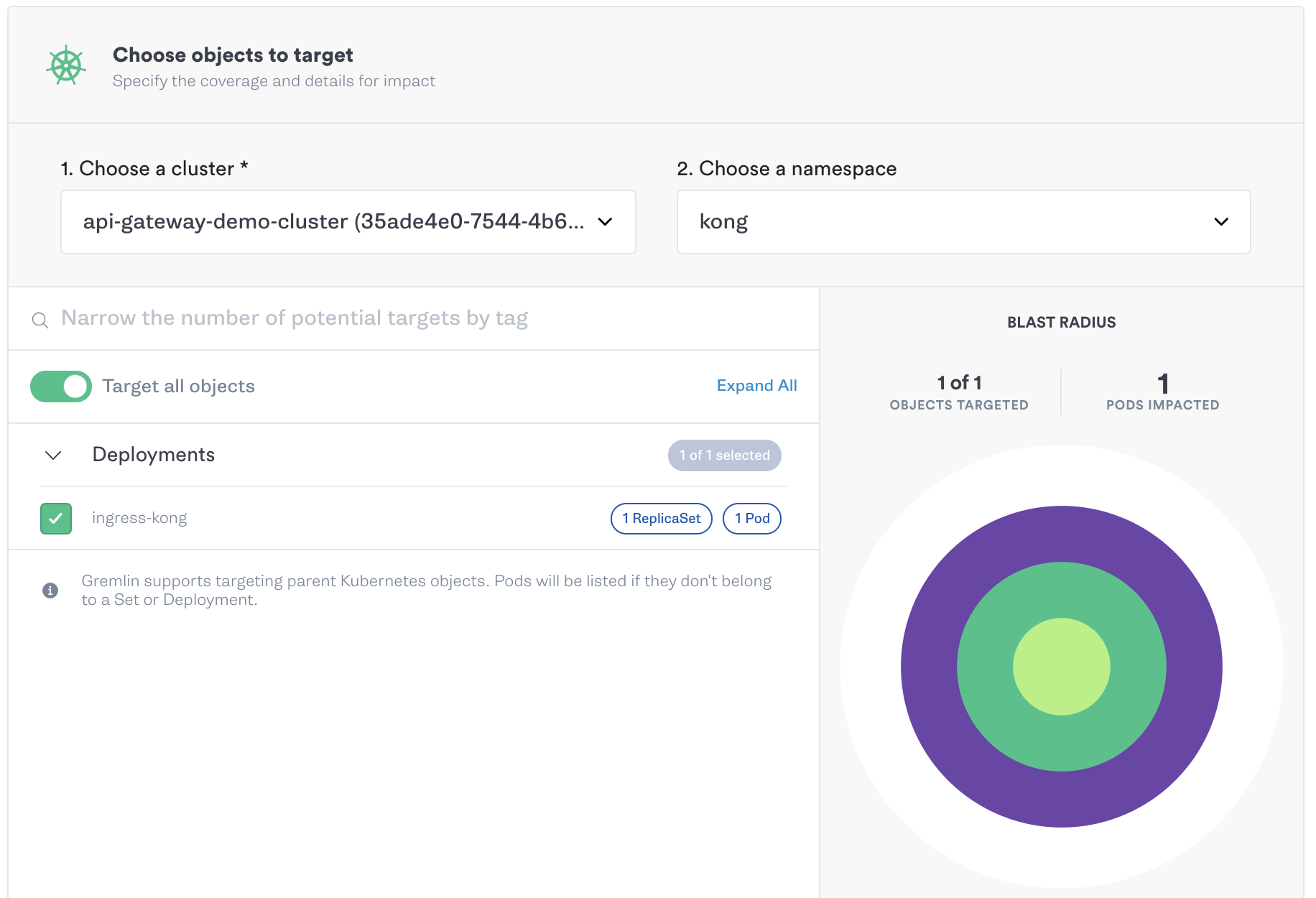

To test this, we’ll use a shutdown attack to terminate our Kong proxy pod, then monitor our cluster to see if Kubernetes restarts the pod. In Gremlin, we can target a specific pod by filtering to the kong namespace and selecting the ingress-kong deployment:

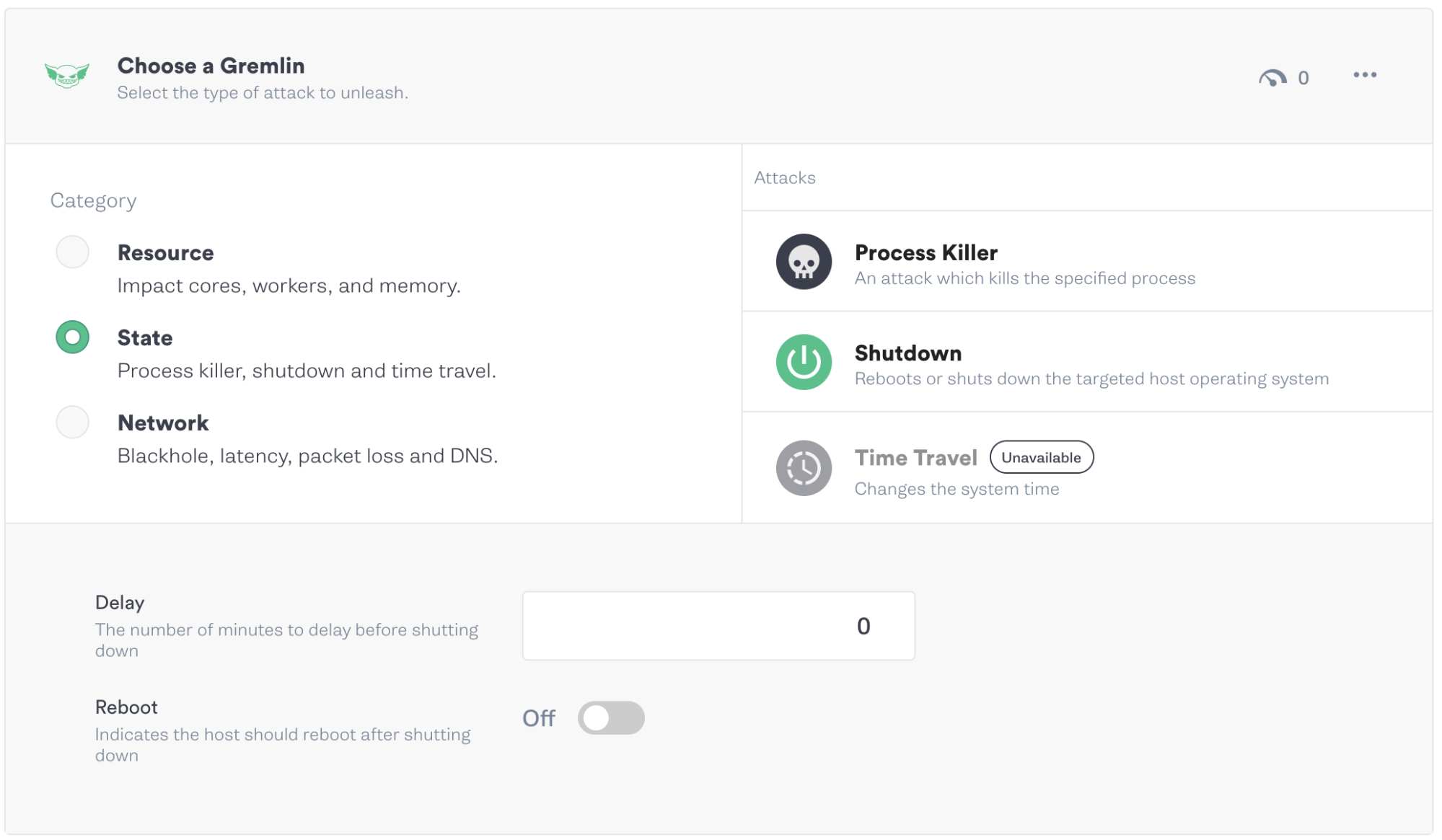

Next, we’ll select the shutdown attack type, set the Delay to 0 to run the attack immediately, and uncheck Reboot. Then, we’ll click Unleash Gremlin to run the attack.

Now if we query Kubernetes, we’ll see that the Kong ingress pod restarted once and is back in the Running status. This tells us that Kubernetes was able to recover successfully and keep Kong running despite a critical outage.

    kubectl get pods -n kong

    NAME                            READY   STATUS    RESTARTS   AGE
    ingress-kong-665f6857df-gx7xz   2/2     Running   1          46h
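To run this check repeatedly, the table can be parsed in a script. The sketch below works on the text output shown above and uses hypothetical helper names. It splits on whitespace, so it assumes the RESTARTS column holds a plain number; for anything more robust, `kubectl get pods -o json` is a safer input.

```python
def parse_pods(table):
    """Parse `kubectl get pods` table text into a list of dicts keyed by column name."""
    lines = [line for line in table.strip().splitlines() if line.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def pod_recovered(pod):
    """True if the pod is Running and all of its containers are ready."""
    ready, total = pod["READY"].split("/")
    return pod["STATUS"] == "Running" and ready == total


output = """
NAME                            READY   STATUS    RESTARTS   AGE
ingress-kong-665f6857df-gx7xz   2/2     Running   1          46h
"""

pods = parse_pods(output)
restarts = int(pods[0]["RESTARTS"])
all_recovered = all(pod_recovered(pod) for pod in pods)
print(restarts, all_recovered)  # 1 True
```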

Learn how to make your Kubernetes clusters more reliable by reading The First 5 Chaos Experiments to Run on Kubernetes.

Scaling up your reliability practice

Running one-off attacks is a great way to get started with Chaos Engineering, but eventually you’ll want to build a practice of running frequent automated experiments. This helps you make sure your API remains reliable even as your configuration and backend services change.

Gremlin helps you do this with Scenarios, which allow you to chain together and schedule multiple attacks in succession. You can use Scenarios to run multiple latency, blackhole, and other attacks, while gradually increasing the blast radius and magnitude (the intensity) of each attack to simulate large-scale failures.

Scenarios also include Status Checks, which are automated checks that can periodically check on the health of your services. You can create a Status Check to test the availability and responsiveness of your environment through a monitoring tool, or by sending a request directly to your application. For example, when testing your API gateway’s cache, you can use Scenarios to run latency attacks with increasing amounts of latency, while running an ongoing status check to test your service’s response time. If the Status Check doesn’t receive a response in the amount of time you set, it will automatically halt the Scenario and return your systems to normal.
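The halting behavior can be pictured with a small simulation. This is only an illustration of the logic described above, not Gremlin's API or implementation: `run_scenario`, the `probe` callable, and the toy service model are all assumptions made for the example.

```python
def run_scenario(latency_steps_ms, probe, max_response_ms):
    """Apply each latency step in order; halt when the status check fails.

    probe(injected_ms) returns the observed response time in milliseconds.
    Returns (completed steps, step that triggered the halt or None).
    """
    completed = []
    for injected in latency_steps_ms:
        observed = probe(injected)
        if observed > max_response_ms:
            return completed, injected   # status check failed: stop here
        completed.append(injected)
    return completed, None


def uncached_service(injected_ms):
    """Toy model: a 50 ms service with no cache, so injected delay passes through."""
    return 50 + injected_ms


completed, halted_at = run_scenario(
    [100, 250, 500, 1000], probe=uncached_service, max_response_ms=400
)
print(completed, halted_at)  # [100, 250] 500
```

With a working cache, the observed response time would stay low at every step and the simulated scenario would run to completion, returning `None` as the halting step.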

To see how Gremlin can help you build reliable APIs, contact us or try Gremlin for yourself by signing up for a free trial.