This article was originally published on May 5, 2022.

For many businesses, prioritizing reliability is an ongoing challenge. Building reliable systems and services is critical for growing revenue and customer trust, but other initiatives—like building new products and features—often take precedence since they provide a clearer and more immediate return. That's not to say reliability doesn't have clear value, but proving this value to business leaders can be tricky.

Site reliability engineers (SREs) understand the need for reliable systems better than anyone since they're directly managing those systems, responding to incidents, and fixing outages. Reliability often only becomes a priority for business leaders when it starts impacting revenue and customer experience, but by that point, damage has already been done to the brand. For businesses to take a proactive approach to reliability, leaders must understand the benefits of improved reliability and how they impact business-level metrics. This means demonstrating the positive relationship between reliable systems and key performance indicators (KPIs) like revenue growth, cost reduction, and increased customer satisfaction.

In this blog post, we'll look at several key reliability-centric metrics and KPIs that show reliability's positive impact on businesses.

Why is reliability important for businesses?

Reliability is how well we can trust a system to remain available, whether it's an application, a distributed service, a server running in a data center, or a process that employees follow like an incident response runbook. The more reliable a system is, the longer it can run unassisted before failing or requiring human intervention.

With online services in particular, reliability is an expectation. Modern customers don't just want consistently fast and stable access to online services; they demand it. Even short-term outages are immediately publicized through social networks and websites like Downdetector and can irreversibly harm a company's reputation in minutes. Plus, customers often have several options and are motivated to leave services that don't meet their reliability expectations, especially in highly competitive markets like e-commerce and SaaS.

The less reliable our systems are, the more we lose out in sales, brand recognition, and customer trust. For services that already compete on features and usability, reliability can become a key differentiator.

To understand the impact that reliability can have on a business, we need ways to measure reliability meaningfully and objectively. This is where metrics and KPIs come into play.

Quantifying reliability with metrics and key performance indicators (KPIs)

A key performance indicator (KPI) is a measurable value that tracks a business's progress toward a specific goal or objective. A metric is a method of measuring something or the results obtained from a measurement. Metrics track progress toward a KPI, and how well you're meeting your KPIs indicates how well you're meeting your objectives. For example, suppose you're an online retailer and your KPI is to increase sales by 20%. In that case, your metrics might include data points such as:

- Average order size

- Number of transactions

- Email conversion rate

- Shopping cart abandonment rate

To make reliability a business objective, we need a way to measure, track, and demonstrate the effect of improved reliability on these data points. In addition, we need to be able to benchmark against our own previous efforts to maintain consistent growth, and against industry peers to maintain a competitive advantage.

In this post, we'll look at four metrics commonly used to measure reliability: uptime, service level agreements (SLAs), mean time between failures (MTBF), and mean time to resolution (MTTR).

Uptime

Uptime is the amount of time that a system is available for use. It's typically measured either as the percentage of time that a system is accessible to users over a given period or as the percentage of user requests that the system successfully fulfilled over that period. 100% uptime is ideal but isn't realistic due to the unpredictability of complex distributed systems. Instead, teams aim for high availability, which means committing to a high minimum uptime target. For example, Netflix promises 99.99% availability, which allows for less than five minutes of downtime per month.
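To make the arithmetic behind these targets concrete, here is a minimal sketch that converts an availability target into a downtime budget. The function name and the 30-day month are assumptions made for illustration.

```python
def allowed_downtime_minutes(availability_pct: float, days: int = 30) -> float:
    """Return the downtime budget, in minutes, for an availability target.

    Assumes the measurement window is `days` full 24-hour days.
    """
    total_minutes = days * 24 * 60
    return total_minutes * (100.0 - availability_pct) / 100.0


if __name__ == "__main__":
    for target in (99.0, 99.5, 99.9, 99.99):
        budget = allowed_downtime_minutes(target)
        print(f"{target}% over 30 days allows {budget:.2f} minutes of downtime")
```

Under these assumptions, a 99.99% target leaves roughly 4.3 minutes per 30-day month, which is consistent with the "less than five minutes" figure above.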

Higher uptime targets are harder to achieve and maintain, but the benefit is your organization gains a reputation for being reliable, and customers can feel more confident putting their trust in you.

Service level agreements (SLAs)

A service level agreement (SLA) is a contract between an organization and its customers promising a minimum level of availability or uptime. If a business fails to meet its SLA(s), it may owe its customers discounts or reimbursements. For example, AWS provides full credit to customers of their Amazon EC2 service if availability falls below 95% for any given month. SLAs aren't necessary, but offering an SLA shows customers that you care enough about reliability and user satisfaction to provide financial compensation if you don't meet your targets.
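As a rough illustration of how an SLA turns measured availability into compensation, the sketch below maps a month's availability to a credit percentage. The tiers are hypothetical and are not any provider's actual terms; only the "full credit below 95%" tier mirrors the example above.

```python
# Hypothetical credit tiers, checked from best to worst:
# (availability floor in %, credit % owed at or above that floor).
# Illustrative values only, not taken from a real SLA.
CREDIT_TIERS = [
    (99.9, 0),    # target met: no credit owed
    (99.0, 10),
    (95.0, 30),
    (0.0, 100),   # below 95%: full credit
]


def service_credit_pct(measured_availability_pct: float) -> int:
    """Return the credit percentage owed for a month's measured availability."""
    for floor, credit in CREDIT_TIERS:
        if measured_availability_pct >= floor:
            return credit
    return 100  # fallback for out-of-range (negative) inputs


if __name__ == "__main__":
    for availability in (99.95, 99.5, 97.0, 94.9):
        print(f"{availability}% availability -> {service_credit_pct(availability)}% credit")
```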

Learn how to create and measure meaningful SLAs by reading our blog: Setting better SLOs using Google's Golden Signals.

Mean time between failures (MTBF)

Mean time between failures (MTBF) is the average time between system failures. This metric directly impacts uptime. A low MTBF means our systems often fail, which implies our engineers are deploying problematic code or aren't addressing the underlying causes of failure. It also means customers are more frequently impacted by incidents, and our operations teams are likely spending a lot of time managing these issues.
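One common way to compute MTBF is to divide the time a system was operating by the number of failures in that period. The sketch below assumes outages are recorded as (start, end) timestamp pairs that fall inside the measurement window; the function name and record format are illustrative.

```python
from datetime import datetime, timedelta


def mean_time_between_failures(period_start, period_end, outages):
    """Return operating time divided by failure count, or None if no failures.

    `outages` is a list of (start, end) datetime pairs within the period.
    """
    if not outages:
        return None
    downtime = sum((end - start for start, end in outages), timedelta())
    operating_time = (period_end - period_start) - downtime
    return operating_time / len(outages)


if __name__ == "__main__":
    outages = [
        (datetime(2022, 4, 3, 9, 0), datetime(2022, 4, 3, 10, 0)),
        (datetime(2022, 4, 12, 14, 0), datetime(2022, 4, 12, 15, 0)),
        (datetime(2022, 4, 25, 22, 0), datetime(2022, 4, 25, 23, 0)),
    ]
    mtbf = mean_time_between_failures(datetime(2022, 4, 1), datetime(2022, 5, 1), outages)
    print(f"MTBF: {mtbf.total_seconds() / 3600:.1f} hours")
```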

Mean time to resolution (MTTR)

Mean time to resolution (MTTR) is the average time to detect and fix problems. Unlike MTBF, we want a low MTTR since this means our engineers are addressing problems quickly. There are several ways we can accomplish this, including:

- Using monitoring and alerting solutions to quickly notify engineers of problems.

- Creating incident response playbooks to help engineers resolve problems faster.

- Automating our incident response process to reduce or even eliminate engineer intervention.

A high MTTR means our systems are down for an extended time, and our engineers struggle to troubleshoot and resolve issues. We can reduce our MTTR by proactively finding and addressing failure modes and preparing teams to respond to incidents.
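MTTR can be tracked with the same kind of incident records. This sketch assumes each incident is stored as a (started_at, resolved_at) pair, so the measured duration covers both detection and repair; the names are illustrative.

```python
from datetime import datetime, timedelta


def mean_time_to_resolution(incidents):
    """Return the average incident duration, or None if there were no incidents.

    `incidents` is a list of (started_at, resolved_at) datetime pairs.
    """
    if not incidents:
        return None
    total = sum((resolved - started for started, resolved in incidents), timedelta())
    return total / len(incidents)


if __name__ == "__main__":
    incidents = [
        (datetime(2022, 4, 3, 9, 0), datetime(2022, 4, 3, 9, 30)),
        (datetime(2022, 4, 12, 14, 0), datetime(2022, 4, 12, 15, 0)),
        (datetime(2022, 4, 25, 22, 0), datetime(2022, 4, 25, 23, 30)),
    ]
    mttr = mean_time_to_resolution(incidents)
    print(f"MTTR: {mttr.total_seconds() / 60:.0f} minutes")
```

Comparing this value before and after introducing alerting, playbooks, or automation is one way to check whether those changes are helping.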

Translating metrics to KPIs to business objectives

Metrics like uptime, SLAs, MTBF, and MTTR tell us the state of our systems in terms of reliability, but they don't tell us the value we get from being reliable. We still need to link them to our KPIs and business metrics.

For example, imagine you're an engineering manager at a SaaS company with aggressive year-over-year growth targets. Your SaaS product is the company's sole source of income, so customers must be able to access and use your service for the company to generate revenue. The more outages and downtime your service has, the more likely customers are to request reimbursements or stop using your product altogether, reducing the company's chances of reaching its growth targets.

With this context, we can create a KPI such as "our service must have a monthly uptime of 99.5%." This gives us a target but no real way to measure our compliance with that target. If we define uptime as "the number of minutes our service was accessible out of the total number of minutes in a month," this gives us a clear metric to measure using a monitoring or observability tool. We also have a way of directly tying this metric to our KPI: to keep monthly uptime at or above 99.5%, we can accumulate no more than about 216 minutes of downtime in a 30-day month. The longer our service is available, the more likely we are to meet our monthly uptime KPI, and since this KPI directly impacts sales, the company is more likely to reach its growth target. Our other metrics feed into this one as well: increasing our MTBF and lowering our MTTR both raise uptime.
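The link between the uptime metric and the 99.5% KPI can be sketched as a small compliance check. The function name, the report fields, and the 30-day month are assumptions; in practice the outage durations would come from a monitoring or observability tool.

```python
def uptime_report(outage_minutes, target_pct=99.5, days=30):
    """Summarize a month's uptime against a KPI target.

    `outage_minutes` is a list of outage durations in minutes.
    """
    total_minutes = days * 24 * 60
    downtime = sum(outage_minutes)
    budget = total_minutes * (100.0 - target_pct) / 100.0
    return {
        "uptime_pct": 100.0 * (total_minutes - downtime) / total_minutes,
        "downtime_minutes": downtime,
        "budget_minutes": budget,
        "meets_kpi": downtime <= budget,
    }


if __name__ == "__main__":
    report = uptime_report([45, 90, 30])
    print(f"Uptime: {report['uptime_pct']:.3f}%")
    print(f"Downtime used: {report['downtime_minutes']} of {report['budget_minutes']:.0f} minutes")
    print(f"KPI met: {report['meets_kpi']}")
```

Comparing downtime against the budget in minutes, rather than comparing percentages, keeps the pass/fail decision easy to explain to non-engineers.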

While business objectives are often unique to the organization, some are commonly shared. These include revenue and customer retention, which we'll explore next.

Lost revenue and added costs

The most immediate impact of an outage is lost revenue. This is especially true in e-commerce, where top e-commerce sites risk losing as much as $300,000 in sales for each minute of downtime. Outages also divert engineering resources from activities meant to generate revenue, such as feature development and performance optimization. In highly regulated industries such as finance and healthcare, outages can come with significant fines, lost trust, and even personal liability.
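A back-of-the-envelope estimate of these costs might look like the sketch below. It assumes revenue is lost at a constant per-minute rate and that responders are costed at a flat hourly rate; both are simplifications, and every parameter name is illustrative.

```python
def outage_cost(downtime_minutes, revenue_per_minute, responders=0, hourly_rate=0.0):
    """Estimate the cost of an outage as lost revenue plus responder time.

    Assumes a constant revenue rate and a flat hourly rate per responder.
    """
    lost_revenue = downtime_minutes * revenue_per_minute
    response_cost = responders * hourly_rate * downtime_minutes / 60
    return lost_revenue + response_cost


if __name__ == "__main__":
    # Ten minutes of downtime at the $300,000-per-minute figure cited above.
    print(f"${outage_cost(10, 300_000):,.0f}")
    # A smaller service: 30 minutes, $1,000 per minute, four responders at $100/hour.
    print(f"${outage_cost(30, 1_000, responders=4, hourly_rate=100.0):,.0f}")
```

This leaves out fines, reimbursements, and longer-term churn, so it is better read as a lower bound than as a full accounting.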

Customer attrition

Service providers (including SaaS, PaaS, and IaaS) must set a high reliability standard for their customers by offering reliable, performant, and quickly recoverable services. Akamai found that sites that went down experienced a permanent abandonment rate of 9%, and sites that performed slowly experienced a permanent abandonment rate of 28%. If customers can't trust the services they depend on to be operational, or if downtime impacts their ability to run their business, they'll move to a competitor.

A key indicator for customer attrition is Net Promoter Score (NPS), which measures customer satisfaction and loyalty. A high NPS indicates that customers are enthusiastic about a service, while a low NPS indicates that customers are unhappy with a service and may even dissuade potential customers. While many variables contribute to NPS, reliability has a direct and significant impact.
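For reference, NPS is conventionally calculated from 0 to 10 survey ratings as the percentage of promoters (9 or 10) minus the percentage of detractors (0 through 6). A minimal sketch, with an illustrative function name and sample data:

```python
def net_promoter_score(ratings):
    """Return NPS (between -100 and 100) from a list of 0-10 survey ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


if __name__ == "__main__":
    survey = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
    print(f"NPS: {net_promoter_score(survey):.0f}")
```

Tracking this score alongside uptime month over month is one way to look for a relationship between incidents and customer sentiment.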

Reliability is good for customers, it's good for engineers because you're going to get paged less; and when you do get paged, hopefully, it's something that's urgent so you don't have to waste that executive decision making ability. And, it's good for the business because it improves revenue, it lowers churn, it does all those healthy things.

Where these KPIs fall short

Unfortunately, these KPIs share a common problem: you can't measure them without first having an incident. For most companies, tying a dollar value to an incident is only possible if you've already experienced and documented a similar incident. You might be lucky enough to find a post-mortem from a competitor where they describe a similar incident, but you can't always rely on these. The question remains: how can you determine the value of reliability when you can't quantify the risks without experiencing an incident?

One approach is to estimate the cost of potential downtime by calculating your operating costs for a period of time, such as one hour, and then extrapolating that cost over the length of a complete outage, during which no revenue can be generated. Realistically, though, this isn't an accurate approach, and it doesn't offer any ideas on how to improve reliability to avoid the outage altogether. Teams need a way to proactively measure reliability benefits without first requiring an incident. Fortunately, there's a newer approach designed specifically for this purpose, one that applies Chaos Engineering principles to test services and objectively measure their reliability. This approach is called Reliability Management.

How Reliability Management helps improve reliability

Reliability Management aims to answer common questions teams ask when measuring and prioritizing reliability, such as:

- How can I measure reliability clearly and objectively?

- How can I proactively test my systems and services without first having to have an incident or outage?

- How can I track my services' reliability over time and demonstrate the improvements I've made to the company?

Reliability Management builds on existing Chaos Engineering principles by helping teams evaluate their services using standardized tests, generate a reliability score that accurately measures each service's reliability, and automate testing so teams can stay on top of reliability concerns or changes.

Reliability Management is a standards-based approach to baseline, remediate, and automate the reliability of complex, distributed systems.

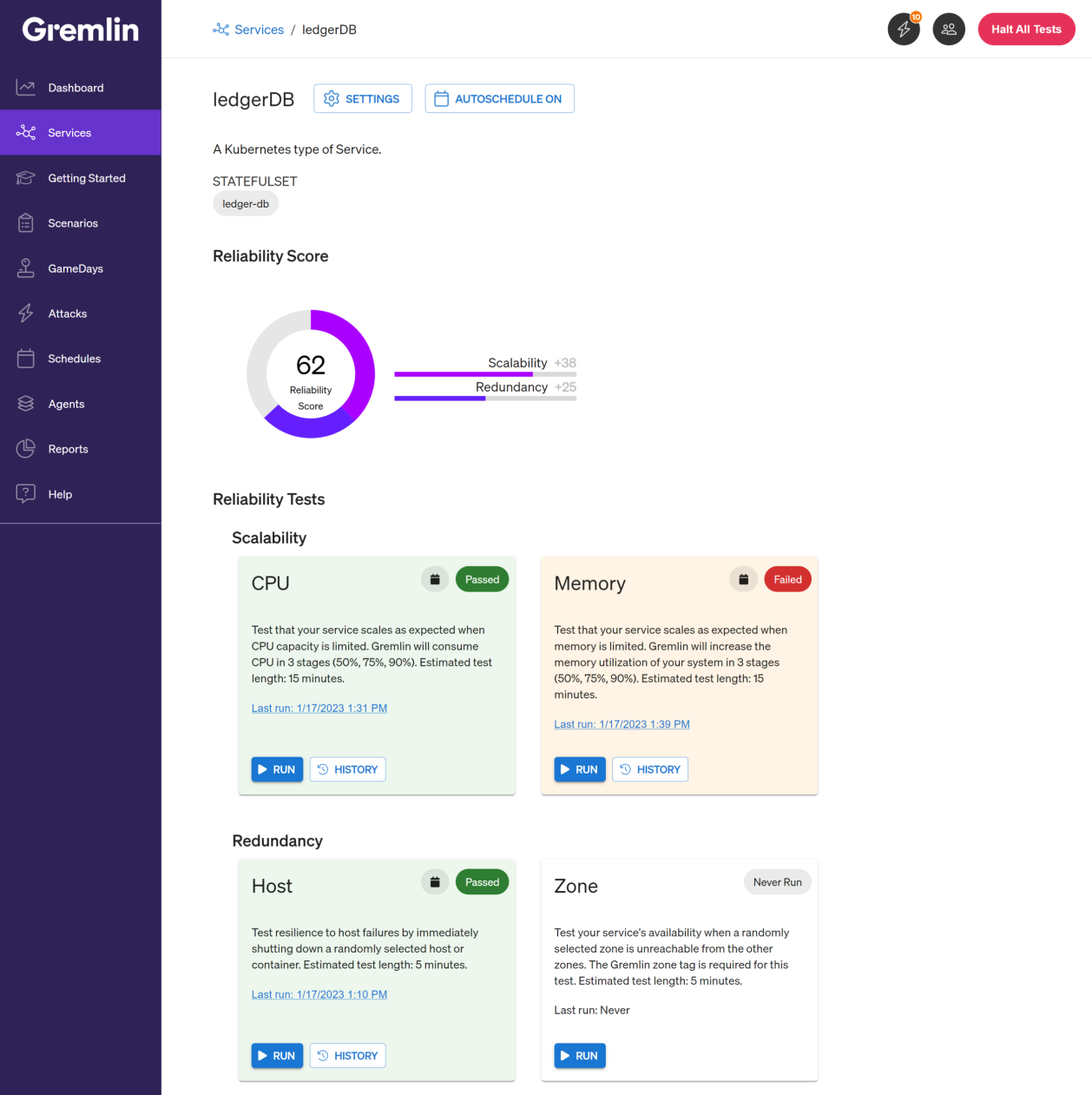

With Gremlin, you get a complete suite of pre-built reliability tests that you can run on any service in your environment in a matter of minutes. Running these tests on a service generates a reliability score for that service and provides detailed information on which components are resilient and which need additional work. For example, a service might be resilient to host failures but not to limited-memory scenarios.

This gives engineers clear direction on where to address reliability issues, while providing leadership with a clear and easily digestible measure of reliability.
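To illustrate the general idea of rolling test results up into a single score, here is a deliberately simple sketch. It is not Gremlin's scoring method, which isn't described here; the equal weighting, the test names, and the function are all assumptions made for illustration.

```python
def reliability_score(results):
    """Return (score out of 100, sorted list of failed tests).

    `results` maps a test name to True (passed) or False (failed).
    Every test is weighted equally, which is an illustrative assumption.
    """
    if not results:
        raise ValueError("no test results")
    passed = sum(1 for ok in results.values() if ok)
    score = round(100 * passed / len(results))
    needs_work = sorted(name for name, ok in results.items() if not ok)
    return score, needs_work


if __name__ == "__main__":
    # Hypothetical test names and outcomes.
    score, needs_work = reliability_score({
        "host_failure": True,
        "memory_limit": False,
        "cpu_spike": True,
        "dependency_latency": True,
    })
    print(f"Score: {score}/100, needs work: {needs_work}")
```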

How Chaos Engineering furthers reliability efforts

Reliability Management is built on Chaos Engineering, which is the practice of running tests on systems to discover and fix failure modes. Chaos Engineering is also suited to more advanced teams looking to run complex testing scenarios, which often replicate real-world conditions such as data center outages, region failover, and cascading failures. This process of intentionally introducing failures into our systems is called "fault injection," and while it may seem counterintuitive at first, exposing our systems to faults is the most effective way of improving their resilience.

One of the long-term benefits of [our Chaos Engineering practice] is it improved our MTTR and incident communications because we were able to practice without being in a live-fire scenario.

Chaos Engineering tests our assumptions about how our systems behave under certain conditions. For example, if one of our servers goes offline, can we successfully fail over to another server without our application crashing? With Chaos Engineering, we can perform a simulated outage on a specific server, observe the impact on our application, then safely halt and roll back the outage. We can then use our observations and insights to build resilience into the server and reduce the risk of real-world outages. By repeating this process, we can gradually build up the resilience of our entire deployment without putting our operations at risk. In addition, we can prepare our engineers to respond to these situations so that if they happen in production, they already have the training and muscle memory needed to respond quickly and effectively.

This effectively lets us:

- Take an in-depth look at our systems.

- Identify the different ways that they can fail.

- Address these failure modes by deploying fixes.

- Test our systems again to ensure they're no longer vulnerable to those failure modes.

- Create and promote a culture of reliability within our company.
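The failover example described earlier (take one server offline, observe the application, then halt and roll back) can be sketched as a toy simulation. Nothing here calls a real fault-injection tool: `ServerPool`, `simulated_outage`, and `run_experiment` are hypothetical names, and the "outage" only flips a flag in memory.

```python
from contextlib import contextmanager


class ServerPool:
    """Toy stand-in for a load-balanced service (hypothetical, for illustration)."""

    def __init__(self, names):
        self.healthy = {name: True for name in names}

    def handle_request(self):
        # Route to the first healthy server; fail if none are left.
        for name, ok in self.healthy.items():
            if ok:
                return name
        raise RuntimeError("no healthy servers")


@contextmanager
def simulated_outage(pool, server):
    """Mark one server as offline, and always restore it afterward (the rollback)."""
    pool.healthy[server] = False
    try:
        yield
    finally:
        pool.healthy[server] = True


def run_experiment(pool, server, requests=100):
    """Test the hypothesis that the service keeps answering while `server` is offline."""
    failures = 0
    with simulated_outage(pool, server):
        for _ in range(requests):
            try:
                pool.handle_request()
            except RuntimeError:
                failures += 1
    return failures == 0


if __name__ == "__main__":
    print("Two servers:", run_experiment(ServerPool(["a", "b"]), "a"))
    print("One server:", run_experiment(ServerPool(["a"]), "a"))
```

The context manager guarantees the rollback step runs even if the observation code raises, which mirrors the "safely halt and roll back" requirement of a real experiment.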

Conclusion

The improvements that Reliability Management and Chaos Engineering bring show up first in our low-level metrics, particularly uptime and MTBF. Finding and fixing failure modes helps increase availability and reduce the failure rate, which reduces the risk of missing our revenue targets, lowers our losses due to downtime, and improves customer satisfaction. Given how competitive online services are, companies that differentiate themselves on reliability more easily gain and retain customer trust and avoid costly incidents.

The unpredictable, the unknown, has just as much impact—if not more—on your business than the known. Being prepared for [unexpected events] and trying to find them is one of the most important things we can do.

Learn how Gremlin can help you improve reliability at your company. Start your free trial today.