Reliability testing is the process of projecting and testing a system’s probability of failure throughout the development lifecycle. The goals are to plan for and reach a required level of reliability, to drive down the number of failures before launch, and to target improvements after launch. That is a difficult mission, especially as systems increase in complexity. The purpose of reliability testing is not to achieve perfection, but to reach an acceptable level of reliability before releasing a software product into the hands of customers.

Testing can only detect the presence of errors, not their absence.

We’ll break down the different components of software reliability testing. We’ll discuss how to leverage software testing to create project plans and to achieve acceptable software quality levels using techniques steeped in the history of mechanical engineering. Finally, we’ll discuss how reliability testing is modernizing to fit today’s environments.

What is software reliability testing and how has it evolved?

Software reliability testing has its roots in reliability engineering, a statistical branch of systems engineering focused on designing and building systems that operate with minimal failure. It’s closely related to reliability and risk assessment processes such as Failure Mode and Effects Analysis (FMEA). When the practice began prior to World War II, it was used in mechanical engineering, where reliability was linked to repeatability. The expectation was that a reliable machine would produce an expected output given certain inputs consistently over a period of time. If the output varied, then the machine was considered unreliable.

This goal was the benchmark for designing and maintaining machines. Before a component of a machine could be considered ready for shipping, it had to meet a reliability standard by achieving a low enough failure rate across a series of tests over an adequate amount of time. If the machine was upgraded or maintenance was performed, the testing needed to be repeated to check that no new defects were introduced.

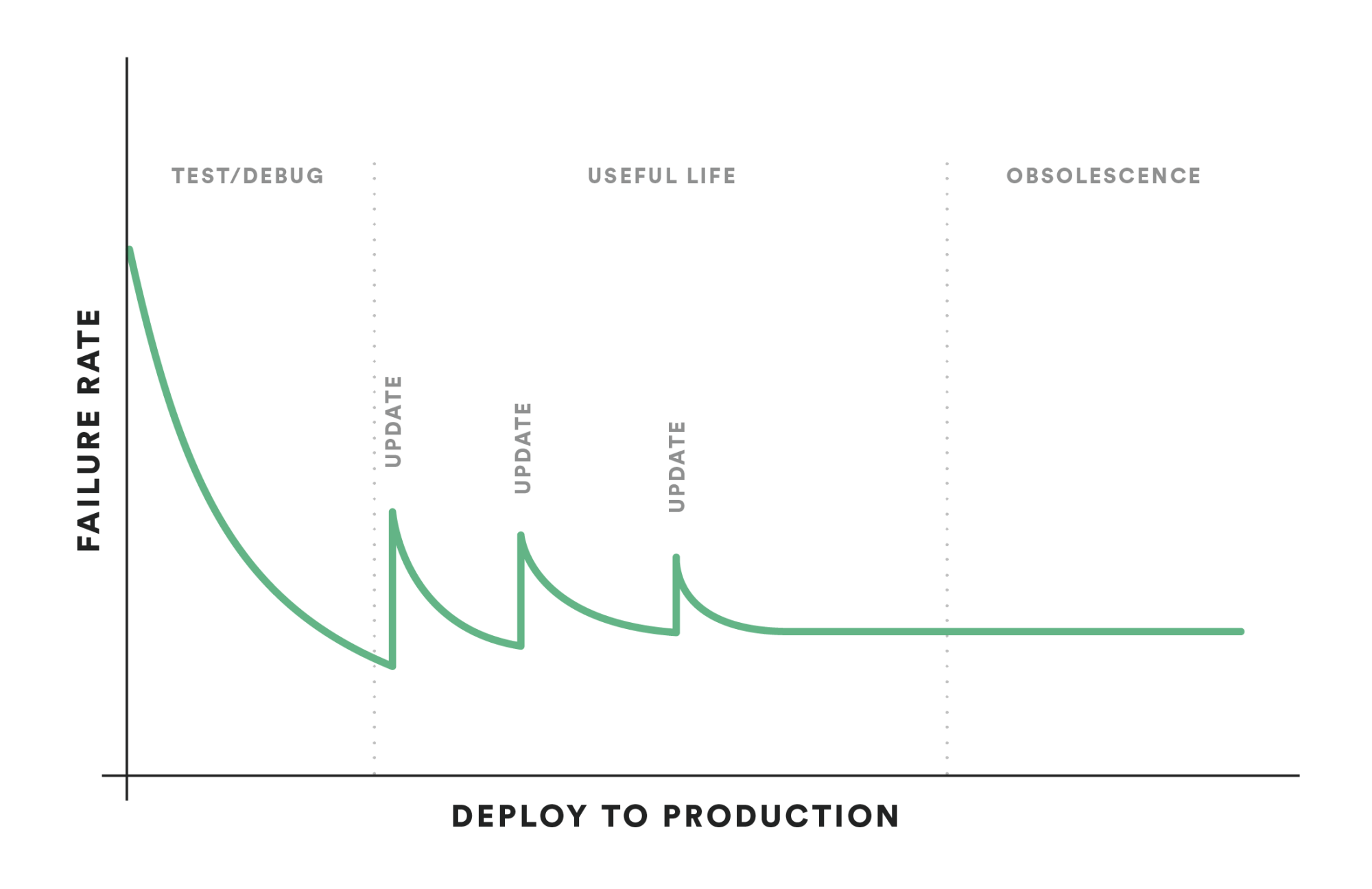

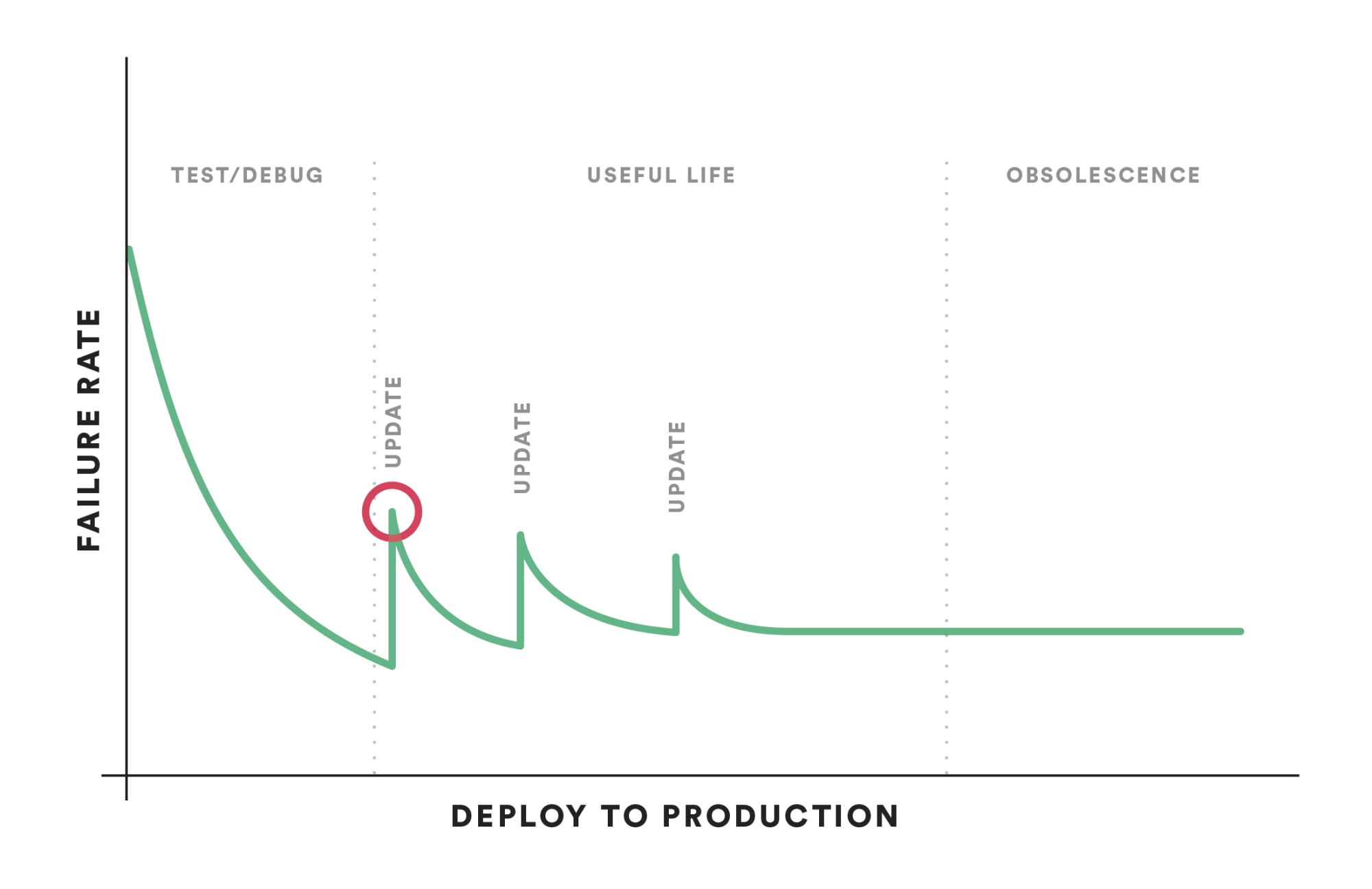

Software reliability testing is similar in principle. Although the tests that software goes through are different and still evolving, the goal is still to reach a failure rate low enough to meet predetermined standards before pushing code to production. Engineering teams use upfront failure rate projections to plan the cost of development. Teams need adequate test coverage to feel confident they are finding a high enough portion of bugs upfront. At some point, development hits diminishing returns, where the incremental improvement is not worth the extra investment.

It’s important to start the software design phase with a reliability mindset and to perform testing early, because bugs are more expensive to fix later in the software development lifecycle (SDLC). This needs to be balanced with the time investment required to reach adequate test coverage. Some bugs take time to manifest, such as memory leaks that build up gradually or buffer overflows, and it would be unreasonable to test for that long, so there are ways to accelerate testing that we’ll discuss later. While it’s important to test throughout your application, start by focusing your testing efforts on higher-use functions, especially those that lie in your application’s critical path. During development, the rate of failure should continue to decline until a new feature is added, at which point the testing cycle repeats.

Why is reliability testing important?

Reliability testing is important for the planning and development processes to:

- Provide adequate budget for fixing bugs.

- Ensure that bugs are found as early as possible.

- Ensure that software meets reliability standards prior to launch.

Since there is no way to ensure that software is completely failure free, teams must take steps to find as many failures as possible, even those that can’t be fixed, so the risks can be weighed. Then, once adequate test coverage is achieved, they can use reliability models to benchmark their reliability at each phase. This is critical because reliability can be directly tied to increased revenue, less time firefighting, lower customer churn, and improved employee morale.

Reliability testing serves two different purposes. For product and engineering teams, it provides early and frequent feedback on areas to improve and on the errors introduced with new features, and it helps scope the time and effort needed to reach launch. It also helps isolate which code pushes caused a jump in error rates.

For product management and leadership, it provides a statistical framework for planning reliability work and for benchmarking a team’s progress over time. It also signals when development teams have reached the point of diminishing returns, where the remaining risks are known and have been weighed against the cost of mitigating them.

How do we measure the results of reliability testing?

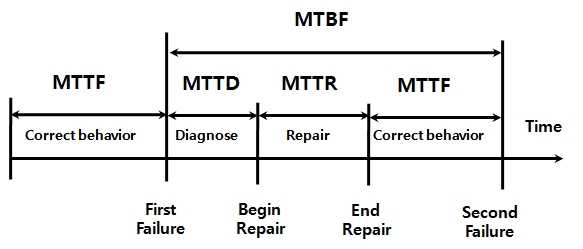

As mentioned earlier, we need to accelerate the testing process compared to real-world failure rate findings. The standard metric to track and improve is Mean Time Between Failures (MTBF), which is measured as the sum of Mean Time to Failure (MTTF), Mean Time to Detection (MTTD), and Mean Time to Repair (MTTR). MTTF is the time from one repair to the beginning of the next failure, MTTD is the time from when a failure occurs to when it is detected, and MTTR is the time from when a failure is detected to when it is fixed. Because testing is accelerated, the MTBF measured in testing is relative, not absolute.
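
As a minimal sketch of the arithmetic above, assume each failure is logged with four timestamps, expressed here in hours since the start of the test run. The `Incident` class, its field names, and the sample values are hypothetical and only serve to illustrate the definitions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Incident:
    """One failure, with times in hours since the test run started (hypothetical fields)."""
    previous_repair: float  # when the previous failure was fixed (or the run started)
    failed: float           # when this failure began
    detected: float         # when this failure was detected
    repaired: float         # when this failure was fixed


def reliability_metrics(incidents):
    """Return MTTF, MTTD, MTTR, and MTBF as their sum, per the definitions above."""
    mttf = mean(i.failed - i.previous_repair for i in incidents)
    mttd = mean(i.detected - i.failed for i in incidents)
    mttr = mean(i.repaired - i.detected for i in incidents)
    return {"MTTF": mttf, "MTTD": mttd, "MTTR": mttr, "MTBF": mttf + mttd + mttr}


# Two made-up incidents from an accelerated test run.
incidents = [
    Incident(previous_repair=0.0, failed=40.0, detected=40.5, repaired=42.0),
    Incident(previous_repair=42.0, failed=100.0, detected=101.0, repaired=102.0),
]
metrics = reliability_metrics(incidents)
print(metrics)
```

With this sample data, the MTBF works out to the total elapsed time (102 hours) divided by the number of failures (2), which is a quick sanity check on the sum.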

In Software as a Service (SaaS), failure is often defined as incorrect outputs or bad responses (for example, HTTP 400-level or 500-level errors). However, it’s important to include any failures that are meaningful to our use case, such as excessive latency in e-commerce.
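
One way to make that definition concrete is to encode it as a single predicate that every test run shares. The sketch below is illustrative only: the 500 ms latency budget and the sample responses are assumptions, and the right thresholds depend on the use case.

```python
def is_failure(status_code, latency_ms, latency_budget_ms=500):
    """Count a response as a failure if it is an HTTP error or it exceeds the latency budget.

    The 500 ms default is an assumed budget, not a standard.
    """
    return status_code >= 400 or latency_ms > latency_budget_ms


# Hypothetical (status code, latency in ms) pairs collected during a test run.
responses = [(200, 120), (200, 900), (500, 80), (404, 60), (200, 300)]

failures = sum(is_failure(status, latency) for status, latency in responses)
failure_rate = failures / len(responses)
print(f"{failures} failures out of {len(responses)} responses ({failure_rate:.0%})")
```

Note that the slow but successful response counts as a failure here, which is the point of including use-case-specific failures alongside error codes.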

While designing the tests, we ensure that test coverage is adequate and that testing is weighted toward the critical code and services, as mentioned before. Test coverage is defined as the amount of code executed during testing divided by the total code in a system, and a common rule of thumb is that test coverage should exceed 80%. This reduces the risk of an unexpected defect emerging in production.
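
The coverage ratio is simple enough to check per module as well as overall, which helps confirm that critical-path code is not hiding behind a healthy average. In this sketch the module names and line counts are made up, and line coverage is assumed as the unit of "code".

```python
THRESHOLD = 0.80  # the 80% rule of thumb mentioned above


def coverage(executed_lines, total_lines):
    """Fraction of lines executed during testing."""
    return executed_lines / total_lines


# Hypothetical (executed lines, total lines) per module.
modules = {"checkout": (450, 500), "search": (300, 400), "admin": (120, 300)}

overall = sum(e for e, _ in modules.values()) / sum(t for _, t in modules.values())
below_threshold = [name for name, (e, t) in modules.items() if coverage(e, t) < THRESHOLD]

print(f"overall coverage: {overall:.1%}")
print("modules below threshold:", below_threshold)
```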

What are the types of reliability testing?

Software reliability testing needs to uncover failure rates that mimic real-world usage in an accelerated time period. Sound testing procedures follow statistical and scientific methods:

- Begin with planning the tests.

- Set the failure rate objective before running any tests.

- Include the hypothesis for the results.

- Develop features and patches.

- Perform the tests.

- Track and model the failure rate.

- Repeat the develop, test, and track steps until the failure rate objective is met.
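
The iteration at the end of this list can be sketched as a loop that stops once the measured failure rate meets the objective set during planning. The function name, the `max_cycles` guard, and the measurements below are hypothetical; in practice `run_test_cycle` would develop, test, and measure rather than look up a number.

```python
def run_until_objective(objective, run_test_cycle, max_cycles=10):
    """Repeat test cycles until the measured failure rate meets the objective.

    Returns the cycle number that met the objective (or None) and the full history.
    """
    history = []
    for cycle in range(1, max_cycles + 1):
        failure_rate = run_test_cycle(cycle)  # develop, test, and measure
        history.append(failure_rate)          # track the failure rate over time
        if failure_rate <= objective:
            return cycle, history
    return None, history


# Hypothetical measurements: failures per 1,000 requests after each round of fixes.
measured = {1: 12.0, 2: 7.5, 3: 4.0, 4: 1.8}

met_in_cycle, history = run_until_objective(objective=2.0, run_test_cycle=measured.get)
print(met_in_cycle, history)
```

Keeping the objective fixed while the history grows is what makes the cycles comparable to one another.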

Test plans should only be updated methodically. If they deviate too far, comparing new failure rates to previous ones becomes an apples-to-oranges comparison. It is, however, prudent to make small adjustments as the software is developed based on user feedback and user testing. Initial, hypothetical transaction paths, for example, can be updated with actual user paths.

There are several different kinds of testing performed to find error rates. For many teams, it's a combination of using traditional Quality Assurance (QA) tools supplemented by modern testing tools.

Feature testing

Feature testing is the process of testing a feature end-to-end to ensure that it works as designed. This comes after unit and functional testing have been completed. Test cases can be performed with alpha testing, beta testing, A/B testing, and canary testing. Usage is typically limited to focus on just the feature in question. Tools like LaunchDarkly and Optimizely are used to create feature flags and test groups so testing can be limited and easily rolled back if too many errors are found.
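
To illustrate the idea of a limited test group with a rollback rule, here is a small sketch in plain Python. It does not use the LaunchDarkly or Optimizely APIs; the hash-based bucketing, the function names, and the one-percentage-point tolerance are all assumptions made for the example.

```python
import hashlib


def in_canary(user_id, feature, rollout_percent):
    """Deterministically place a user in the canary group for a feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket from 0 to 99
    return bucket < rollout_percent


def should_roll_back(canary_errors, canary_requests,
                     control_errors, control_requests, tolerance=0.01):
    """Roll back if the canary error rate exceeds the control rate by more than the tolerance."""
    canary_rate = canary_errors / canary_requests
    control_rate = control_errors / control_requests
    return canary_rate > control_rate + tolerance


# The same user always lands in the same group for a given feature.
print(in_canary("user-42", "new-checkout", rollout_percent=10))

# Hypothetical counts: 3% errors in the canary group versus 1% in the control group.
print(should_roll_back(30, 1000, 10, 1000))
```

Hashing the feature name together with the user ID keeps group membership stable across requests while avoiding the same users being picked for every experiment.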

Load testing

Load testing, a form of performance testing, is the process of testing services under maximum expected load. Software is used to simulate the maximum number of expected users plus some additional margin to see how an application handles peak traffic. Measuring error rates and latency during load testing helps ensure that performance is maintained. Open source tools like JMeter are often used in load testing, sometimes alongside browser automation tools like Selenium.
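
The shape of a load test (peak users plus a margin, with error rate and latency recorded) can be sketched in a few lines. This is not a substitute for a real load testing tool: `handle_request` is a hypothetical in-process stand-in for the service under test, and the 20% margin and worker count are assumed values.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id):
    """Stand-in for the service under test (hypothetical): every 10th request fails."""
    time.sleep(0.001)  # simulated processing time
    if request_id % 10 == 0:
        raise RuntimeError("simulated server error")
    return "ok"


def timed_call(request_id):
    """Call the service once and return (succeeded, latency in seconds)."""
    start = time.perf_counter()
    try:
        handle_request(request_id)
        succeeded = True
    except Exception:
        succeeded = False
    return succeeded, time.perf_counter() - start


def run_load_test(expected_peak_users, margin_percent=20, workers=20):
    """Send peak traffic plus a safety margin and summarize errors and latency."""
    total = expected_peak_users + expected_peak_users * margin_percent // 100
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(timed_call, range(1, total + 1)))
    latencies = sorted(latency for _, latency in results)
    errors = sum(1 for succeeded, _ in results if not succeeded)
    p95 = latencies[int(0.95 * (len(latencies) - 1))]
    return {"requests": total, "error_rate": errors / total, "p95_latency_s": p95}


report = run_load_test(expected_peak_users=100)
print(report)
```

Reporting a high percentile rather than the average latency makes it harder for a small number of very slow requests to go unnoticed.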

Regression testing

Regression testing is not a specific set of tests, but rather the practice of rerunning existing tests or creating new ones that replicate previously fixed bugs. The idea is that new features or other patches should not reintroduce bugs, so it’s important to consistently test for both old and new bugs. Regression testing can be performed less frequently than the previous tests so that the test suite doesn’t balloon and take longer than the period designated for testing.
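
As a small illustration, suppose a bug was once fixed in which a discount larger than the cart total produced a negative charge. The function and the bug are hypothetical; the pattern is that the failing input from the original bug report becomes a permanent test.

```python
def apply_discount(total_cents, discount_cents):
    """Apply a discount without letting the total go negative (the hypothetical original bug)."""
    return max(total_cents - discount_cents, 0)


def test_discount_larger_than_total_regression():
    # Reproduces the input from the previously fixed bug report.
    assert apply_discount(500, 800) == 0


def test_normal_discount_still_works():
    # Guards the ordinary path so the fix itself does not break it.
    assert apply_discount(500, 200) == 300


test_discount_larger_than_total_regression()
test_normal_discount_still_works()
```

Written as plain `test_` functions, these can be called directly as shown or collected by a test runner such as pytest.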

How can Chaos Engineering be applied to reliability testing?

In modern, distributed architectures, it’s important to perform Chaos Engineering to build confidence in reliability. Chaos Engineering is the practice of methodically injecting common failure modes into a system to see how it behaves. Think of it as accelerated life testing (ALT) for software. Instead of using stress testing like a heated room to see how a machine handles wear and tear, we spike resources or inject networking issues to see how a system handles an inevitable memory overflow or lost dependency that wouldn’t occur if just testing in cleanroom conditions.

Chaos Engineering complements the previous three testing methodologies to provide more holistic testing. In feature testing, a portion of the testing group performs their testing while chaos experiments simulate common failures, and their results are compared to those of a control group without the failure modes. The best process for load testing is to test systems under load in ideal environmental conditions, test failover and fallback mechanisms using Chaos Engineering without load, and then retest the system under load while a node drops out or a database connection has added latency. In these different tests, the end user, whether a beta tester or load generator, should not see a spike in error rates or latency. If we find a cause of failure using Chaos Engineering, such as our system failing to fail over to another database in the event of a node outage, we can add a regression test to ensure it doesn’t happen again.
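
The database failover scenario can be modeled in miniature to show what such an experiment checks: measure a baseline, inject the failure, and confirm the error rate seen by the caller does not spike. Everything here (the `Database` and `Client` classes, the `available` switch used to inject the outage) is a toy model built for illustration, not how any particular chaos tool works.

```python
class Database:
    """Toy database node whose availability can be switched off to simulate an outage."""

    def __init__(self, name):
        self.name = name
        self.available = True

    def query(self, key):
        if not self.available:
            raise ConnectionError(f"{self.name} is unreachable")
        return f"{key} from {self.name}"


class Client:
    """Client that fails over to a replica when the primary is unreachable."""

    def __init__(self, primary, replica):
        self.primary = primary
        self.replica = replica

    def get(self, key):
        try:
            return self.primary.query(key)
        except ConnectionError:
            return self.replica.query(key)


def error_rate(client, requests=100):
    """Fraction of requests that surface an error to the caller."""
    errors = 0
    for i in range(requests):
        try:
            client.get(f"item-{i}")
        except ConnectionError:
            errors += 1
    return errors / requests


primary, replica = Database("primary"), Database("replica")
client = Client(primary, replica)

baseline = error_rate(client)       # steady state, no failure injected
primary.available = False           # experiment: simulate a node outage
during_outage = error_rate(client)  # hypothesis: users see no extra errors
primary.available = True            # halt the experiment and restore the node

print(baseline, during_outage)
```

If `during_outage` had come back higher than `baseline`, the failing scenario would be a natural candidate for a new regression test.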

What are the testing processes?

Tests follow these techniques and processes.

- Test-retest reliability: Using the same testers, retest our system a few hours or days later. The results should be highly correlated, regardless of whether it’s a human or load test. The tricky part is that modern systems often shift and change regardless of whether any new code is pushed.

- Parallel or alternative reliability: If two different forms of testing or users follow the same path at the same time, their results should be the same. In other words, if our load test follows our beta tester’s path, the result should be the same.

- Inter-rater reliability: Two different, independent raters should provide similar results. This tests for scoring validation. If we bring in another QA engineer, their scoring of a feature’s functionality from tests should not differ greatly from the original QA engineer.
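
Two of these checks reduce to simple statistics. The sketch below uses the Pearson correlation coefficient for test-retest results and plain percent agreement for inter-rater results; both are assumed choices for illustration (other measures exist), and the sample data is made up.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance_x = sum((x - mean_x) ** 2 for x in xs)
    variance_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(variance_x * variance_y)


def agreement(rater_a, rater_b):
    """Fraction of items on which two raters gave the same score."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Test-retest: hypothetical error rates (%) per scenario, measured two days apart.
first_run = [0.8, 1.1, 0.5, 2.0, 1.4]
retest = [0.9, 1.0, 0.6, 2.1, 1.3]
print(f"test-retest correlation: {pearson(first_run, retest):.3f}")

# Inter-rater: two QA engineers scoring the same four test cases.
qa_one = ["pass", "pass", "fail", "pass"]
qa_two = ["pass", "fail", "fail", "pass"]
print(f"inter-rater agreement: {agreement(qa_one, qa_two):.0%}")
```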

How do we model test results?

Modern software is complex, and therefore modeling the reliability of modern software is equally complex. It is impossible to design the perfect model for every situation, and over 225 models have been developed to date. Pick the one that works best for the software being tested. There are three types of models: prediction, estimation, and actual models.

Prediction modeling uses historical data from other development cycles to predict the failure rate of new software over time. A few examples of prediction models include:

- Musa Model: Takes the number of machine instructions, not including data declarations and reused debugged instructions, and multiplies it by a failure rate between one and ten, which decreases over time.

- Rayleigh Model: This model assumes bug patching is constant and that more bug discoveries earlier means fewer bugs discovered later. Visually, this means higher peaks result in faster declines in failure rates. It covers the entire software development lifecycle, including design, unit testing, integration testing, system testing, and real-world failure rates.
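
To make the Rayleigh shape concrete, the sketch below uses one common parameterization of the curve, in which `total_defects` is the expected lifetime defect count and `peak_time` is when the discovery rate peaks. Both parameter names and the sample values are assumptions; in practice they would be fitted from historical projects.

```python
from math import exp


def rayleigh_rate(t, total_defects, peak_time):
    """Expected defect discovery rate at time t under a Rayleigh curve."""
    return total_defects * (t / peak_time**2) * exp(-t**2 / (2 * peak_time**2))


def rayleigh_cumulative(t, total_defects, peak_time):
    """Expected number of defects discovered by time t."""
    return total_defects * (1 - exp(-t**2 / (2 * peak_time**2)))


# Hypothetical project: 200 total defects, with time measured in months.
for month in range(0, 13, 2):
    early = rayleigh_rate(month, total_defects=200, peak_time=3)
    late = rayleigh_rate(month, total_defects=200, peak_time=5)
    print(f"month {month:2d}: early-peak rate {early:5.1f}, late-peak rate {late:5.1f}")
```

Comparing the two columns shows the behavior described above: for the same total number of defects, the curve with the earlier, higher peak falls away faster in the later months.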

Estimation models take historical data, similar to prediction models, and combine it with actual data. That way, models are updated and compared to the current stage of development. The Weibull and exponential models are the most common. These models are fitted using least squares estimation (LSE) and maximum likelihood estimation (MLE) to best align the curves with the actual data provided.
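
For the simplest case, an exponential model with a constant failure rate, the maximum likelihood estimate has a closed form: the number of observed failures divided by the total observed time. The sketch below assumes that model and uses made-up times between failures; a Weibull fit generally needs a numerical optimizer or one of the tools mentioned later.

```python
from math import exp


def fit_exponential_mle(times_between_failures):
    """MLE of the failure rate for an exponential model: failures / total time."""
    return len(times_between_failures) / sum(times_between_failures)


def reliability(t, failure_rate):
    """Probability of running for time t without a failure under the exponential model."""
    return exp(-failure_rate * t)


# Hypothetical hours between consecutive failures observed during testing.
observed_hours = [12.0, 30.0, 18.0, 40.0, 50.0]

rate = fit_exponential_mle(observed_hours)
print(f"estimated failure rate: {rate:.4f} per hour")
print(f"estimated mean time between failures: {1 / rate:.1f} hours")
print(f"probability of 24 failure-free hours: {reliability(24, rate):.2f}")
```

Refitting as each new failure arrives is what lets the estimate be compared against the original prediction at every stage of development.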

Actual or field models simply record real user failure rates over time. These can be compared to the prediction and estimation models used previously to track reliability progress against plans.

If we’re using agile methodologies, we can either track each sprint or combine multiple related sprints into a single model.

What software is used to track reliability testing?

Many of the models mentioned above can be calculated manually using statistical software, such as SAS. There are also off-the-shelf options, such as CASRE (Computer Aided Software Reliability Estimation Tool), SOFTREL, SoRel (Software Reliability Analysis and Prediction), WEIBULL++, and more. These tools provide different models to help with prediction or estimation.

Who should perform software reliability testing?

Software reliability testing has been around for decades, yet the concepts and models are still relevant today. QA and SRE teams are often tasked with the reliability testing, and reports of the testing are used by engineering managers. However, with the shift left movement, some of this testing can be done by developers to not only test for reliability, but also to create a reliability mindset, where designs and development include resiliency measures.

Reliability testing helps with planning, design, and development by mapping out costs and benchmarking against reliability standards. Reliability testing needs to continue to evolve alongside new architectures, like distributed systems. The result is more performant and robust software and an improved customer experience.