The COVID-19 pandemic has created a state of uncertainty, and many organizations are turning to cost-saving measures as a precaution. In a survey by PwC, 74% of CFOs expect a significant impact on their operations and liquidity. As a result, many organizations are looking to reduce costs wherever possible, and this includes cloud computing.

As cloud computing becomes more central to businesses, so does the need to optimize cloud costs. More than half of the enterprises surveyed by RightScale spend over $1.2 million annually on public cloud platforms, and optimizing existing cloud costs is the top priority for 64% of these organizations. At the same time, the world is arguably more reliant on cloud computing and online services now than ever before. Making changes to your cloud infrastructure may seem risky unless you have a way to verify the impact of your cost-saving measures.

In this article, we’ll explore several cost optimization measures that you can apply to your cloud infrastructure. We’ll focus on Amazon Web Services, specifically Elastic Compute Cloud (EC2). Besides being one of the oldest AWS services and a cornerstone of the AWS platform, it’s also one of the most complex and feature-rich. Over time, organizations can accumulate unnecessary infrastructure and hidden costs, such as:

- More expensive purchasing options (e.g. on-demand or reserved instances vs. Spot Instances)

- Underutilized instance capacity

- Instances that are stopped but not terminated (their attached EBS volumes still incur charges)

- Unused Elastic Load Balancing (ELB) load balancers

- Orphaned EBS volumes and snapshots

- Unattached Elastic IP addresses
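Some of these items can be found programmatically. The following is a minimal sketch, assuming you have already fetched response dictionaries from boto3’s EC2 client (`describe_instances`, `describe_volumes`, and `describe_addresses`); the helper function names are our own, and it doesn’t cover load balancers or snapshots. Anything it flags is a candidate for testing, not for immediate deletion.

```python
"""Flag common sources of idle EC2 spend from describe_* API responses.

A sketch: each function takes a response dictionary shaped like the ones
boto3's EC2 client returns, so it can be exercised without AWS credentials.
"""
from typing import Any


def stopped_instances(resp: dict[str, Any]) -> list[str]:
    """IDs of instances in the 'stopped' state (their EBS volumes still bill)."""
    return [
        inst["InstanceId"]
        for reservation in resp.get("Reservations", [])
        for inst in reservation.get("Instances", [])
        if inst.get("State", {}).get("Name") == "stopped"
    ]


def unattached_volumes(resp: dict[str, Any]) -> list[str]:
    """IDs of EBS volumes in the 'available' state, i.e. attached to nothing."""
    return [
        vol["VolumeId"]
        for vol in resp.get("Volumes", [])
        if vol.get("State") == "available"
    ]


def unassociated_eips(resp: dict[str, Any]) -> list[str]:
    """Public IPs of Elastic IP addresses that have no association."""
    return [
        addr["PublicIp"]
        for addr in resp.get("Addresses", [])
        if "AssociationId" not in addr
    ]
```

With credentials configured, you could pass, for example, `boto3.client("ec2").describe_volumes()` straight into `unattached_volumes`; in larger accounts, use the client’s paginators so that every page of results is checked.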

Cost optimization is so important to AWS that it’s one of the pillars of the AWS Well-Architected Framework. However, finding extraneous costs isn’t always easy. For all we know, that one t2.small instance that an ex-employee spun up two years ago is hosting an essential service. Before we can start paring down resources, we need to understand what the impact will be to our applications. We can accomplish this with Chaos Engineering.

Chaos Engineering is the process of deliberately experimenting on a system by injecting measured amounts of harm, with the goal of ultimately improving the system’s reliability. In addition to this, we can use Chaos Engineering to help optimize our EC2 costs by proactively testing the impact of cost-optimization measures. This way, we can confidently reduce our spending without impacting our customers.

We’ll look at three approaches to reducing our AWS costs through Chaos Engineering:

- Moving from on-demand or reserved instances to Spot Instances

- Optimizing the size of our instances by using smaller instance types

- Finding and terminating unused instances

Moving from on-demand to Spot Instances

By default, new EC2 instances are created as on-demand instances. This means that we’re charged a fixed hourly rate (billed per second for Linux instances) for as long as the instance is running. On-demand instances are useful for running stateful workloads without making a long-term commitment, but if your workloads are stateless or can tolerate interruptions, there’s a more cost-effective purchasing option: Spot Instances.

A Spot Instance essentially runs on leftover EC2 capacity, which makes it well suited to temporary workloads. Spot prices are significantly lower than on-demand prices (discounts of up to 90%), making Spot Instances a much less expensive alternative, even compared to reserved instances (RIs). And while RIs require a one- or three-year commitment, often with a large upfront payment, Spot Instances are billed pay-as-you-go, just like on-demand instances.
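To see what that discount means in practice, multiply the hourly rate by the number of instances and hours. This is a rough sketch: the $0.10 and $0.03 hourly rates are hypothetical placeholders, not real EC2 prices, and 730 hours is a common approximation of one month.

```python
"""Back-of-the-envelope comparison of on-demand and Spot costs.

The rates used below are hypothetical placeholders; look up current prices
for your region and instance type, since Spot prices change over time.
"""

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


def monthly_cost(hourly_rate: float, instances: int, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running `instances` copies for `hours` at `hourly_rate` dollars per hour."""
    return hourly_rate * instances * hours


def savings_percent(on_demand_rate: float, spot_rate: float) -> float:
    """Percentage saved per instance-hour by paying the Spot rate instead."""
    return (on_demand_rate - spot_rate) / on_demand_rate * 100


# Hypothetical rates: $0.10/hour on demand vs. $0.03/hour Spot, for 10 instances.
on_demand = monthly_cost(0.10, 10)
spot = monthly_cost(0.03, 10)
print(f"on-demand ${on_demand:,.2f}/mo, Spot ${spot:,.2f}/mo, "
      f"saving {savings_percent(0.10, 0.03):.0f}%")
```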

The drawback is that EC2 can interrupt your Spot Instance at any time if it needs the capacity back. AWS sends a two-minute interruption notice before it terminates, stops, or hibernates the instance, giving you time to safely stop your workloads and move data off the instance. Spot prices also change over time based on supply and demand, so your actual costs may vary.
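One way to use the two-minute warning is to poll the instance metadata service for an interruption notice. This sketch assumes IMDSv2 is enabled on the instance: the `spot/instance-action` path returns HTTP 404 until an interruption is scheduled, then a small JSON document with the action and time. The function names and five-second poll interval are our own choices, and `on_notice` is where your own (hypothetical) drain logic would go.

```python
"""Poll for a Spot interruption notice and compute how much time is left.

A sketch assuming IMDSv2 is reachable from the instance. The instance-action
path returns HTTP 404 until an interruption is scheduled, then a JSON body
such as {"action": "terminate", "time": "2017-09-18T08:22:00Z"}.
"""
import json
import time
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Callable, Optional

IMDS = "http://169.254.169.254/latest"


def fetch_instance_action(timeout: float = 1.0) -> Optional[str]:
    """Return the raw instance-action document, or None if none is scheduled."""
    # IMDSv2: obtain a short-lived session token first.
    token_req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    with urllib.request.urlopen(token_req, timeout=timeout) as resp:
        token = resp.read().decode()
    req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no interruption scheduled yet
            return None
        raise


def seconds_remaining(doc: str, now: Optional[datetime] = None) -> float:
    """Seconds between `now` and the interruption time given in the notice."""
    when = datetime.strptime(json.loads(doc)["time"], "%Y-%m-%dT%H:%M:%SZ")
    when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return (when - now).total_seconds()


def wait_for_interruption(fetch: Callable[[], Optional[str]] = fetch_instance_action,
                          on_notice: Callable[[str], None] = print,
                          poll_seconds: float = 5.0) -> str:
    """Block until a notice appears, pass it to `on_notice`, and return it."""
    while True:
        doc = fetch()
        if doc is not None:
            on_notice(doc)  # drain connections, flush data, etc.
            return doc
        time.sleep(poll_seconds)
```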

Spot Instances are useful for running workloads that are stateless or can tolerate failure, such as web proxies, CI/CD servers, and even Kubernetes nodes. But before we start transitioning workloads to Spot Instances, we should make sure that they can tolerate a sudden shutdown request without an interruption in service or data loss.

Verifying Spot Instance compatibility with Chaos Engineering

Assuming we have an application running on an on-demand instance, we can run an experiment to test its failure tolerance by restarting the instance. An experiment like this works best with workloads that are already distributed or replicated, such as load-balanced web servers.

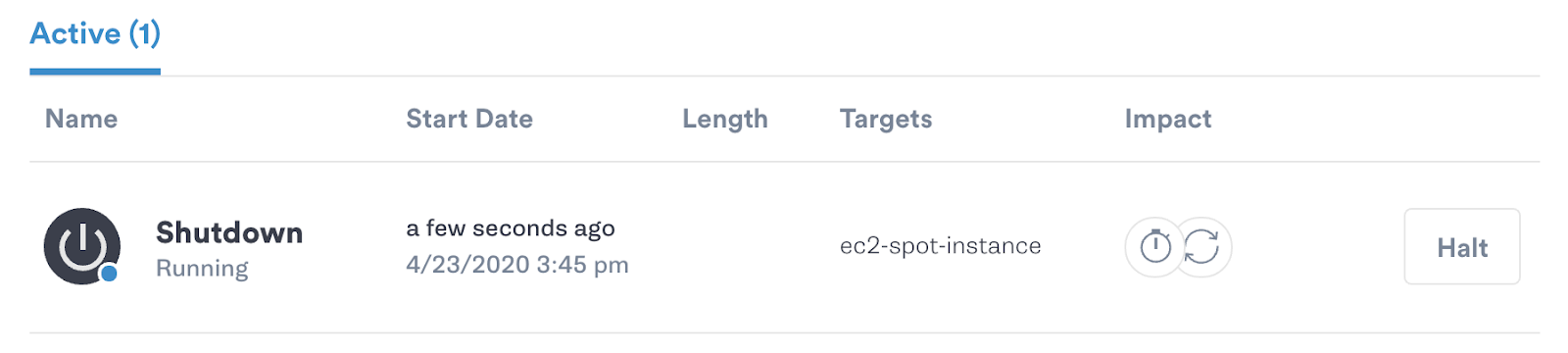

Using Gremlin, we select our target instance and run a shutdown attack to simulate EC2 reclaiming it. We then monitor our application for any noticeable impact. If the application continues functioning normally, we can infer that Spot Instances are a viable option, and we might consider creating a Spot Fleet to maintain a target level of capacity.
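To make “noticeable impact” concrete, we can probe a health-check endpoint throughout the shutdown attack and compute the fraction of successful responses. This is a sketch under stated assumptions: the health-check URL, probe count, interval, and 99% threshold are all hypothetical and should come from your own service-level objectives.

```python
"""Quantify availability while a shutdown attack runs.

A sketch: probe a health-check URL at a fixed interval and compare the
success rate against a threshold you choose (0.99 here is hypothetical).
"""
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """True if `url` answers with a 2xx status within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError (4xx/5xx), and socket timeouts are all OSErrors.
        return False


def success_rate(check: Callable[[], bool], probes: int, interval: float) -> float:
    """Run `check` `probes` times, `interval` seconds apart; return the pass fraction."""
    passed = 0
    for _ in range(probes):
        passed += check()
        time.sleep(interval)
    return passed / probes


def tolerated_shutdown(rate: float, threshold: float = 0.99) -> bool:
    """Whether the service stayed available enough to be a Spot candidate."""
    return rate >= threshold
```

For example, `success_rate(lambda: http_ok("http://app.example.com/health"), probes=120, interval=1.0)` would sample a hypothetical endpoint once per second for two minutes, roughly the length of a Spot interruption notice.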

Reducing instance capacity and utilizing horizontal scaling

AWS EC2 offers an enormous range of instance families and instance types, from tiny t1 instances to enterprise-scale x1 instances. Instance types differ in CPU architecture, CPU and RAM capacity, storage throughput, and of course price. Choosing the right instance type for each workload can yield significant cost savings.
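Right-sizing can start from observed usage: take a high percentile of CPU and memory consumption, add some headroom, and pick the cheapest type that fits. In this sketch, the catalog you pass in (names, sizes, prices) is an assumption you would fill from current EC2 pricing for your region, CPU usage is expressed in cores (CPU utilization × vCPU count), and the 20% headroom is an arbitrary default.

```python
"""Pick the cheapest instance type that covers p95 usage plus headroom.

The catalog is supplied by the caller; any prices in it are placeholders
you should replace with current pricing for your region.
"""
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float  # placeholder price, not a real quote


def p95(samples: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def right_size(catalog: list[InstanceType], cpu_cores_used: list[float],
               mem_gib_used: list[float], headroom: float = 0.2) -> InstanceType:
    """Cheapest type whose vCPUs and memory exceed p95 usage by `headroom`."""
    need_cpu = p95(cpu_cores_used) * (1 + headroom)
    need_mem = p95(mem_gib_used) * (1 + headroom)
    fits = [t for t in catalog if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    if not fits:
        raise ValueError("no instance type in the catalog is large enough")
    return min(fits, key=lambda t: t.hourly_usd)
```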

A common argument for provisioning oversized instances is to leave a buffer for unexpected spikes in demand. While you should maintain a small buffer, there are more cost-effective ways to handle spikes, such as:

- Using EC2 Auto Scaling to dynamically add and remove instances in response to demand

- Using Burstable Performance Instances to run above your instance’s baseline CPU performance for short bursts as needed
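Burstable instances earn CPU credits while running below their baseline and spend them while running above it; one CPU credit equals one vCPU at 100% utilization for one minute. The sketch below estimates how long a burst can last from a given credit balance. The 2-vCPU, 10%-baseline figures are illustrative, so check the documented baseline for your instance type. The calculation models standard mode, where the instance falls back to its baseline once credits run out; in unlimited mode, bursting continues and surplus credits are billed instead.

```python
"""CPU-credit arithmetic for a burstable (T-family) instance.

One CPU credit = one vCPU at 100% utilization for one minute. Credits
accrue at the baseline rate and are spent at the actual utilization rate.
"""


def credits_per_hour(vcpus: int, utilization: float) -> float:
    """Credits used per hour at `utilization` (a fraction per vCPU, e.g. 0.10).

    At the baseline utilization this is also the hourly earning rate.
    """
    return vcpus * utilization * 60


def burst_minutes(balance: float, vcpus: int, baseline: float, burst_util: float) -> float:
    """Minutes a burst at `burst_util` can last before the balance reaches zero."""
    net_per_minute = (credits_per_hour(vcpus, burst_util)
                      - credits_per_hour(vcpus, baseline)) / 60
    if net_per_minute <= 0:
        return float("inf")  # at or below baseline, the balance never drains
    return balance / net_per_minute


# Illustrative: 2 vCPUs with a 10% baseline earn 12 credits per hour.
earned = credits_per_hour(2, 0.10)
# Running both vCPUs at 100% spends 120 credits/hour, a net drain of 1.8 per minute.
minutes_from_144 = burst_minutes(144, 2, 0.10, 1.0)
```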

Regardless of the method used, we want to make sure that we can respond to increased demand quickly without paying for resources that sit idle during quiet periods. We can test this process using Chaos Engineering.

Testing automatic scaling with Chaos Engineering

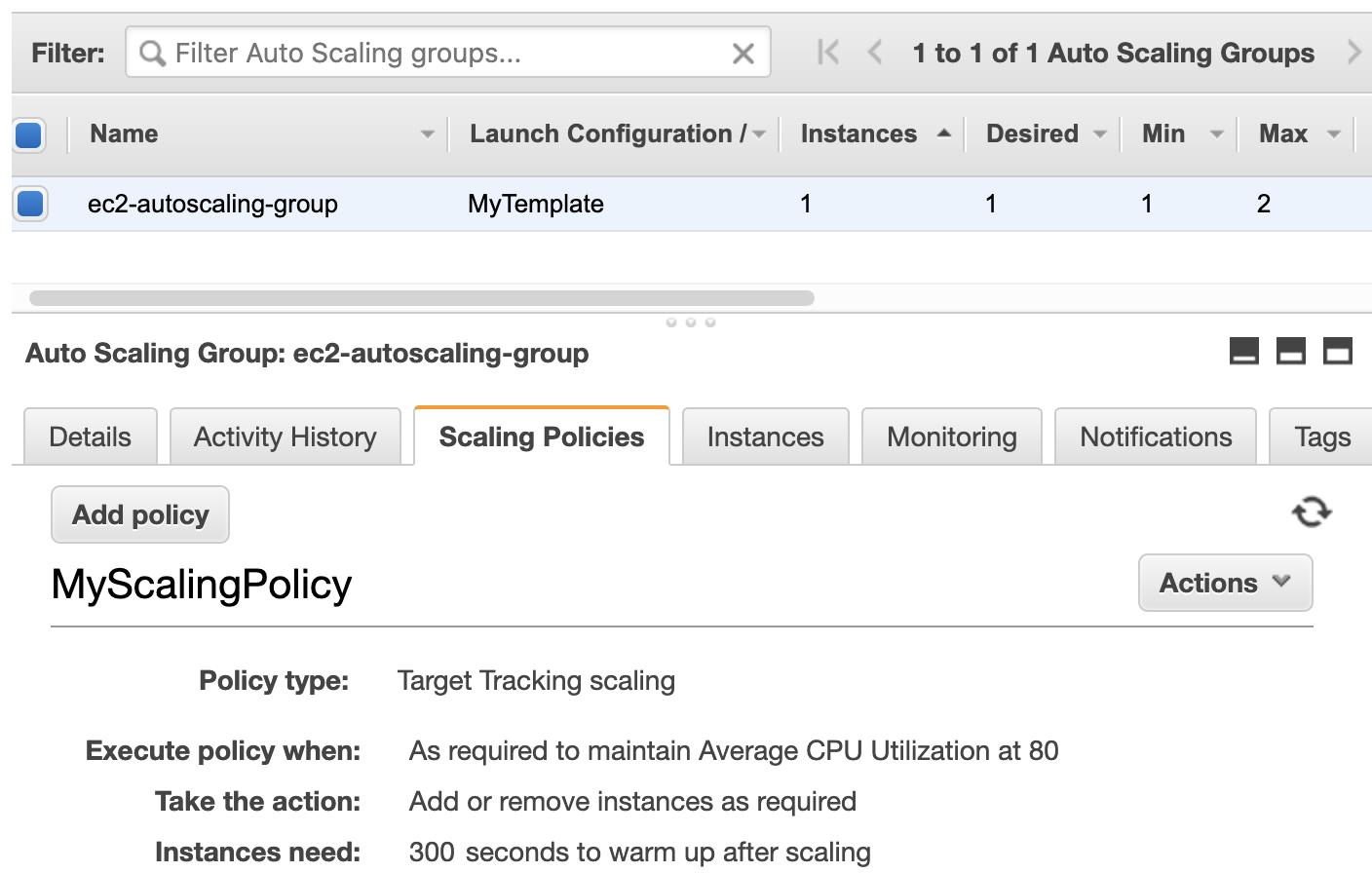

Let’s say we have an EC2 Auto Scaling group with dynamic scaling enabled. We have a scaling policy in place that adds a new instance when average CPU utilization across the group exceeds 80%, as shown in the following screenshot. When CPU usage drops back to a safe level, the group automatically terminates instances to reduce our costs.

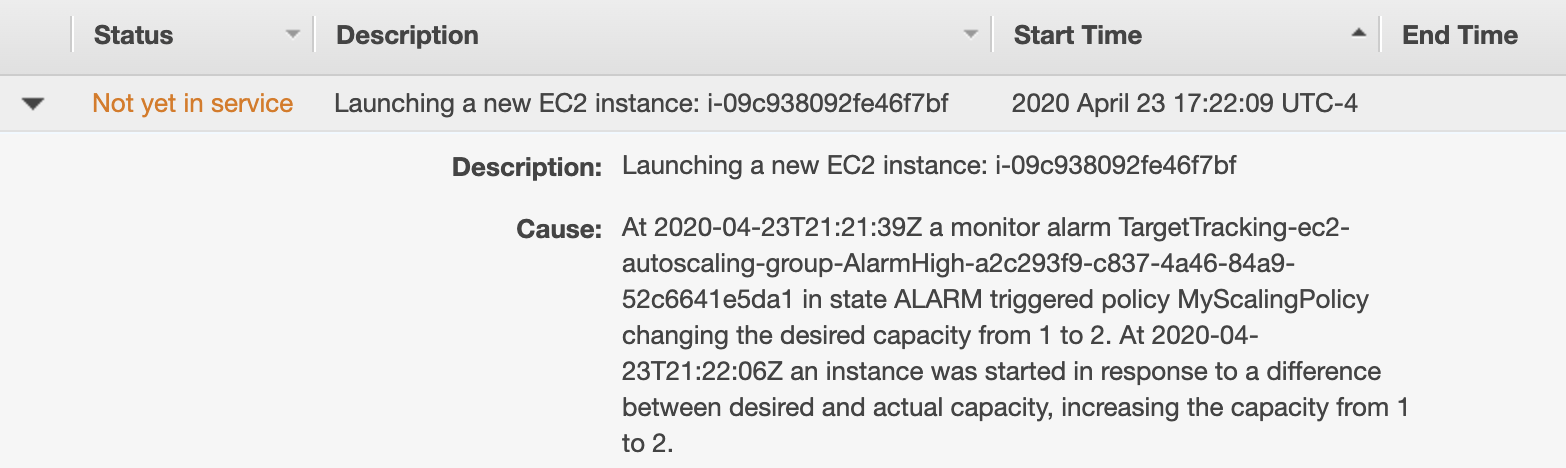
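If you manage the policy in code rather than the console, one way to express it is a target tracking policy on the group’s average CPU utilization. This is a sketch, not necessarily the configuration in the screenshot: the group name `web-asg` and the policy name are hypothetical, and the function only builds the keyword arguments for the Auto Scaling client’s `put_scaling_policy` call so it can be checked without AWS access. Target tracking also scales in automatically when utilization falls, which matches the behavior described above.

```python
"""Build a target tracking scaling policy that holds average CPU near 80%.

A sketch: "web-asg" and "cpu-80-target" are hypothetical names.
"""
from typing import Any


def cpu_target_policy(group: str, target_percent: float = 80.0,
                      name: str = "cpu-80-target") -> dict[str, Any]:
    """Keyword arguments for the Auto Scaling client's put_scaling_policy call."""
    return {
        "AutoScalingGroupName": group,
        "PolicyName": name,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                # Average CPUUtilization across all instances in the group.
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": target_percent,
        },
    }


params = cpu_target_policy("web-asg")
# With credentials configured:
#   import boto3
#   boto3.client("autoscaling").put_scaling_policy(**params)
```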

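We can test this by using Gremlin to run a CPU attack. We’ll target our EC2 instance (or every instance in the group, since the policy tracks the group’s average), run a CPU attack that pushes utilization above the 80% threshold, then monitor the Auto Scaling group in the AWS console. Once the CloudWatch alarm behind the scaling policy fires, we should see EC2 provision a new instance.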

When the attack stops and CPU utilization falls, we’ll see EC2 automatically terminate the newly provisioned instance. This shows us that instead of paying for excess capacity on every node, we can use smaller instances and let Auto Scaling groups scale our workloads up or down as necessary.
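Instead of watching the console, the experiment’s expected outcome can also be checked in code. A minimal sketch, assuming a boto3 Auto Scaling client: `in_service_count` reads `describe_auto_scaling_groups`, and `wait_until` polls until the count meets a condition or a timeout expires. The group name and timeouts in the comments are hypothetical; scale-in is typically more conservative than scale-out, so it gets the longer timeout.

```python
"""Check that the group scales out during the CPU attack and back in afterward."""
import time
from typing import Callable


def in_service_count(client, group: str) -> int:
    """Count InService instances using a boto3 Auto Scaling client."""
    resp = client.describe_auto_scaling_groups(AutoScalingGroupNames=[group])
    instances = resp["AutoScalingGroups"][0]["Instances"]
    return sum(1 for inst in instances if inst["LifecycleState"] == "InService")


def wait_until(get_count: Callable[[], int], predicate: Callable[[int], bool],
               timeout: float, poll: float = 15.0) -> int:
    """Poll `get_count` until `predicate` holds; raise TimeoutError otherwise."""
    deadline = time.monotonic() + timeout
    while True:
        count = get_count()
        if predicate(count):
            return count
        if time.monotonic() >= deadline:
            raise TimeoutError(f"instance count stuck at {count}")
        time.sleep(poll)


# Hypothetical usage ("web-asg" and the timeouts are placeholders):
#   asg = boto3.client("autoscaling")
#   count = lambda: in_service_count(asg, "web-asg")
#   baseline = count()
#   ... start the CPU attack ...
#   wait_until(count, lambda n: n > baseline, timeout=600)    # scale-out
#   ... attack ends ...
#   wait_until(count, lambda n: n == baseline, timeout=1800)  # scale-in
```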

Identifying non-essential infrastructure

Over time, organizations tend to accumulate infrastructure that’s no longer necessary, but still costs money. Maybe an employee spun up an instance for testing and forgot to terminate it, or migrated an application to a new instance and never decommissioned the old one. Maybe a configuration management script left behind artifacts, and nobody on the team knows how they got there.
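A reasonable place to start looking for such instances is anything that has been running for a long time with no owner recorded. This sketch filters a `describe_instances` response; the `Owner` tag key and the 90-day cutoff are hypothetical conventions, and `LaunchTime` reflects the instance’s most recent start, so a stop and start resets it. The result is a list of instances to test, not to terminate.

```python
"""Shortlist instances nobody claims: running, older than a cutoff, no owner tag.

A sketch over a describe_instances response. The "Owner" tag key and the
90-day cutoff are hypothetical conventions; substitute your own.
"""
from datetime import datetime, timedelta, timezone
from typing import Any, Optional


def unclaimed_instances(resp: dict[str, Any], owner_tag: str = "Owner",
                        min_age: timedelta = timedelta(days=90),
                        now: Optional[datetime] = None) -> list[str]:
    """IDs of running instances older than `min_age` that lack `owner_tag`."""
    now = now or datetime.now(timezone.utc)
    found = []
    for reservation in resp.get("Reservations", []):
        for inst in reservation.get("Instances", []):
            tags = {t["Key"]: t["Value"] for t in inst.get("Tags", [])}
            # boto3 returns LaunchTime as a timezone-aware datetime.
            old_enough = now - inst["LaunchTime"] >= min_age
            running = inst["State"]["Name"] == "running"
            if running and old_enough and owner_tag not in tags:
                found.append(inst["InstanceId"])
    return found
```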

The risk is that some of these instances might actually provide essential services, so simply shutting them down or terminating them could cause an outage. What we can do instead is use Chaos Engineering to test whether they’re essential by slowing or blocking network traffic to the instances in question and observing the impact on our applications.

Testing instance essentiality with Chaos Engineering

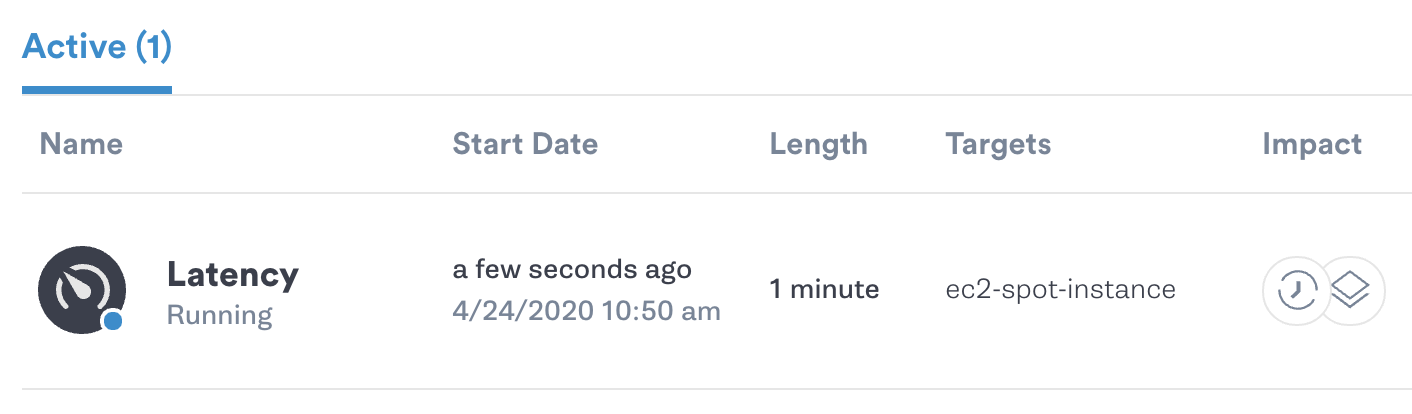

If an instance provides necessary functionality to our application, then limiting traffic to it should have an immediate and noticeable impact. With Gremlin, we can use a latency attack to slow down network requests to the instance in question and observe the effect on user requests.

Here, it helps to have a monitoring solution in place that measures response times for requests to our application. If response times rise sharply after the latency attack starts, we can infer that the targeted instance is essential. Otherwise, we can increase the magnitude of the experiment by running a blackhole attack, which halts all network traffic, or a shutdown attack. If neither attack affects our applications, we can mark the instance for termination and eliminate its cost entirely.
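To turn “a sharp increase in response time” into a repeatable check, compare a high percentile of response times from before the attack with the same percentile during it. A sketch, assuming you can export per-request latencies in milliseconds from your monitoring tool; the 1.5x ratio is a hypothetical threshold to tune against your own objectives.

```python
"""Decide whether a latency attack had a noticeable impact on response times."""
import statistics


def p95_ms(samples_ms: list[float]) -> float:
    """95th percentile of response times in milliseconds (needs 2+ samples)."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples_ms, n=20)[-1]


def looks_essential(baseline_ms: list[float], attack_ms: list[float],
                    max_ratio: float = 1.5) -> bool:
    """True if p95 latency during the attack exceeds `max_ratio` times the baseline."""
    return p95_ms(attack_ms) > max_ratio * p95_ms(baseline_ms)
```

If `looks_essential` stays `False` through the latency attack, that is the signal to escalate to the blackhole and shutdown attacks rather than a reason to terminate right away.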

Conclusion

As we mentioned at the start of this article, Amazon EC2 is a complex service with a lot of moving parts. This isn’t an exhaustive list of cost-management strategies for EC2, but these techniques can drastically reduce the cost of an EC2 deployment. It’s more important now than ever to provide customers with reliable service while simultaneously reducing our costs, and Chaos Engineering allows us to do both safely and effectively.