More and more teams are moving away from monolithic applications and towards microservice-based architectures. As part of this transition, development teams are taking more direct ownership over their applications, including their deployment and operation in production. A major challenge these teams face isn't in getting their code into production (we have containers to thank for that), but in making sure their services are reliable.

In this blog, we'll present methods for defining, quantifying, and tracking the reliability of your services.

What is a service?

A service is a set of functionality provided by one or more systems within an environment. Multiple services can be connected to form a larger application, yet each service can be developed, deployed, and managed independently of others. This method of building applications is called a microservice architecture.

By design, services are:

- Small, lightweight, easy to deploy, and easy to recreate.

- Loosely coupled and operated independently of each other.

- Managed by individual development teams rather than entire departments or dedicated admins.

The most common method of implementing services today is with containers and container orchestrators like Kubernetes. Containers allow developers to package their code and dependencies into lightweight, discrete executables, which can then be deployed and scaled as needed. Container orchestrators provide a common platform for different teams to deploy their containers to, while automating common functionality like networking, service discovery, and resource allocation.

A service doesn't necessarily have to be a container, though. Any collection of systems, components, or processes can be defined as a service. For example, if you have a Java application running simultaneously on two bare metal servers with a load balancer directing traffic between them, then that's a service. Likewise, if you have a Kubernetes Deployment running two instances of the same container, that's also a service.

Why is service reliability important?

A service might only be one small part of a much larger application, but that doesn't reduce its importance. A service might provide functionality that other services require in order to operate, making it a dependency. If your service fails, it could have a cascading effect that takes down other services and potentially the entire application.

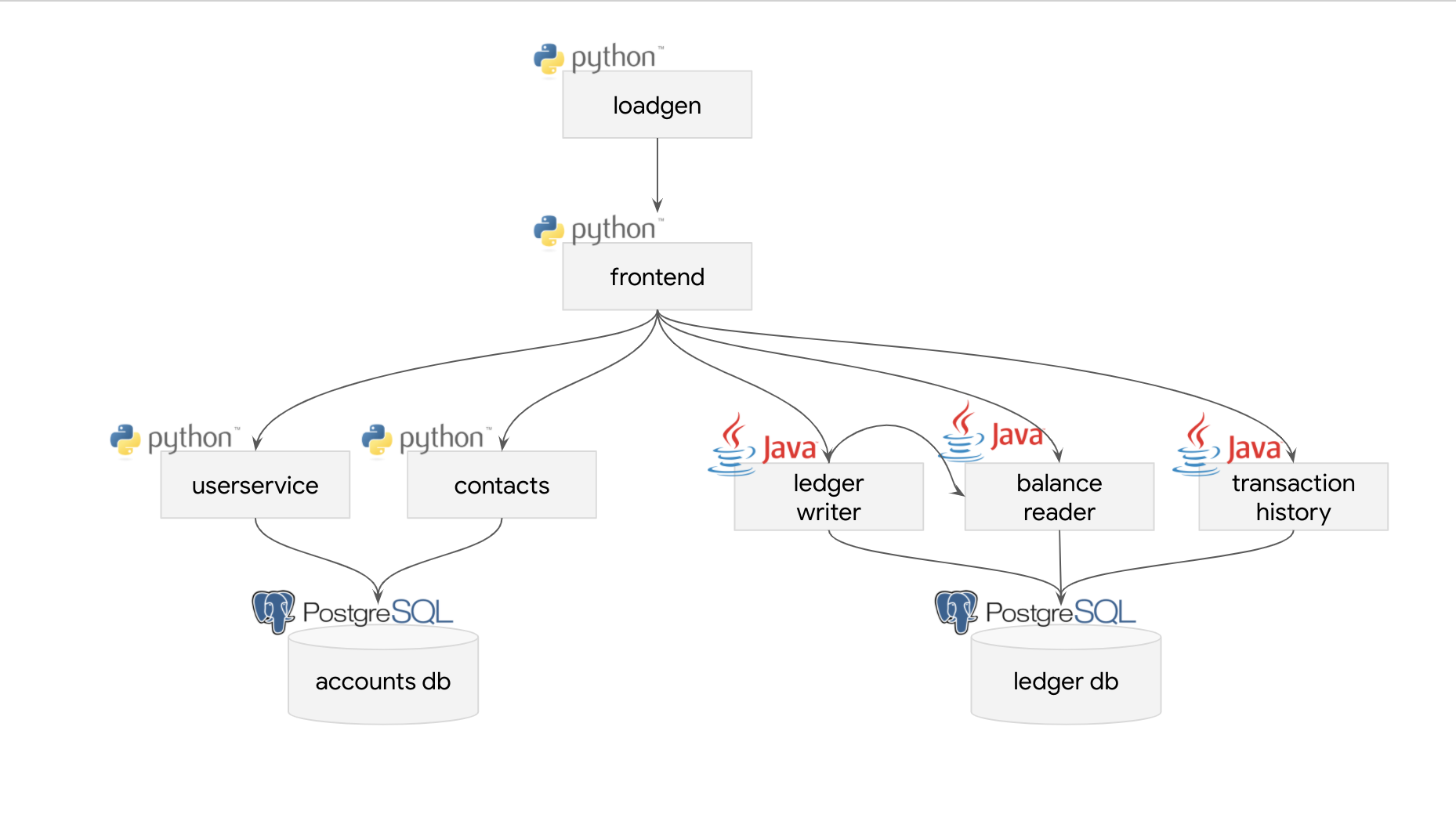

For example, imagine you're a developer at a bank, specifically the Bank of Anthos. Your team owns the balance-reader service, which is used to retrieve a customer's account balance from the ledger. The balance-reader service is called directly by the frontend and ledger-writer services, making it a dependency of those two services. Now imagine that your balance-reader service fails for some reason. How does this impact the frontend and ledger-writer? We can make a basic assumption that users will no longer be able to view their balance, but do we know what the website or mobile banking app will display? Also, what happens if a customer tries to make a transaction? Will the ledger-writer notice that balance-reader is down and return an error, will it time out, or will it fail silently? Will the frontend block the transaction, display an error, or complete the transaction anyway?

There's a chance that neither the frontend service nor the ledger-writer have error handling in place to detect this type of outage. In this case, they may start displaying critical errors, throwing unhandled exceptions, or crashing. Is it your fault that these services failed because your balance-reader failed? Not at all, it's up to those teams to make sure their services can withstand outages. Nonetheless, taking the time to make your own service as reliable as possible can only benefit everyone.
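As a rough illustration of the kind of error handling a calling service could add, here is a minimal Python sketch. It assumes a hypothetical `fetch_balance` client function for balance-reader that raises `TimeoutError` or `ConnectionError` when the dependency is slow or down. The names `BalanceView` and `render_balance` are invented for this example and are not part of Bank of Anthos.

```python
from dataclasses import dataclass
from typing import Callable, Optional

UNAVAILABLE = "Balance is temporarily unavailable. Please try again."


@dataclass
class BalanceView:
    balance: Optional[float]  # None when the balance could not be retrieved
    message: str


def render_balance(fetch_balance: Callable[[str], float], account_id: str) -> BalanceView:
    """Call a (hypothetical) balance-reader client and degrade gracefully on failure."""
    try:
        balance = fetch_balance(account_id)
    except (TimeoutError, ConnectionError):
        # The dependency is slow or down: show a clear message instead of crashing.
        return BalanceView(balance=None, message=UNAVAILABLE)
    return BalanceView(balance=balance, message="OK")
```

With handling like this, a balance-reader outage turns into a degraded page rather than an unhandled exception in the caller.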

Learn how to uncover and improve your application's critical services in our blog post, "Understanding your application's critical path."

That said, how exactly do you make a service more reliable, and how can you measure reliability in a meaningful way? We'll answer these next.

How do you measure service reliability?

As with a monolithic application, measuring the reliability of a service requires using observability to gather metrics. However, services add some complexities of their own:

- Containers are almost like mini-servers in that they have their own computing resource pool, process space, and network address space.

- Services can consist of multiple containers distributed across different servers.

- Containers can scale up and down on demand, which means a container may no longer exist by the time we see its metrics in our observability tool.

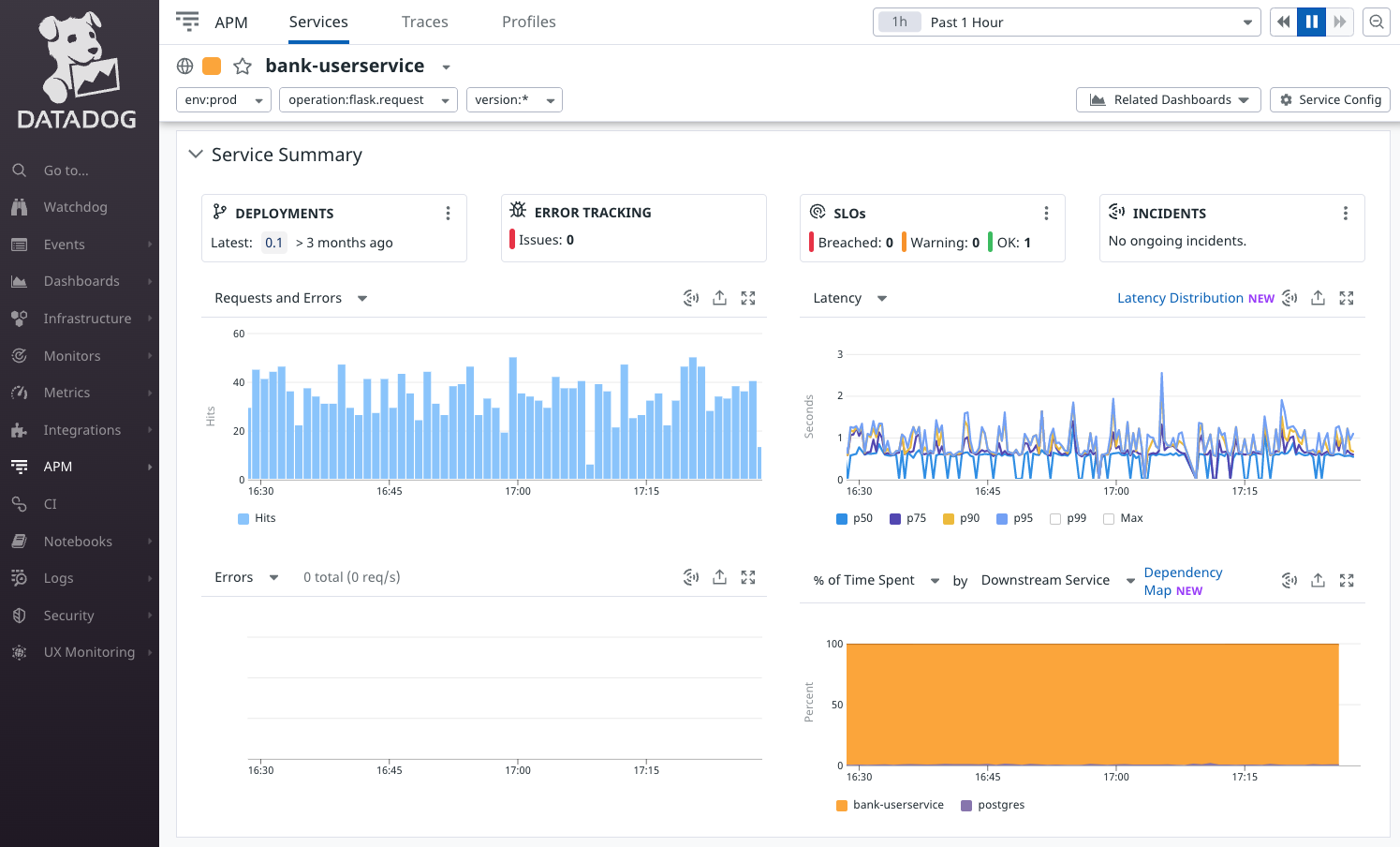

The best way to get a complete view of a service is by consolidating metrics from different containers into a monitoring or APM tool.
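A monitoring or APM tool normally does this consolidation for you, but the idea can be sketched in a few lines of Python. The example below assumes a hypothetical `Sample` record (service name, container ID, request count, error count) and rolls per-container samples up into per-service totals, so that a container that has since been scaled away still counts toward its service.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Sample(NamedTuple):
    service: str
    container_id: str
    requests: int
    errors: int


def consolidate(samples: Iterable[Sample]) -> Dict[str, Dict[str, float]]:
    """Roll per-container samples up into one summary per service."""
    totals = defaultdict(lambda: {"requests": 0, "errors": 0, "containers": set()})
    for s in samples:
        t = totals[s.service]
        t["requests"] += s.requests
        t["errors"] += s.errors
        t["containers"].add(s.container_id)
    return {
        service: {
            "requests": t["requests"],
            "errors": t["errors"],
            "containers": len(t["containers"]),
            # Avoid dividing by zero for services that saw no traffic.
            "error_rate": t["errors"] / t["requests"] if t["requests"] else 0.0,
        }
        for service, t in totals.items()
    }
```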

Choosing the right metrics

There's a lot of data to collect about a service, but only a few metrics are relevant to our team and to our users. According to Google, there are Four Golden Signals to start with: latency, traffic, errors, and saturation.

- Latency is the amount of time between when a user sends a request and when the service responds. Lower latencies are better, since this indicates that users are being served faster.

- Traffic is the amount of demand the service is handling. This is often measured in HTTP requests per second, connections per second, or bandwidth usage. We can also use metrics specific to our service, such as transactions processed per minute.

- Errors is the rate of requests that fail. The criteria for a failure depend on the service, but the most common one is the service responding to a request with an HTTP 5XX status code.

- Saturation is the amount of resources your service is consuming out of all the resources available to it. Remember that your containers may only have a limited amount of resources allocated to them. High saturation indicates that you might need to scale up your service by increasing your container resource limits, adding additional container replicas, or increasing the capacity of your servers.
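To make the four signals concrete, here is a minimal Python sketch that derives them from a window of request records. The `Request` type, the choice of the 95th percentile for latency, and the use of CPU cores as the saturation resource are all assumptions made for illustration. In practice your observability tool computes these for you.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Request:
    duration_ms: float
    status: int  # HTTP status code


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def golden_signals(
    requests: Sequence[Request],
    window_seconds: float,
    cpu_used_cores: float,
    cpu_limit_cores: float,
) -> Dict[str, float]:
    if not requests:
        raise ValueError("need at least one request in the window")
    failed = sum(1 for r in requests if 500 <= r.status <= 599)
    return {
        "latency_p95_ms": percentile([r.duration_ms for r in requests], 95),
        "traffic_rps": len(requests) / window_seconds,
        "error_rate": failed / len(requests),
        # Share of the allocated CPU that is in use (assumed saturation resource).
        "saturation": cpu_used_cores / cpu_limit_cores,
    }
```

Latency is reported as a percentile rather than an average because a handful of very slow requests can hide behind a healthy-looking mean.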

You'll notice there aren't any resource-specific metrics like CPU usage, RAM usage, or disk usage. Now that teams have access to near-infinitely scalable cloud computing platforms, monitoring low-level resource consumption has become less relevant than monitoring user experience metrics like latency. This doesn't mean these metrics are irrelevant—for instance, monitoring CPU usage is necessary for monitoring saturation—but don't rely on them as your sole form of observability.

How do you validate service reliability?

Now that you know what to measure and how to measure it, you can start running reliability tests to validate that your service is reliable. This will let you answer questions such as:

- Does my service remain available even if I lose one or more containers?

- If one of my hosts fails unexpectedly, will my service automatically migrate to another host? And do I have enough replicas running on other hosts to avoid downtime?

- How does the user experience change when there's an additional 10 milliseconds of network latency? What about 100 ms? 1000 ms?

Scenarios like these happen more frequently than you might expect. For example, in September 2021, AWS had an incident in one availability zone (AZ) of its us-east-1 region where new EC2 instances failed to launch, and existing instances had issues accessing data stored on attached EBS volumes. Their recommendation to customers was to fail over to another availability zone, but many weren't prepared to do so, and their applications went down as a result.

Imagine if you were using EC2 at that time. Maybe you were using Amazon Elastic Kubernetes Service (EKS) and hosting your Kubernetes cluster on EC2. If one of your instances failed due to the EBS problem, how confident would you be that your service would keep running? Are you confident that you have enough replicas running to handle the shift in user traffic, or that you have nodes running in a separate AZ capable of taking over for the failed node? This is where tools like Gremlin come in by running tests that demonstrate how well you meet these reliability requirements.
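Before running a real failover test, a back-of-the-envelope check can show whether a replica layout has any chance of surviving the loss of one AZ. The Python sketch below is only that: it assumes you already know how many replicas run in each zone and how many you need to serve your traffic (`required_replicas`, a number you would have to determine yourself). The zone names in the usage note are illustrative.

```python
from typing import Dict, Mapping


def survives_zone_loss(
    replicas_by_zone: Mapping[str, int], required_replicas: int
) -> Dict[str, bool]:
    """For each zone, report whether the remaining replicas still meet the requirement if that zone is lost."""
    total = sum(replicas_by_zone.values())
    return {
        zone: (total - count) >= required_replicas
        for zone, count in replicas_by_zone.items()
    }
```

For example, `survives_zone_loss({"us-east-1a": 3, "us-east-1b": 1}, required_replicas=2)` reports `False` for `us-east-1a`, because losing that zone leaves a single replica. A check like this says nothing about whether failover actually works, which is what a reliability test is for.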

With Gremlin, the question changes from "how do I know my service is reliable" to "how do I make my service more reliable?" Gremlin performs the tests necessary to ensure your service is reliable and provides a complete measurement in the form of a reliability score. Simply define your service, integrate your monitoring or observability tool, and specify the golden signals that Gremlin should monitor throughout the test. Then, use our one-click tool to run reliability tests like AZ failover, CPU consumption, or dependency latency to validate that your service is reliable. This way, you can not only feel confident about your own service, but also demonstrate to your team that all of your reliability work is paying off.

Conclusion

Like it or not, modern applications are built on services. As ownership and control over services shift away from operations teams and towards developers, it's more important than ever that teams work reliability into their workflows. Proactively improving the reliability of your own services demonstrates to your customers and co-workers that your service is dependable.