What happens when the DNS service we use suddenly becomes unavailable? Users of Dyn found out when the company was hit by a Distributed Denial of Service (DDoS) attack. In this third post of a series, we take a blameless look at the Dyn outage to learn what we can do to enhance the reliability of our own systems.

What are DNS Servers and What Does DNS Stand For?

There’s an old joke among systems administrators: “It’s always DNS’s fault!” What are they talking about? Simply put, networking. If networking fails, applications don’t work, especially in today’s distributed, microservices-based, cloud-computing environments.

DNS, the Domain Name System, is what allows us to connect to websites by name instead of having to remember and type IP addresses. It works like a phone book, or perhaps a translator: it accepts human-readable input, specifically hostnames like gremlin.com, and returns the IP addresses registered for those names. This lookup is called resolving, and servers that perform it on behalf of clients are called DNS resolvers.
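From an application’s point of view, resolving is usually a single library call that hands the hostname to the operating system’s resolver. Here is a minimal Python sketch using the standard library’s `socket.getaddrinfo`; the `resolve` helper name is our own.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses the system resolver reports for hostname."""
    # getaddrinfo delegates to the operating system's configured resolver,
    # which may consult /etc/hosts, a local cache, or a remote DNS server.
    infos = socket.getaddrinfo(hostname, None)
    addresses: list[str] = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # the address is the first element for IPv4 and IPv6
        if ip not in addresses:  # one entry per address, in the order returned
            addresses.append(ip)
    return addresses
```

On a machine with network access, `resolve("gremlin.com")` returns whatever addresses the configured resolver reports; the exact list varies by network and over time.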

The Domain Name System is distributed: no single server holds every record. At the top of the hierarchy are the root name servers, which serve the root zone and refer queries to the servers responsible for top-level domains such as .com; those in turn refer queries to each domain’s authoritative servers. The root servers are a redundant set hosted in multiple secure sites, all with high-bandwidth access and scattered across the globe.
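To make the hierarchy concrete, here is a toy model of iterative resolution: a resolver starts at a root server and follows referrals to more specific servers until one returns an answer. The server names, zone data, and the `iterative_resolve` helper are all hypothetical, and the address comes from the 192.0.2.0/24 documentation range. Real resolvers also handle caching, multiple servers per zone, and many record types.

```python
# Hypothetical in-memory stand-ins for real name servers. Each server either
# answers with an address or refers the resolver to a more specific server.
SERVERS = {
    "root": {"referrals": {"com.": "com-tld"}},
    "com-tld": {"referrals": {"example.com.": "example-auth"}},
    "example-auth": {"answers": {"www.example.com.": "192.0.2.10"}},
}


def iterative_resolve(name: str, start: str = "root") -> tuple[str, list[str]]:
    """Follow referrals from `start` until a server answers.

    Returns the address and the list of servers consulted along the way.
    """
    server, path = start, []
    while True:
        path.append(server)
        data = SERVERS[server]
        if name in data.get("answers", {}):
            return data["answers"][name], path
        # Pick the most specific delegated zone that contains the name.
        matches = [zone for zone in data.get("referrals", {})
                   if name == zone or name.endswith("." + zone)]
        if not matches:
            raise LookupError(f"{server} has no data for {name}")
        server = data["referrals"][max(matches, key=len)]
```

`iterative_resolve("www.example.com.")` returns `("192.0.2.10", ["root", "com-tld", "example-auth"])`, showing the three levels of the hierarchy the query passes through.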

For convenience and speed, internet service providers (ISPs) typically run their own DNS resolvers, which query the authoritative servers on their customers’ behalf and cache the answers. Answering repeat lookups from the cache makes access to websites faster for customers and reduces the load on the root and other authoritative servers.
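Caching is what makes these resolvers fast. The following minimal sketch of a caching resolver assumes a hypothetical `upstream` function that returns an address together with its TTL in seconds; the resolver answers repeat lookups locally until each record’s TTL runs out.

```python
import time
from typing import Callable


class CachingResolver:
    """Toy caching resolver: serves cached answers until each record's TTL expires."""

    def __init__(self, upstream: Callable[[str], tuple[str, int]],
                 clock: Callable[[], float] = time.monotonic):
        self.upstream = upstream  # hypothetical: returns (address, ttl_seconds)
        self.clock = clock        # injectable so expiry can be tested
        self.cache: dict[str, tuple[str, float]] = {}  # name -> (address, expiry)
        self.upstream_queries = 0

    def resolve(self, name: str) -> str:
        now = self.clock()
        cached = self.cache.get(name)
        if cached and cached[1] > now:
            return cached[0]  # still fresh: no upstream traffic
        address, ttl = self.upstream(name)
        self.upstream_queries += 1
        self.cache[name] = (address, now + ttl)
        return address
```

Injecting the clock keeps the sketch deterministic: with a TTL of 300 seconds, two lookups at time 0 cost one upstream query, and a lookup at time 301 costs a second one.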

In addition, many companies, especially larger ones, run their own nameservers for internal use. Again, this is for speed and convenience, and sometimes for the ability to filter traffic and block connections to unwanted addresses. Other companies offload the complexity and burden of running DNS servers to a third-party provider, like Dyn.
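One common way an internal nameserver filters traffic is to check each queried name against a blocklist before resolving it, returning an error instead of an address for blocked names. A minimal sketch of that check, with hypothetical blocklist entries, where blocking a domain also blocks its subdomains:

```python
# Hypothetical blocklist entries for illustration only.
BLOCKLIST = {"ads.example", "malware.example"}


def is_blocked(hostname: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """True if hostname or any of its parent domains is on the blocklist."""
    labels = hostname.lower().rstrip(".").split(".")
    # Check "a.b.c", then "b.c", then "c", so subdomains inherit a block.
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))
```

Matching on whole labels rather than raw string suffixes means `tracker.ads.example` is blocked while `notads.example` is not.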

Who is Dyn?

Dyn is a long-established, respected provider of managed domain name services on the internet. They were founded in 2001 as a community-led project by undergraduate students at Worcester Polytechnic Institute. Dyn was purchased by Oracle in November of 2016. In June 2019, Oracle announced plans to shut down Dyn DNS services on May 31, 2020 in favor of their “enhanced,” paid subscription version on the Oracle Cloud Infrastructure platform.

What Happened in the DDoS Attack?

On October 21, 2016, Dyn was the victim of a series of Distributed Denial of Service (DDoS) attacks. Because many companies used Dyn to host their DNS, the incident affected services and users across the internet: attempts to reach sites and services whose domain names were resolved by Dyn returned DNS errors.

Dyn described the incident as “a sophisticated, highly distributed attack involving 10s of millions of IP addresses.” According to Dyn, the attackers used a Mirai botnet.

What is a Botnet?

A bot is software that automates repetitive tasks; bots are usually scripts. Examples include search engine web crawlers and instant messaging chatbots that respond to simple questions. Bots can also be written for darker tasks. A botnet is a network of internet-connected devices running the same bot, generally for a malicious purpose. A DDoS is often perpetrated by using a botnet to overwhelm a targeted site with more traffic than it can handle. Amazon Web Services (AWS) was targeted while this article was being written, and that attack also resulted in DNS errors. As the Internet of Things (IoT) grows and more devices are sold with always-on internet connections, it becomes easier to find machines to subvert into a botnet, increasing the potential intensity of an attack.

The botnet flooded Dyn’s network with connections to port 53, the network port used for DNS resolution, commonly called the DNS port. Dyn’s postmortem analysis states that, “Early observations of the TCP attack volume from a few of our datacenters indicate packet flow bursts 40 to 50 times higher than normal. This magnitude does not take into account a significant portion of traffic that never reached Dyn due to our own mitigation efforts as well as the mitigation of upstream providers. There have been some reports of a magnitude in the 1.2 Tbps range; at this time we are unable to verify that claim.”
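For context on what ordinary traffic to port 53 looks like, here is a minimal sketch that builds a standard DNS query message in the RFC 1035 wire format: a 12-byte header followed by one question. The `build_query` helper is our own; it only constructs bytes and sends nothing.

```python
import random
import struct
from typing import Optional


def build_query(hostname: str, qtype: int = 1,
                query_id: Optional[int] = None) -> bytes:
    """Build a minimal DNS query message (RFC 1035) for hostname.

    qtype 1 requests an A record. A client typically sends the result as a
    single UDP datagram to port 53.
    """
    if query_id is None:
        query_id = random.randint(0, 0xFFFF)
    # Header: ID, flags (0x0100 = recursion desired), QDCOUNT=1,
    # ANCOUNT=0, NSCOUNT=0, ARCOUNT=0, all as big-endian 16-bit integers.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed with its length, ended by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # QCLASS 1 = IN (internet)
    return header + question
```

A query for `gremlin.com` is only 29 bytes. A client would send it with `sock.sendto(query, (resolver_address, 53))` on a UDP socket, where `resolver_address` is whatever resolver it is configured to use.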


At least two waves of attacks occurred (some media outlets reported a third wave, but that may have been residual impact from the earlier attacks). In each case Dyn was able to identify the attack and mitigate it to restore service, but not before significant damage had been done. Many companies were impacted, and some switched to different DNS providers as a result. Dyn was the target of another attack on November 7, 2016, and stated that it was “constantly dealing with DDoS attacks.”

Here is a timeline of events for the October 21, 2016 attack (all times are in UTC):

11:10 - First wave of the DDoS attack kicks off

13:20 - Dyn is able to mitigate the attack and restore service

15:50 - Second “more globally diverse” wave of the attacks begins

17:00 - Second wave of attacks is largely mitigated

20:30 - Residual impacts of the attack are resolved

Which Customers Were Impacted by the DNS Unavailability?

According to Wikipedia, services for these customers were all impacted: Airbnb, Amazon.com, Ancestry.com, The A.V. Club, BBC, The Boston Globe, Box, Business Insider, CNN, Comcast, CrunchBase, DirecTV, The Elder Scrolls Online, Electronic Arts, Etsy, FiveThirtyEight, Fox News, The Guardian, GitHub, Grubhub, HBO, Heroku, HostGator, iHeartRadio, Imgur, Indiegogo, Mashable, National Hockey League, Netflix, The New York Times, Overstock.com, PayPal, Pinterest, Pixlr, PlayStation Network, Qualtrics, Quora, Reddit, Roblox, Ruby Lane, RuneScape, SaneBox, Seamless, Second Life, Shopify, Slack, SoundCloud, Squarespace, Spotify, Starbucks, Storify, Swedish Civil Contingencies Agency, Swedish Government, Tumblr, Twilio, Twitter, Verizon Communications, Visa, Vox Media, Walgreens, The Wall Street Journal, Wikia, Wired, Wix.com, WWE Network, Xbox Live, and Yammer.

Contributing Factors to the DNS Failure

- The DDoS was a large botnet attack that arrived in waves and targeted port 53, the port Dyn's servers used to answer DNS requests. According to Dyn, the attack used “maliciously targeted, masked TCP and UDP traffic over port 53.” Dyn also said the attack “generated compounding recursive DNS retry traffic, further exacerbating its impact.”

- Dyn was one of the top DNS providers on the internet, and its services were used by many other companies.

- Companies that depended on a single DNS provider were greatly impacted during the attacks.

- Many companies had not tested what would happen if their DNS provider failed, or how they would respond.

- Every DNS record, including A and AAAA records, CNAME records, MX records, NS records, and the SOA record, carries a Time To Live (TTL) value that tells resolvers how long they may cache it. Companies that had set low TTLs on their DNS records were likely impacted more by the Dyn incident, because resolvers discarded their cached records sooner and had to query Dyn again. On the other hand, longer TTLs make changes to DNS records take longer to propagate.
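Two of these contributing factors come down to simple arithmetic: retries multiply load, and a TTL bounds how long cached records can ride out an outage. The sketch below models both under simplifying assumptions stated in the docstrings; the traffic figures in the usage note are hypothetical, and only the wave duration comes from the timeline above.

```python
from datetime import datetime


def offered_load(base_qps: float, attempts_per_hop: list[int]) -> float:
    """Worst-case queries/second reaching the last hop when every attempt fails.

    Each entry is the number of attempts (1 + retries) one hop makes per query
    it receives, e.g. [3, 3] for a stub resolver that tries 3 times and a
    recursive resolver that tries 3 times per incoming query. Ignores caching,
    coalescing of duplicate queries, and backoff, which reduce it in practice.
    """
    load = base_qps
    for attempts in attempts_per_hop:
        load *= attempts  # attempts multiply across hops
    return load


def cached_coverage(ttl_s: int, age_s: int, outage_s: int) -> float:
    """Fraction of an outage during which a cached record still resolves.

    ttl_s: the record's TTL; age_s: seconds between the resolver's last fetch
    and the start of the outage; outage_s: outage length in seconds.
    Ignores the "serve stale" behavior that some resolvers implement.
    """
    remaining = max(0, ttl_s - age_s)
    return min(remaining, outage_s) / outage_s


# First wave on October 21, 2016, per the timeline: 11:10 to 13:20 UTC.
FIRST_WAVE_S = int((datetime(2016, 10, 21, 13, 20)
                    - datetime(2016, 10, 21, 11, 10)).total_seconds())
```

With a hypothetical 1,000 legitimate queries per second and three attempts at each of two hops, `offered_load(1000, [3, 3])` gives 9,000 queries per second at the failing provider. For the 130-minute first wave, a record with a 300-second TTL fetched just before the attack covers only about 4% of the outage, while a 24-hour TTL covers all of it.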

Retrospective Response

Dyn carried out mitigation during the attacks, including: “traffic-shaping incoming traffic, rebalancing of that traffic by manipulation of anycast policies, application of internal filtering and deployment of scrubbing services.” After the attacks, Dyn stated that it “will continue to conduct analysis, given the complexity and severity of this attack. We very quickly put protective measures in place during the attack, and we are extending and scaling those measures aggressively. Additionally, Dyn has been active in discussions with internet infrastructure providers to share learnings and mitigation methods.”
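Dyn did not publish the details of its traffic shaping. One common traffic-shaping building block is the token bucket, which admits short bursts but caps the sustained rate. The sketch below shows the idea with hypothetical `rate` and `capacity` values; it is not Dyn’s implementation. Applied per source address, it does much less against a botnet spread across tens of millions of IP addresses, so it is typically only one layer among several.

```python
import time
from typing import Callable


class TokenBucket:
    """Token bucket: allows bursts up to `capacity`, then `rate` requests per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock        # injectable for deterministic tests
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: drop or deprioritize this request
```

With `rate=2` and `capacity=3`, a burst of five requests at the same instant admits three and rejects two; one second later, two more are admitted.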

For Dyn’s customers, the attacks exposed that relying on a single managed DNS provider was a single point of failure. Some companies simply switched from Dyn to a competitor’s managed DNS service, but that only moves the single point of failure to another provider. Other companies began to rely on multiple providers to eliminate it.

How Can I Reproduce a DNS Outage with Gremlin for Reliability?

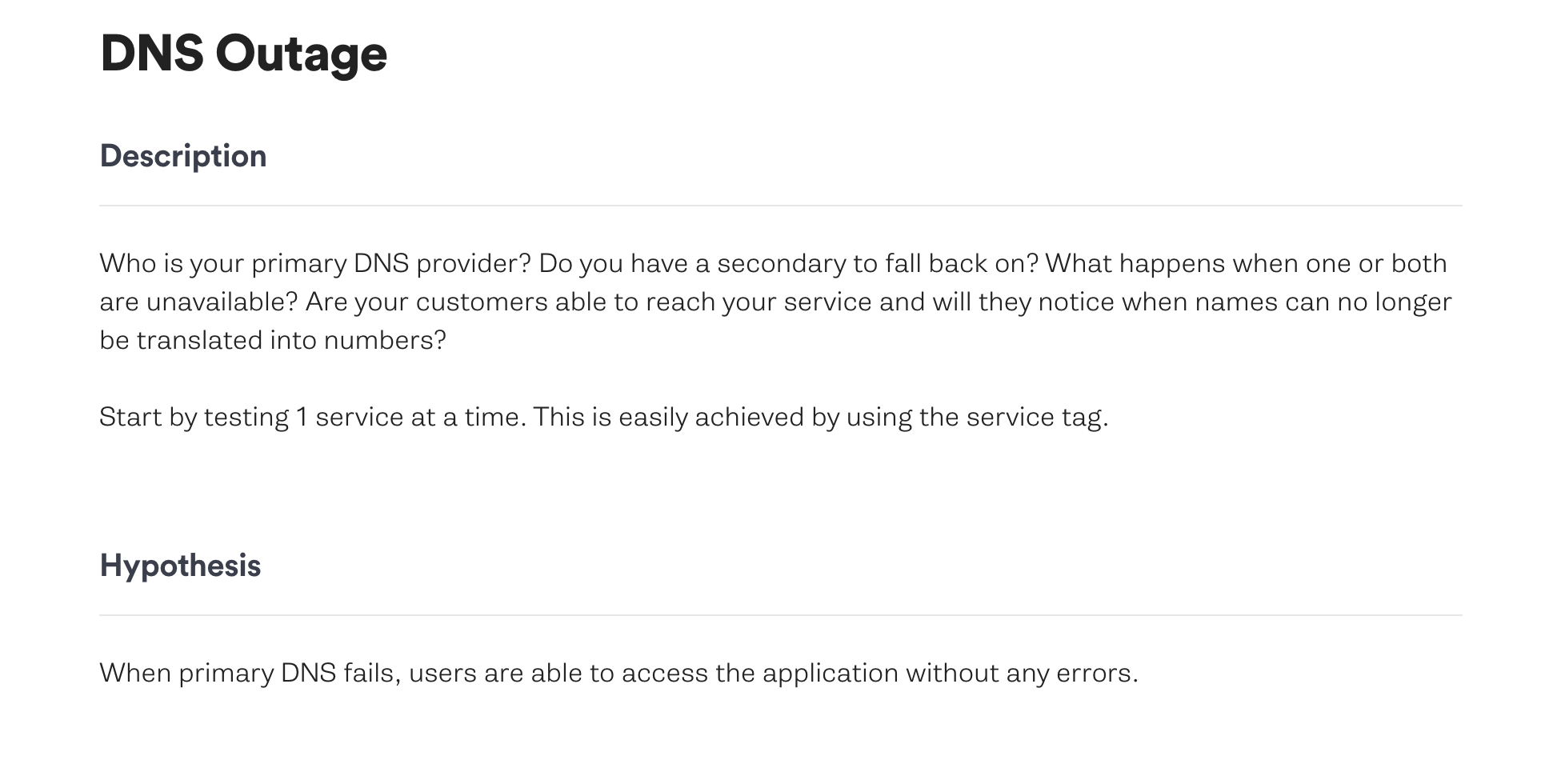

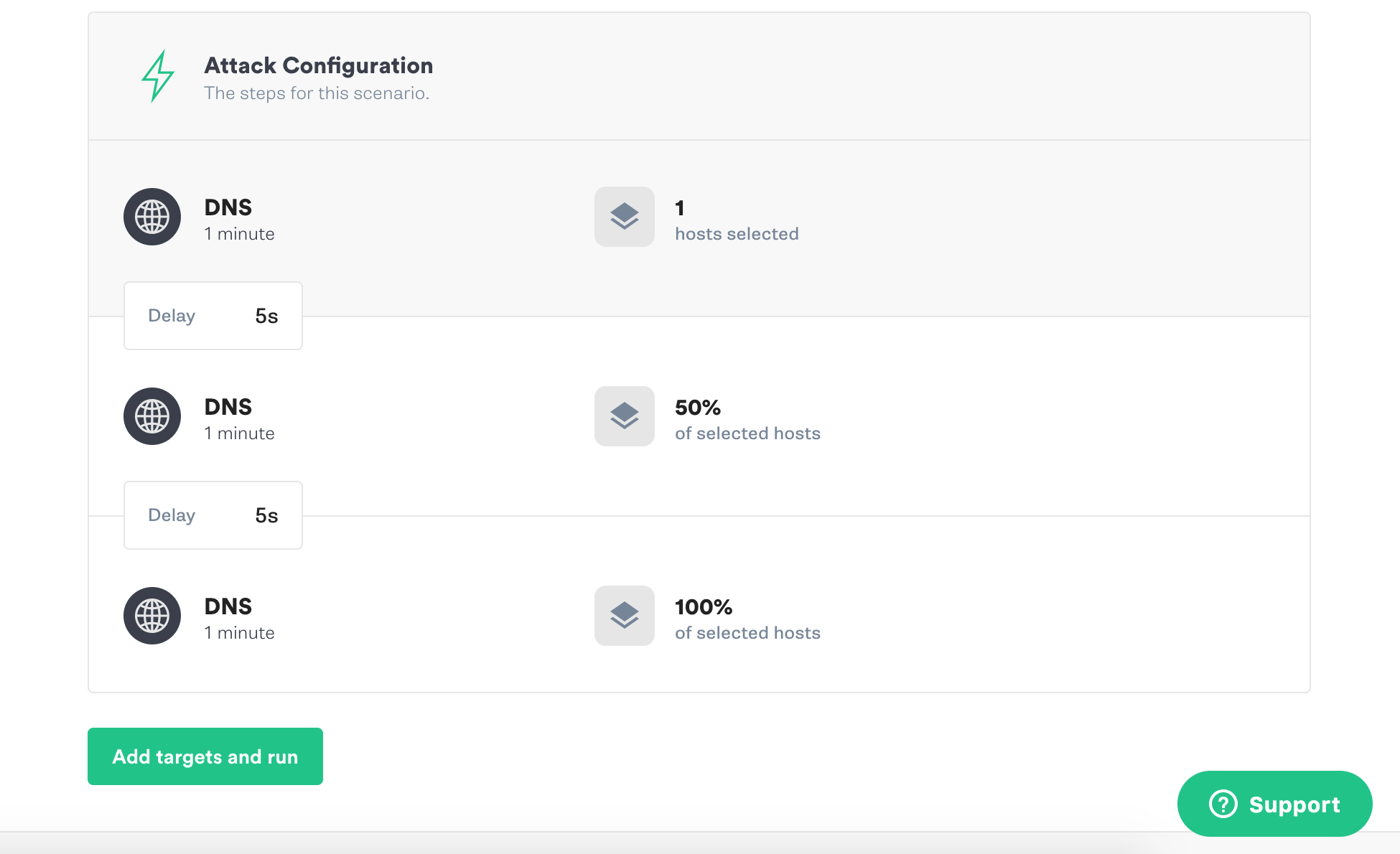

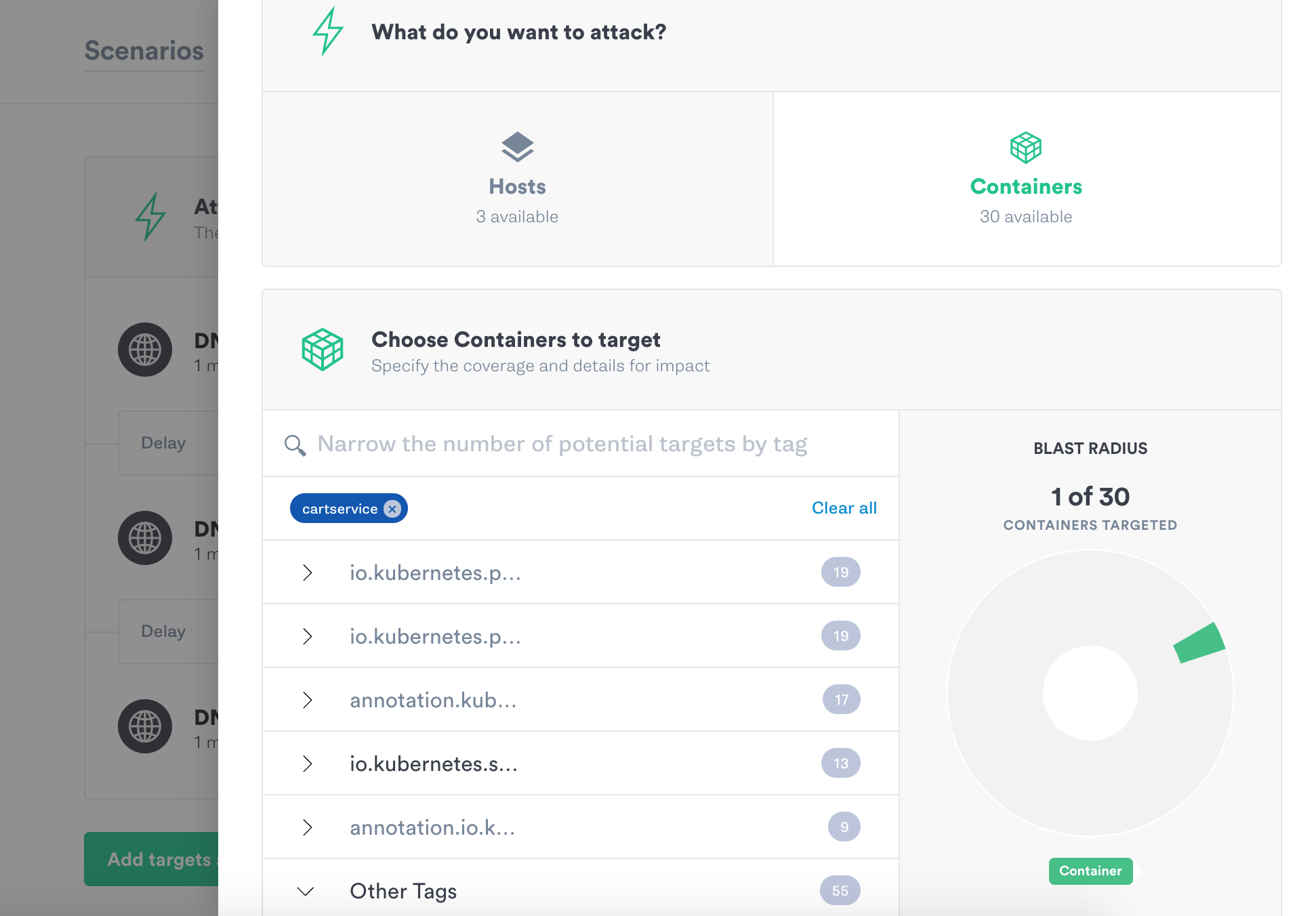

Gremlin Scenarios let you run carefully crafted Chaos Engineering experiments with a controlled blast radius and a magnitude that grows over time, and Gremlin provides a set of recommended scenarios. The DNS Outage recommended scenario can reproduce the impact of a DNS provider failure on your systems.

This scenario uses Gremlin’s DNS attack to block access to DNS servers.

Your selected hosts or containers will be unable to retrieve DNS information for the amount of time you select.

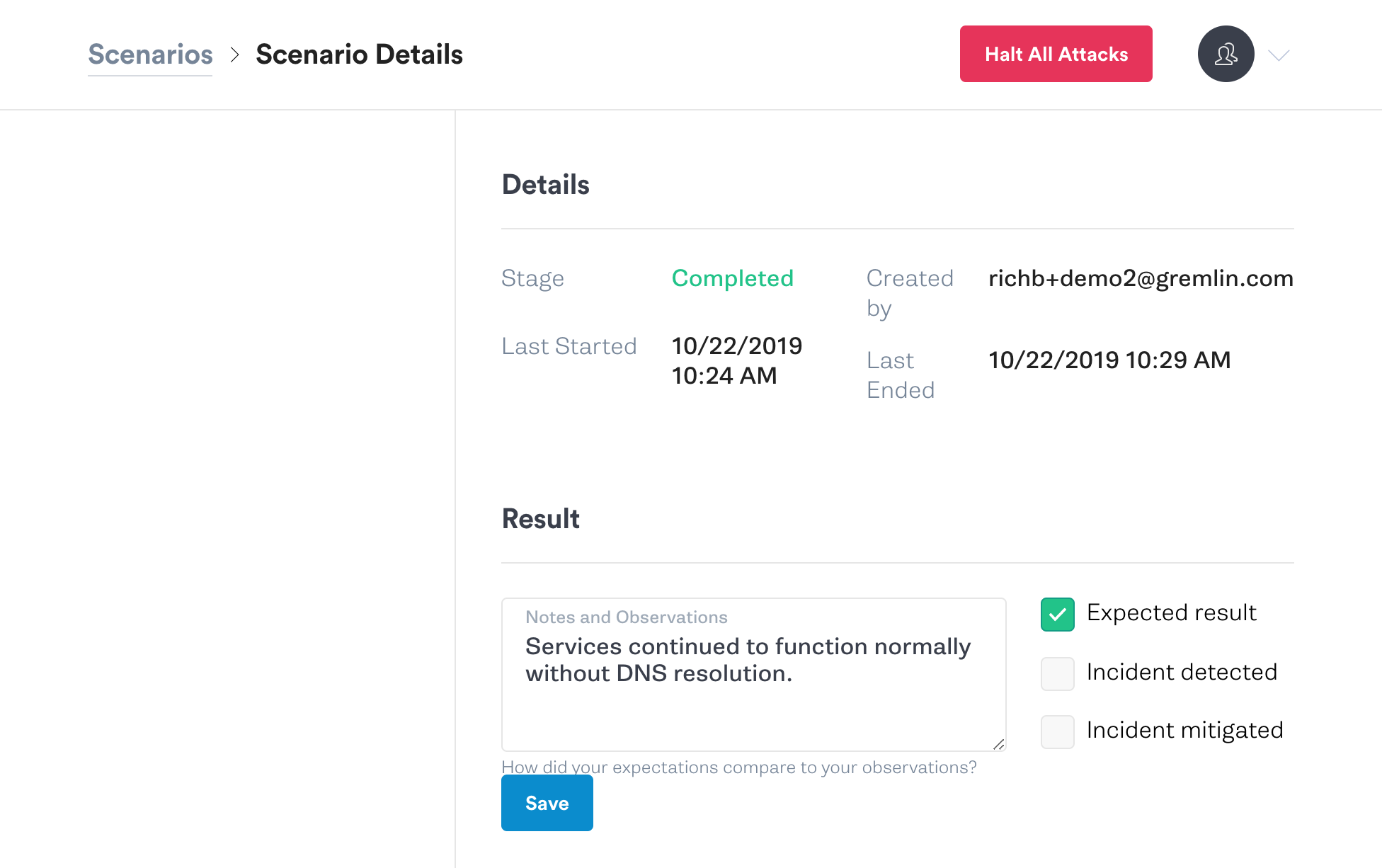

Combined with monitoring data collected before, during, and after the attacks run, this experiment gives you the information you need to make your system more reliable in the event of the loss of a DNS provider.
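If you don’t already have a DNS-specific signal in your monitoring, a simple resolution probe run before, during, and after the experiment makes the impact easy to see. The minimal sketch below (the `probe` helpers are hypothetical, not part of Gremlin) records success and latency for one lookup. It also shows how to check the failure path locally by patching `socket.getaddrinfo` to raise, which complements but does not replace running the real attack.

```python
import socket
import time
from unittest import mock


def probe(hostname: str) -> dict:
    """Resolve hostname once and record success and latency for monitoring."""
    start = time.monotonic()
    try:
        socket.getaddrinfo(hostname, None)
        ok = True
    except socket.gaierror:  # raised when name resolution fails
        ok = False
    return {"host": hostname, "ok": ok,
            "latency_ms": (time.monotonic() - start) * 1000}


def probe_with_simulated_outage(hostname: str) -> dict:
    """Run the probe while getaddrinfo is patched to fail, mimicking lost DNS."""
    failure = socket.gaierror("simulated DNS outage")
    with mock.patch("socket.getaddrinfo", side_effect=failure):
        return probe(hostname)
```

Running `probe` on a schedule and shipping its output to your metrics system gives a before/during/after comparison; the patched variant confirms that your alerting, or your application’s fallback code, handles resolution errors the way you expect.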

What DNS Reliability Enhancements Might I Want to Make?

Failure happens. In complex systems, it is inevitable that a component of the system will fail from time to time. Anticipating those failures and planning for reliability is vital for uptime and customer happiness.

Here are two DNS-related reliability enhancements to consider:

- Use multiple, redundant DNS providers. This can mean using an external DNS provider as the primary and running your own DNS servers internally as a secondary backup, or it can mean using two external DNS providers. No matter how you set it up, you should have a failover scheme and DNS failure mitigation in place.

- Secure your DNS servers and keep them updated. Running your own DNS server is only a good idea if you commit to proper security measures, including DNS Security Extensions (DNSSEC). This is a significant task, which is why third-party providers are common and useful.
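For authoritative DNS, multi-provider setups usually work by publishing NS records for both providers, so recursive resolvers can fail over between them on their own. Applications and internal forwarders can apply the same idea: try providers in a fixed order and fall back when one does not answer. A minimal sketch, where each provider’s `query` callable is hypothetical and is assumed to raise when that provider cannot answer:

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when no configured DNS provider returned an answer."""


def resolve_with_failover(
        name: str,
        providers: Sequence[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try each (provider_name, query) in order; return (provider_name, address).

    Each query callable is a hypothetical stand-in for a lookup against one
    provider; it should raise (for example, on timeout) if it cannot answer.
    """
    errors = []
    for provider_name, query in providers:
        try:
            return provider_name, query(name)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{provider_name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

Collecting each provider’s error in the final exception makes it clear during an incident whether one provider or all of them failed, which is exactly the distinction that mattered to Dyn’s single-provider customers.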